ARM owns the mobile market. Last year the semiconductor IP firm’s processor designs were used in some 16 billion chips, including those at the heart of nearly every smartphone. Its Mali graphics line, which hasn’t been around as long, crossed the one-billion mark in 2016, giving it around half of the phone market.

But the industry is changing. Smartphones are pushing in new directions such as VR and console-quality gaming. The rapid adoption of machine learning is spurring designs capable of running these models at the edge in everything from video cameras to self-driving cars. These applications are in turn placing steep demands on data centers, forcing companies to be more innovative with servers and networking infrastructure.

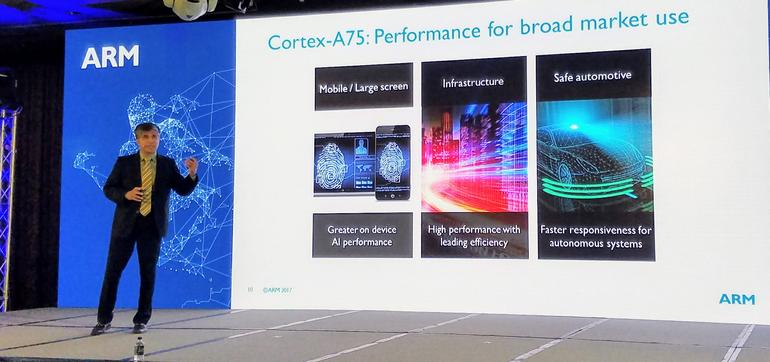

These applications require flexible, heterogeneous designs that mix CPUs, GPUs, and in some cases accelerators, with lots of memory bandwidth and specialized software instructions to deliver new levels of performance at around the same power. The new platform that ARM announced earlier today is designed to deliver this “total compute” with the Cortex-A75 for high-performance compute, the Cortex-A55 for power efficiency, and Mali-G72 graphics for VR, gaming and machine learning.

“ARM is changing to address how compute is changing every day all around us,” said Jem Davies, Vice President and General Manager of the Media Processing Group, at the company’s press conference on the eve of Computex in Taiwan.

The announcement comes one year to the day after ARM announced the Cortex-A73, its first design based on the Artemis microarchitecture. A departure from its predecessor, the Cortex-A72, with a heavier emphasis on efficiency and sustained performance, the A73 was a successful design, finding its way into Qualcomm’s Snapdragon 835 (in the modified form of the Kryo 280), MediaTek’s Helio X30 and HiSilicon’s Kirin 960, among other high-end chips. At the same time, ARM announced the Mali-G71, its first Bifrost GPU, which is used in the Samsung Exynos 8895 that powers the Galaxy S8 and S8+ in many markets, as well as the Kirin 960 in the Huawei Mate 9.

The A75 belongs to the same family as the A73, with a relatively short, out-of-order pipeline, but ARM is clearly after higher performance here, and it has returned to a wider design along the lines of the A72, capable of dispatching more instructions per cycle. All things being equal, the A75 will deliver 20% better performance than the A73. But the A75 will also reach higher frequencies–up to around 3GHz on a 10nm process–delivering a 50% boost in raw integer performance and even more for floating-point and machine learning workloads, according to Nandan Nayampally, Vice President and General Manager of the Compute Product Group. “It’s a substantial uplift in performance,” he added.
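As a rough check on how the 20% and 50% figures fit together, the sketch below assumes the two gains simply multiply (performance is roughly per-clock throughput times frequency). That is a simplification, and the function name and the implied baseline are illustrative; ARM did not state the clock speed of the A73 used for comparison.

```python
def implied_clock_ratio(overall_gain: float, per_clock_gain: float) -> float:
    """Clock ratio needed to reach the overall gain.

    Assumes performance = per-clock throughput x frequency, which ignores
    memory effects and process differences.
    """
    return (1.0 + overall_gain) / (1.0 + per_clock_gain)


# Figures quoted in the article: 20% at equal conditions, 50% overall, ~3GHz peak.
ratio = implied_clock_ratio(overall_gain=0.50, per_clock_gain=0.20)
baseline_ghz = 3.0 / ratio  # hypothetical A73 clock implied by the assumption

print(f"clock ratio: {ratio:.2f}")
print(f"implied A73 baseline: {baseline_ghz:.1f} GHz")
```

Under that assumption the A75 would need to run about 25% faster than the comparison part, which is what a move from roughly 2.4GHz to 3GHz would provide.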

The A55 is a replacement for the A53, which has been around longer. The design is similar, but better branch prediction, a new cache design, and support for 16 8-bit integer operations per cycle (or eight 16-bit floating-point operations) result in up to twice the performance on the same process and frequency–or about 30% lower power for the same performance. ARM expects the A55 to be used on its own in entry-level and mid-range phones, or in a big.LITTLE configuration with the A75 in high-end smartphones and a wide range of other products.
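The two per-cycle figures are consistent with a single 128-bit vector datapath split into narrower lanes. The width is an inference from the numbers rather than something ARM stated here, and the helper below is only an illustrative sketch of that arithmetic.

```python
def lanes(vector_bits: int, element_bits: int) -> int:
    """Number of elements of a given width that fit in one vector register."""
    return vector_bits // element_bits


VECTOR_BITS = 128  # assumption: width inferred from the quoted per-cycle figures

int8_lanes = lanes(VECTOR_BITS, 8)    # 8-bit integer elements
fp16_lanes = lanes(VECTOR_BITS, 16)   # 16-bit floating-point elements

print(f"8-bit integer lanes: {int8_lanes}")
print(f"16-bit floating-point lanes: {fp16_lanes}")
```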

Both the A75 and the A55 support ARM’s latest instruction set, ARMv8.2, which includes an enhanced memory model for 32- and 64-bit operation, half-precision floating-point data processing, RAS (reliability, availability, serviceability) features for enterprise applications, and the new Scalable Vector Extension for high-performance computing. They are also ARM’s first processors to support its recently announced Dynamiq clustering technology, which supports up to eight CPUs per cluster with a new unified L3 cache in a Dynamiq Shared Unit (DSU) shared across all cores. More interestingly, the cluster can contain any combination of CPUs (with different power, frequency and area) with independent supply voltage and power rails for individual cores or groups of cores.

For phones, that means customers can use a variety of combinations, including four Big A75 cores and four Little A55 cores, eight A55 cores, or even a single A75 and seven Little A55 cores–and get much better performance than current solutions in about the same power and area. The increased performance and flexibility also mean the platform is suitable for a wide variety of other devices, including laptops running Windows 10, Chrome OS or something else. Nayampally said that some countries (read: China) have expressed a lot of interest in building their own hardware and software. ARM is also emphasizing its potential in servers using multiple A75 clusters and its CoreLink coherent mesh network. “It’s very attractive for network infrastructure, [and] it’s very attractive for the data center,” Nayampally said.
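To make the cluster options concrete, the sketch below checks core mixes against the only constraint the announcement spells out: at most eight CPUs per Dynamiq cluster, in any combination. `ClusterConfig` and `MAX_CPUS_PER_CLUSTER` are hypothetical names for illustration, not part of any ARM tool, and real designs may face additional limits not covered here.

```python
from dataclasses import dataclass

MAX_CPUS_PER_CLUSTER = 8  # limit quoted in the announcement


@dataclass(frozen=True)
class ClusterConfig:
    big: int     # number of Cortex-A75 cores
    little: int  # number of Cortex-A55 cores

    def is_valid(self) -> bool:
        """True if the mix has at least one core and fits in one cluster."""
        total = self.big + self.little
        return self.big >= 0 and self.little >= 0 and 1 <= total <= MAX_CPUS_PER_CLUSTER


# The three phone configurations mentioned in the article.
examples = [ClusterConfig(4, 4), ClusterConfig(0, 8), ClusterConfig(1, 7)]
for cfg in examples:
    print(cfg, "valid" if cfg.is_valid() else "invalid")

# Every big/little mix allowed under the stated limit alone.
all_mixes = [
    ClusterConfig(b, l)
    for b in range(MAX_CPUS_PER_CLUSTER + 1)
    for l in range(MAX_CPUS_PER_CLUSTER + 1)
    if ClusterConfig(b, l).is_valid()
]
print(len(all_mixes), "possible mixes")
```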

Along with the new CPU designs, ARM announced the next version of its Bifrost GPU family, the Mali-G72, which it said delivers 40% better performance in a smaller area and power budget. The company said that the casual gaming market is shifting, especially in China, to more photorealistic games on mobile, such as Digital Legends’ Afterpulse first-person shooter. The Mali-G72 has performance and efficiency optimizations that reduce bandwidth and make these kinds of games feasible. VR ups the ante further because the system needs to render two images (one for each eye), and the G72 includes several technologies that reduce overhead and increase quality–including 8x or 16x Multi-Sample Anti-Aliasing–for mobile VR.

The biggest trend, however, is artificial intelligence, and ARM devoted much of today’s press conference to new hardware and software features for machine learning. To be clear, ARM isn’t talking about training deep learning models, where much larger and more power-hungry processors such as Nvidia’s Tesla GPUs and Google’s latest Tensor Processing Unit dominate. In fact, Nayampally said an accelerator isn’t even on the roadmap. Rather, ARM is focused on SoCs that can run these models (inferencing) at the edge. This is especially important in applications where low latency is critical, such as self-driving vehicles.

The recently released ARM Compute Library is a set of low-level software functions for running AI workloads such as image processing, computer vision and machine learning on Cortex-A CPUs and Midgard and Bifrost GPUs. ARM said the library will increase inferencing performance by 10 to 15 times on the CPU alone. The Mali-G72 GPU includes math optimizations and larger caches that deliver 17% better performance than the current G71 on the GEMM (General Matrix to Matrix Multiplication) operations that make up the bulk of neural network computation. Overall, ARM is promising that the combination of the new cores, the Dynamiq cluster architecture and memory hierarchy, and software will deliver a 50x increase in performance running machine-learning algorithms over the next three to five years.
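For readers unfamiliar with GEMM, the sketch below shows the operation in its standard form, C = alpha * A * B + beta * C, and how a fully connected neural network layer maps onto it. This is plain, unoptimized Python for illustration only; it is not the ARM Compute Library's API, and the sample inputs, weights and bias are made up.

```python
def gemm(alpha, a, b, beta, c):
    """Return alpha * (a @ b) + beta * c for matrices stored as lists of rows."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    assert len(c) == m and all(len(row) == n for row in c), "c must be m x n"

    # Start from the scaled accumulator, then add the scaled product.
    out = [[beta * c[i][j] for j in range(n)] for i in range(m)]
    for i in range(m):
        for p in range(k):
            a_ip = alpha * a[i][p]
            for j in range(n):
                out[i][j] += a_ip * b[p][j]
    return out


# A fully connected layer as one GEMM call: inputs x weights + bias.
inputs = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]        # 2 samples, 3 features
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]     # 3 features, 2 outputs
bias = [[0.5, -0.5], [0.5, -0.5]]                  # bias repeated per sample

activations = gemm(1.0, inputs, weights, 1.0, bias)
print(activations)
```

The three nested loops are where nearly all the arithmetic happens, which is why wider math units and larger caches translate directly into faster inferencing.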

In a departure, ARM did not announce the number of licensees or mention any of its lead partners for the A75/A55 and Mali-G72. The company explained that its customers increasingly prefer to make their own announcements. The new platform will undoubtedly result in more powerful phones. But it’s clear that ARM is aiming beyond mobile and targeting a much broader range of applications with this generation of processors, and it will be interesting to see the kinds of products that come to market starting later this year.