Tech giants are once again being urged to do more to tackle the spread of online extremism on their platforms. Leaders of the UK, France and Italy are taking time out at a UN summit today to meet with Google, Facebook and Microsoft.

This follows an agreement in May for G7 nations to take joint action on online extremism.

The possibility of fining social media firms which fail to meet collective targets for illegal content takedowns has also been floated by the heads of state. Earlier this year the German government proposed a regime of fines for social media firms that fail to meet local takedown targets for illegal content.

The Guardian reports today that the UK government would like the time it takes to remove online extremist content to be cut drastically: from an average of 36 hours to just two.

That’s a considerably narrower timeframe than the 24-hour window for such takedowns agreed under a voluntary European Commission code of conduct, which the four major social media platforms signed up to in 2016.

Now the group of European leaders, led by UK Prime Minister Theresa May, apparently wants to go even further by radically squeezing the window before content must be taken down. They also want to see evidence of progress from the tech giants in a month’s time, when their interior ministers meet at the G7.

According to UK Home Office analysis, ISIS shared 27,000 links to extremist content in the first five months of 2017 and, once shared, the material remained available online for an average of 36 hours. That, says May, is not good enough.

Ultimately the government wants companies to develop technology to spot extremist material early and prevent it being shared in the first place — something UK Home Secretary Amber Rudd called for earlier this year.

In June, meanwhile, the tech industry banded together to present a joint front on this issue under the banner of the Global Internet Forum to Counter Terrorism (GIFCT), which the companies said would collaborate on engineering solutions and share content classification techniques and effective reporting methods for users.

The initiative also includes sharing counterspeech practices as another string for them to publicly pluck to respond to pressure to do more to eject terrorist propaganda from their platforms.

In response to the latest calls from European leaders to enhance online extremism identification and takedown systems, a GIFCT spokesperson provided the following responsibility-distributing statement:

Combatting terrorism requires responses from government, civil society and the private sector, often working collaboratively. The Global Internet Forum to Counter Terrorism was founded to help do just this and we’ve made strides in the past year through initiatives like the Shared Industry Hash Database. We’ll continue our efforts in the years to come, focusing on new technologies, in-depth research, and best practices. Together, we are committed to doing everything in our power to ensure that our platforms are not used to distribute terrorist content.
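The “Shared Industry Hash Database” mentioned in the statement rests on a fairly simple idea: platforms pool fingerprints of media already identified as terrorist content, so each can check new uploads against the shared list without exchanging the files themselves. The Python below is a minimal sketch of that idea under stated assumptions. The names `shared_hashes`, `contribute` and `allow_upload` are hypothetical, and it uses exact SHA-256 matching for simplicity, whereas production systems typically rely on perceptual hashes designed to survive resizing and re-encoding.

```python
import hashlib

# Hypothetical pooled list of hex digests contributed by participating platforms.
# Only fingerprints are shared; the flagged media itself stays with each platform.
shared_hashes: set[str] = set()


def fingerprint(data: bytes) -> str:
    """Exact cryptographic fingerprint: any single-byte change gives a new digest."""
    return hashlib.sha256(data).hexdigest()


def contribute(flagged_media: bytes) -> None:
    """A platform adds the fingerprint of content its reviewers have removed."""
    shared_hashes.add(fingerprint(flagged_media))


def allow_upload(data: bytes) -> bool:
    """Check an upload against the pooled list before it is published."""
    return fingerprint(data) not in shared_hashes


if __name__ == "__main__":
    flagged = b"placeholder bytes standing in for a removed propaganda video"
    contribute(flagged)
    print(allow_upload(flagged))            # False: known content is blocked at upload
    print(allow_upload(flagged + b"\x00"))  # True: a trivially altered copy slips through
```

The second check shows why exact hashing alone is weak: appending a single byte yields a different digest, which is one reason real deployments favor perceptual hashing, and why human review still matters for content no hash list has seen.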

Monika Bickert, Facebook’s director of global policy management, is also speaking at today’s meeting with European leaders — and she’s slated to talk up the company’s investments in AI technology, while also emphasizing that the problem cannot be fixed by tech alone.

“Already, AI has begun to help us identify terrorist imagery at the time of upload so we can stop the upload, understand text-based signals for terrorist support, remove terrorist clusters and related content, and detect new accounts created by repeat offenders,” Bickert was expected to say today.

“AI has tremendous potential in all these areas — but there still remain those instances where human oversight is necessary. AI can spot a terrorist’s insignia or flag, but has a hard time interpreting a poster’s intent. That’s why we have thousands of reviewers, who are native speakers in dozens of languages, reviewing content — including content that might be related to terrorism — to make sure we get it right.”

In May, following various media reports about moderation failures on a range of issues (not just online extremism), Facebook announced it would expand the number of human reviewers it employs, adding 3,000 to the 4,500 people it already has working in this capacity. It’s not clear, though, over what time period those additional hires were to be brought in.

But the vast size of Facebook’s platform, which passed two billion users in June, means that even a team of 7,500 people, aided by the best AI tools money can build, surely has a forlorn hope of keeping on top of the sheer volume of user-generated content distributed on its platform every day.

And even if Facebook is prioritizing takedowns of extremist content over moderating other types of potentially problematic content, it still faces a staggeringly large haystack to sift through, with only a tiny team of overworked (yet, says Bickert, essential) human reviewers attached to the task, and at a time when political thumbscrews are being turned on tech giants to get much better at nixing online extremism, and fast.

If Facebook isn’t able to deliver the hoped-for speed improvements in a month’s time, it could face awkward political questions about why it can’t raise its standards, and perhaps invite greater scrutiny of how small its human moderation team is compared with the vast size of the task.

Yesterday, ahead of meeting the European leaders, Twitter released its latest Transparency Report covering government requests for content takedowns. In it, the company claimed some big wins from using its own in-house technology to automatically identify pro-terrorism accounts, noting that it had been able to suspend the majority of these accounts (~75%) before they could tweet.

The company, which has only around 328M monthly active users (and inevitably a far smaller volume of content to review than Facebook), revealed it had closed nearly 300,000 pro-terror accounts in the past six months, and said government reports of terrorism accounts had dropped 80 per cent since its prior report.

Twitter argues that terrorists have shifted much of their propaganda efforts elsewhere, pointing to messaging platform Telegram as the new tool of choice for ISIS extremists. This view is backed up by Charlie Winter, senior research fellow at the International Centre for the Study of Radicalisation and Political Violence (ICSR).

Winter tells TechCrunch: “Now, there’s no two ways about it — Telegram is first and foremost the centre of gravity online for the Islamic State, and other Salafi jihadist groups. Places like Twitter, YouTube and Facebook are all way more inhospitable than they’ve ever been to online extremism.


“Yes, there are still pockets of extremists using these platforms, but they are, in the grand scheme of things, and certainly compared to 2014/2015, vanishingly small.”

Discussing how Telegram is responding to extremist propaganda, he says: “I don’t think they’re doing nothing. But I think they could do more… There’s a whole set of channels which are very easily identifiable as the key nodes of Islamic State propaganda dissemination, that are really quite resilient on Telegram. And I think that it wouldn’t be hard to identify them, and it wouldn’t be hard to remove them.

“But were Telegram to do that the Islamic State would simply find another platform to use instead. So it’s only ever going to be a temporary measure. It’s only ever going to be reactive. And I think maybe we need to think a little bit more outside the box than just taking the channels down.”

“I don’t think it’s a complete waste of time [for the government to still be pressurizing tech giants over extremism],” Winter adds. “I think that it’s really important to have these big ISPs playing a really proactive role. But I do feel like policy or at least rhetoric is stuck in 2014/2015 when platforms like Twitter were playing a much more important role for groups like the Islamic State.”

Indeed, Twitter’s latest Transparency Report shows that the vast majority of recent government reports about its content involve complaints of “abusive behavior”. That suggests that, as Twitter shrinks its terrorism problem, another long-standing issue (dealing with abuse on its platform) is rapidly zooming into view as the next political hot potato for it to grapple with.

Meanwhile, Telegram is an altogether smaller player than the social giants most frequently called out by politicians over online extremism, though by no means a tiddler: it announced in February 2016 that it had passed 100M monthly users.

But not having a large and fixed corporate presence in any country makes the nomadic team behind the platform — led by Russian exile Pavel Durov, its co-founder — an altogether harder target for politicians to wring concessions from. Telegram is simply not going to turn up to a meeting with political leaders.

That said, the company has shown itself responsive to public criticism about extremist use of its platform. In the wake of the 2015 Paris terror attacks it announced it had closed a swathe of public channels that had been used to broadcast ISIS-related content.

It has apparently continued to purge thousands of ISIS channels since then, claiming to have nixed more than 8,800 this August alone, for example. Even so, this level of effort does not appear to be enough to persuade ISIS that it needs to switch to another app with lower ‘suspension friction’ to keep spreading its propaganda. So it looks like Telegram needs to step up its efforts if it wants to shed the dubious honor of being known as the go-to platform for ISIS and other extremists.

“Telegram is important to the Islamic State for a great many different reasons, and to other Salafi jihadist groups too, like Al-Qaeda or Harakat Ahrar ash-Sham al-Islamiyya in Syria,” says Winter. “It uses it first and foremost… for disseminating propaganda, so whether that’s videos, photo reports, newspaper, magazine and all that. It also uses it on a more communal basis, for encouraging interaction between supporters.

“And there’s a whole other layer of it that I don’t think anyone sees really which I’m talking about in a hypothetical sense because I think it would be very difficult to penetrate where the groups will be using it for more operational things. But again, without being in an intelligence service, I don’t think it’s possible to penetrate that part of Telegram.

“And there’s also evidence to suggest that the Islamic State actually migrates onto even more heavily encrypted platforms for the really secure stuff.”

Responding to the expert view that Telegram has become the “platform of choice for the Islamic State”, Durov tells TechCrunch: “We are taking down thousands of terrorism-related channels monthly and are constantly raising the efficiency of this process. We are also open to ideas on how to improve it further, if… the ICSR has specific suggestions.”

As Winter hints, there is also terrorist chatter, of concern to governments, that takes place out of public view on encrypted communication channels. This is another area where the UK government in particular has ramped up political pressure on tech giants in recent years. (For now, European lawmakers generally appear more hesitant to push for a decryption law, while the U.S. has seen attempts to legislate but nothing has yet come to pass on that front.)

End-to-end encryption still under pressure

A Sky News report yesterday, citing UK government sources, claimed that Facebook-owned WhatsApp had been asked by British officials this summer to come up with technical solutions that would allow them to access the content of messages on its end-to-end encrypted platform to further government agencies’ counterterrorism investigations, effectively asking the firm to build a backdoor into its crypto.

This is something the UK Home Secretary, Amber Rudd, has explicitly said is the government’s intention. Speaking in June she said it wanted big Internet firms to work with it to limit their use of e2e encryption. And one of those big Internet firms was presumably WhatsApp.

WhatsApp apparently rejected the backdoor demand put to it by the government this summer, according to Sky’s report.

We reached out to the messaging giant to confirm or deny Sky’s report but a WhatsApp spokesman did not provide a direct response or any statement. Instead he pointed us to existing information on the company’s website — including an FAQ in which it states: “WhatsApp has no ability to see the content of messages or listen to calls on WhatsApp. That’s because the encryption and decryption of messages sent on WhatsApp occurs entirely on your device.”

He also flagged up a note on its website for law enforcement which details the information it can provide and the circumstances in which it would do so: “A valid subpoena issued in connection with an official criminal investigation is required to compel the disclosure of basic subscriber records (defined in 18 U.S.C. Section 2703(c)(2)), which may include (if available): name, service start date, last seen date, IP address, and email address.”

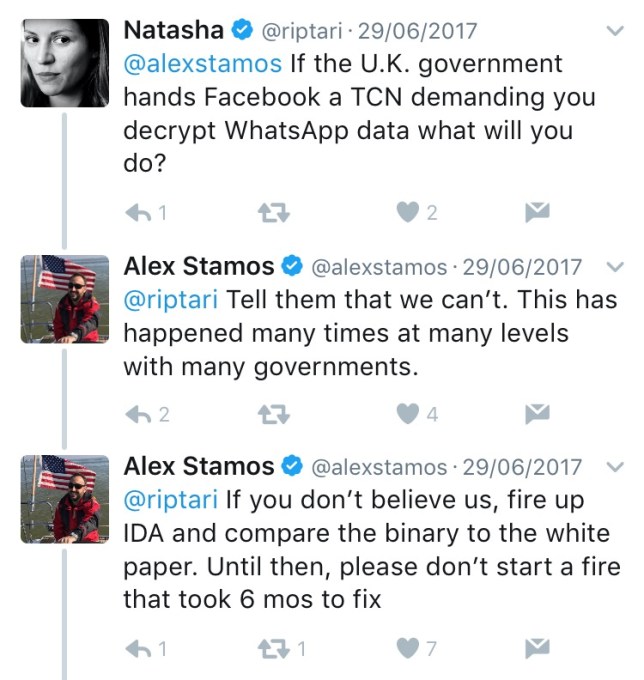

Facebook CSO Alex Stamos also previously told us the company would refuse to comply if the UK government handed it a so-called Technical Capability Notice (TCN) asking for decrypted data — on the grounds that its use of e2e encryption means it does not hold encryption keys and thus cannot provide decrypted data — though the wider question is really how the UK government might then respond to such a corporate refusal to comply with UK law.

Properly implemented e2e encryption ensures that the operators of a messaging platform cannot access the contents of the missives moving around the system. However, e2e encryption can still leak metadata, so intelligence on who is talking to whom, and when, can still be passed by companies like WhatsApp to government agencies.
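To make the distinction between content and metadata concrete, here is a toy Python sketch of a relay server forwarding end-to-end encrypted messages. It is an illustration under loud assumptions, not a description of how WhatsApp works: the `Relay` and `Envelope` classes and the `xor_bytes` helper are hypothetical, and a one-time pad shared in advance by the two endpoints stands in for the key-exchange and cipher machinery a real messenger uses. The point is what the server can and cannot record.

```python
import secrets
import time
from dataclasses import dataclass, field


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """One-time-pad step: XOR message and pad byte by byte (equal lengths assumed)."""
    return bytes(x ^ y for x, y in zip(a, b))


@dataclass
class Envelope:
    sender: str
    recipient: str
    sent_at: float
    ciphertext: bytes


@dataclass
class Relay:
    """Hypothetical message server: forwards envelopes, never holds any keys."""
    metadata_log: list[tuple[str, str, float, int]] = field(default_factory=list)
    inboxes: dict[str, list[Envelope]] = field(default_factory=dict)

    def forward(self, env: Envelope) -> None:
        # The relay can log who talked to whom, when, and how much...
        self.metadata_log.append((env.sender, env.recipient, env.sent_at, len(env.ciphertext)))
        # ...but it only ever stores ciphertext it has no key to read.
        self.inboxes.setdefault(env.recipient, []).append(env)


if __name__ == "__main__":
    message = b"meet at noon"
    # Assumption: the pad is truly random, as long as the message, shared only by
    # the two endpoints and never reused; otherwise this toy scheme is insecure.
    pad = secrets.token_bytes(len(message))

    relay = Relay()
    relay.forward(Envelope("alice", "bob", time.time(), xor_bytes(message, pad)))

    received = relay.inboxes["bob"][0]
    print(xor_bytes(received.ciphertext, pad))  # b'meet at noon' (only the pad holder can do this)
    print(relay.metadata_log[0][:2])            # ('alice', 'bob')
```

Even though the relay never sees the pad, its `metadata_log` still holds sender, recipient, timestamp and message size: the kind of record a provider could hand over under a warrant without being able to decrypt anything.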

Facebook has confirmed it provides WhatsApp metadata to government agencies when served a valid warrant (as well as sharing metadata between WhatsApp and its other business units for its own commercial and ad-targeting purposes).

Talking up the counter-terror potential of sharing metadata appears to be the company’s current strategy for trying to steer the UK government away from demands it backdoor WhatsApp’s encryption — with Facebook’s Sheryl Sandberg arguing in July that metadata can help inform governments about terrorist activity.

In the UK, successive governments have been ramping up political pressure on the use of e2e encryption for years, with politicians proudly declaring themselves uncomfortable with the rising use of the tech. Meanwhile, domestic surveillance legislation passed at the end of last year has been widely interpreted as giving security agencies powers to require companies not to use e2e encryption and/or to require communications service providers to build in backdoors, so they can provide access to decrypted data when handed a state warrant. So, on the surface, there’s a legal threat to the continued viability of e2e encryption in the UK.

However, it is unclear how the government could enforce decryption on powerful tech giants, which are mostly headquartered overseas, have millions of engaged local users and sell e2e encryption as a core part of their proposition. Even with the legal power to demand it, officials would still be asking for legible data from the owners of systems designed not to let third parties read that data.

One crypto expert we contacted for comment on the conundrum, who cannot be identified because they were not authorized to speak to the press by their employer, neatly sums up the problem for politicians squaring up to tech giants using e2e encryption: “They could close you down but do they want to? If you aren’t keeping records, you can’t turn them over.”

It’s really not clear how long the political compass will keep swinging around and pointing at tech firms to accuse them of building systems that are impeding governments’ counterterrorism efforts — whether that’s related to the spread of extremist propaganda online, or to a narrower consideration like providing warranted access to encrypted messages.

As noted above, the UK government legislated last year to enshrine expansive and intrusive investigatory powers in a new framework, called the Investigatory Powers Act — which includes the ability to collect digital information in bulk and for spy agencies to maintain vast databases of personal information on citizens who are not (yet) suspected of any wrongdoing in order that they can sift these records when they choose. (Powers that are incidentally being challenged under European human rights law.)

And with such powers on its statute books, you’d hope there would be more pressure on UK politicians to take responsibility for the state’s own intelligence failures, rather than seeking to scapegoat technologies such as encryption. But the crypto wars are apparently, sad to say, a never-ending story.

On extremist propaganda, the co-ordinated political push by European leaders to get tech platforms to take more responsibility for the user-generated content they are freely distributing, liberally monetizing and algorithmically amplifying does at least have more substance to it, even if, ultimately, it’s likely to be just as futile a strategy for fixing the underlying problem.

Because even if you could wave a magic wand and make all online extremist propaganda vanish, you wouldn’t have fixed the core problem of why terrorist ideologies exist, nor removed the pull that those extremist ideas can exert on certain individuals. It’s just attacking the symptom of a problem rather than interrogating its root causes.

The ICSR’s Winter is generally downbeat on how the current political strategy for tackling online extremism is focusing so much attention on restricting access to content.

“[UK PM] Theresa May is always talking about removing the safe spaces and shutting down part of the Internet where terrorists exchange instructions and propaganda and that sort of stuff, and I just feel that’s a Sisyphean task,” he tells TechCrunch. “Maybe you do get it to work on any one platform; they’re just going to go onto a different one and you’ll have exactly the same sort of problem all over again.

“I think they are publicly making too much of a thing out of restricting access to content. And I think the role that is being described to the public that propaganda takes is very, very different to the one that it actually has. It’s much more nuanced, and much more complex than simply something which is used to ‘radicalize and recruit people’. It’s much, much more than that.

“And we’re clearly not going to get to that kind of debate in a mainstream media discourse because no one has the time to hear about all the nuances and complexities of propaganda but I do think that the government puts too much emphasis on the online space — in a manner that is often devoid of nuance and I don’t think that is necessarily the most constructive way to go about this.”