Google is hard at work behind the scenes improving one of its most ambitious technical projects ever – Street View. The company previously revealed that it’s been rolling out improved camera cars with better photographic equipment to improve the quality and resolution of the images that make up its street-level views in Google Maps, but it’s also fixing the sometimes messy stitching that occurs when it combines pictures from its multi-camera “rosettes.”

These so-called rosettes are those camera balls you see sitting atop the colorful Google Street View cars – they contain 15 independent camera sensors, each with its own lens, which are constantly taking images as the cars shuttle around streets. Software handles stitching those images together so that you can use Street View to virtually ‘step into’ any scene from anywhere the cars operate to get a frozen-in-time glimpse at what that spot would look like from a pedestrian’s perspective.

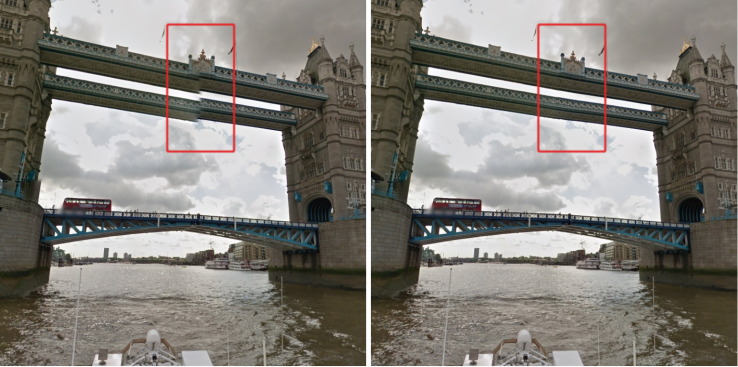

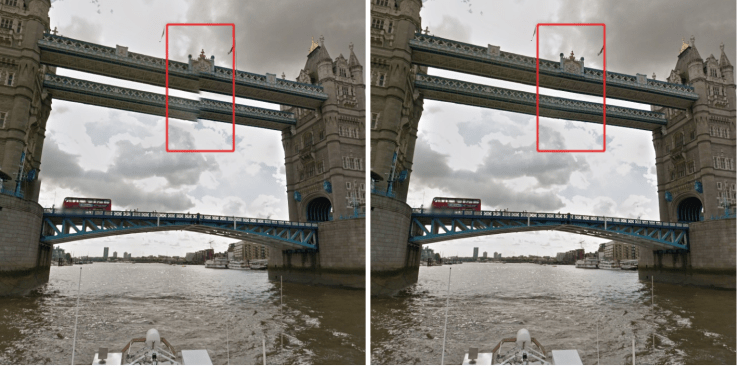

Or, almost what it would look like. One thing you’ve probably noticed if you’ve spent any time in Street View is that the stitch points, the places where the multiple images captured by the rosette’s 15 cameras meet, are often painfully obvious. This problem isn’t unique to Google; it appears in a lot of panorama image stitching, in smartphones, consumer cameras, VR video capture and more.

Google still manages to be pretty good at making up for these deficiencies such that you aren’t often terribly aware of the overlap points between images, but it’s also now rolling out a new algorithm that makes things even more, well, seamless. Basically, the process uses any overlapping areas to locate pixels that correspond directly to one another in each image, and then it simplifies that data set, eliminating any corresponding points where there isn’t enough visual structural data (like a building edge, for instance) to accurately calculate the flow from one image to the other.
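To make the filtering step concrete, here is a minimal Python sketch of the idea, not Google’s actual implementation. It assumes grayscale images stored as plain lists of rows and a list of candidate point pairs that has already been found in the overlap. The names `structure_score` and `keep_structured_matches`, the gradient-based score and the `min_score` threshold are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

Image = List[List[float]]          # grayscale image as rows of intensities
Match = Tuple[int, int, int, int]  # (x_left, y_left, x_right, y_right)


def structure_score(img: Image, x: int, y: int, radius: int = 1) -> float:
    """Sum of squared intensity gradients in a small window around (x, y).

    Flat regions (sky, blank walls) score near zero; edges and corners
    score high. This is an assumed stand-in for "visual structure".
    """
    height, width = len(img), len(img[0])
    total = 0.0
    # Stay one pixel inside the border so central differences are defined.
    for yy in range(max(1, y - radius), min(height - 1, y + radius + 1)):
        for xx in range(max(1, x - radius), min(width - 1, x + radius + 1)):
            gx = img[yy][xx + 1] - img[yy][xx - 1]
            gy = img[yy + 1][xx] - img[yy - 1][xx]
            total += gx * gx + gy * gy
    return total


def keep_structured_matches(
    left: Image, right: Image, matches: List[Match], min_score: float = 1.0
) -> List[Match]:
    """Drop correspondences that sit on featureless pixels in either image."""
    kept = []
    for xl, yl, xr, yr in matches:
        if (structure_score(left, xl, yl) >= min_score
                and structure_score(right, xr, yr) >= min_score):
            kept.append((xl, yl, xr, yr))
    return kept


# Toy overlap region: dark on the left, bright on the right (a vertical edge).
overlap = [[0.0] * 6 + [10.0] * 4 for _ in range(6)]
candidates = [(6, 3, 6, 3), (2, 3, 2, 3)]  # one on the edge, one on a flat area
reliable = keep_structured_matches(overlap, overlap, candidates)
```

In this toy case only the pair sitting on the edge survives, which mirrors the article’s point: matches in featureless areas carry too little information to estimate how one image flows into the other.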

The challenge is that Google’s algorithm has to do this while keeping the rest of the image looking ‘normal,’ or appealing to our natural human sensibilities. You can tell very quickly when something in an image doesn’t look quite right, even if you can’t put your finger on why, and sometimes warping a picture to achieve a desired effect in one area can have a dramatic effect on other elements in the image.

Google’s technique specifically avoids introducing new visual issues while selectively warping the crossover areas of stitched images, to produce smooth, continuous panoramas that still look accurate across the frame. It produces some amazing results, as you can see in the video above and the gallery below.
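One simple way to picture “selective” warping is a correction that is strongest at the seam and fades to nothing farther into the image. The Python sketch below illustrates only that intuition. The linear falloff, the single vertical shift and the names `seam_weight` and `column_shifts` are assumptions made for this example and are not taken from Google’s method.

```python
from typing import List


def seam_weight(x: int, seam_x: int, falloff: int) -> float:
    """1.0 at the seam column, fading linearly to 0.0 at `falloff` columns away."""
    return max(0.0, 1.0 - abs(x - seam_x) / falloff)


def column_shifts(width: int, seam_x: int, seam_shift: float, falloff: int) -> List[float]:
    """Vertical shift (in pixels) to apply to each column of one image.

    `seam_shift` is the misalignment measured at the seam; columns farther
    than `falloff` from the seam are left untouched.
    """
    return [seam_shift * seam_weight(x, seam_x, falloff) for x in range(width)]


# A 10-column image whose seam at column 8 is misaligned by 4 pixels.
shifts = column_shifts(width=10, seam_x=8, seam_shift=4.0, falloff=4)
```

Here the full 4-pixel correction applies only at the seam, shrinks over the next few columns and is zero for the first five columns, so most of the picture keeps its original geometry.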

Google is using this to restitch panoramas right now, but there are obviously a lot of panoramas to restitch in the whole of Street View, so don’t be surprised if you still find some awkward transitions out there. One day, though, we could virtually tour the world without any odd imaging artifacts.