Google has confirmed a major policy shift in how it approaches extremist content on YouTube. A spokeswoman told us it has broadened its policy for taking down extremist content: it is not just removing videos that directly preach hate or seek to incite violence but also removing other videos of named terrorists, unless the content is journalistic or educational in nature, such as news reports and documentaries.

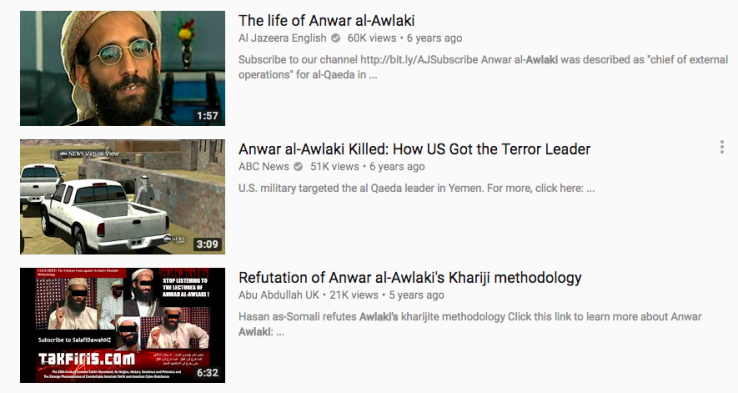

The change was reported earlier by Reuters, following a report by the New York Times on Monday saying YouTube had drastically reduced content showing sermons by the jihadist cleric Anwar al-Awlaki, eliminating videos in which the radical cleric is not directly preaching hate but talking on various, ostensibly non-violent topics.

Al-Awlaki was killed in a US drone strike six years ago but is said to have remained the leading English-language jihadist recruiter because of the extensive and easily accessible digital legacy of his sermons.

In a phone call with TechCrunch, a YouTube spokeswoman confirmed that around 50,000 videos of al-Awlaki’s lectures have been removed at this point.

There is still al-Awlaki content on YouTube, and the spokeswoman stressed there will never be zero videos returned for a search for his name. But she said the aim is to remove content created by known extremists and disincentivize others from reuploading the same videos.

Enacting the policy will be an ongoing process, she added.

She said the policy change has come about as a result of YouTube working much more closely with a network of NGO experts who work in this space and participate in its trusted flagger program for community content policing. These experts have advised it that even sermons that do not ostensibly preach hate can be part of a wider narrative used by jihadi extremists to radicalize and recruit.

This year YouTube and other user-generated content platforms have also come under increasing political pressure to take a tougher stance on extremist content. YouTube has also faced an advertiser backlash after ads were found being displayed alongside extremist content.

In June the company announced a series of measures aimed at expanding its efforts to combat jihadi propaganda — including expanding its use of AI tech to automatically identify terrorist content; adding 50 “expert NGOs” to its trusted flagger program; and growing counter-radicalization efforts — such as returning content which deconstructs and debunks jihadist views when a user searches for certain extremist trigger words.

However, at that time, YouTube rowed back from taking down non-violent extremist content. Instead it said it would display interstitial warnings on videos that contain “inflammatory religious or supremacist content”, and also remove the ability of uploaders to monetize this type of content.

“We think this strikes the right balance between free expression and access to information without promoting extremely offensive viewpoints,” said Google SVP and general counsel, Kent Walker, at the time.

Evidently it has now decided it was not, in fact, striking the right balance by continuing to host and provide access to sermons made by extremists, and has redrawn its policy line to shrink access to anything made by known terrorists.

According to YouTube’s spokeswoman, it’s working off of government lists of named terrorists and foreign terrorist organizations to identify individuals to whom the wider takedown policy will apply.

She confirmed that all content currently removed under the new wider policy pertains to al-Awlaki. But the idea is for this to expand to takedowns of other non-violent videos from other listed extremists.

It’s not clear whether these lists are public at this point. The spokeswoman indicated it’s YouTube’s expectation they will be public, and said the company will communicate with government departments on that transparency point.

She said YouTube is relying on its existing moderating teams to enact the expanded policy, using a mix of machine learning technology to help identify content and human review to understand the context.

She added that YouTube has been thinking about adapting its policies for extremist content for more than a year — despite avoiding taking this step in June.

She also sought to play down the idea that the policy shift is a response to pressure from governments to crack down on online extremism, saying rather that it has come about as a result of YouTube engaging with and listening to experts.