As part of its rather bizarre news dump before its flagship Build developer conference next week, Microsoft today announced a slew of new pre-built machine learning models for its Cognitive Services platform. These include an API for building personalization features, a form recognizer for automating data entry, a handwriting recognition API and an enhanced speech recognition service that focuses on transcribing conversations.

Maybe the most important of these new services is the Personalizer. There are few apps and websites, after all, that aren’t looking to provide their users with personalized features. That’s difficult, in part, because it often involves building models based on data that sits in a variety of silos. With Personalizer, Microsoft is betting on reinforcement learning, a machine learning technique that doesn’t need the kind of labeled training data that supervised learning typically requires. Instead, the reinforcement agent constantly tries to find the best way to achieve a given goal based on what users do. Microsoft argues that it is the first company to offer a service like this. The company has also been testing the service itself on Xbox, where it saw a 40% increase in engagement with its content after implementing it.
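To make the reinforcement learning idea a bit more concrete, here is a minimal sketch of the kind of loop described above: pick something to show, watch what the user does, and adjust. This is a toy epsilon-greedy bandit written for illustration, not Microsoft's Personalizer API or its actual algorithm; the class name, the `rank` and `reward` methods and the `epsilon` parameter are all assumptions made for the example.

```python
import random
from collections import defaultdict


class EpsilonGreedyRanker:
    """Hypothetical toy agent: choose an action, observe a reward, update."""

    def __init__(self, actions, epsilon=0.1, seed=None):
        self.actions = list(actions)
        self.epsilon = epsilon              # share of traffic spent exploring
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)      # (context, action) -> times rewarded
        self.values = defaultdict(float)    # (context, action) -> mean reward

    def rank(self, context):
        """Return the action to show for this context."""
        if self.rng.random() < self.epsilon:
            # Explore: occasionally try something at random.
            return self.rng.choice(self.actions)
        # Exploit: pick the action with the best average reward so far.
        return max(self.actions, key=lambda a: self.values[(context, a)])

    def reward(self, context, action, score):
        """Fold an observed reward (e.g. 1.0 for a click) into the running mean."""
        key = (context, action)
        self.counts[key] += 1
        self.values[key] += (score - self.values[key]) / self.counts[key]


# Usage: no labeled training set, only feedback from what users do.
ranker = EpsilonGreedyRanker(["news", "sports", "games"], epsilon=0.1, seed=42)
shown = ranker.rank("evening")
ranker.reward("evening", shown, 1.0)  # the user engaged with what was shown
```

The point of the sketch is only that the agent learns from rewards tied to user behavior rather than from a pre-labeled dataset. A production service would use far richer context features and a more sophisticated learning policy.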

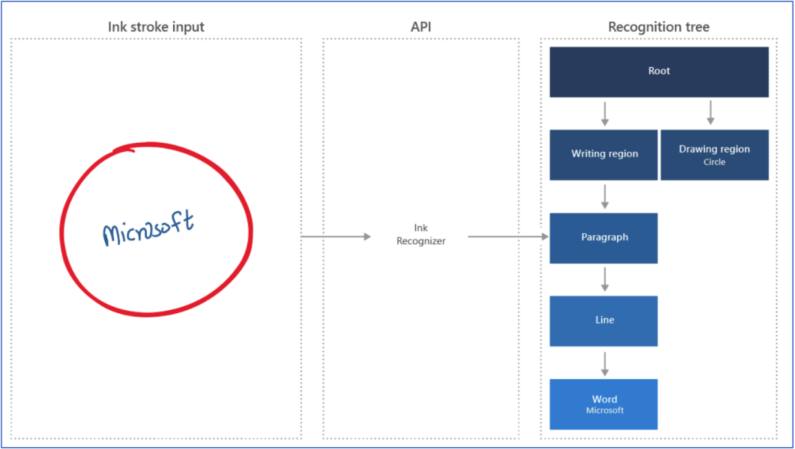

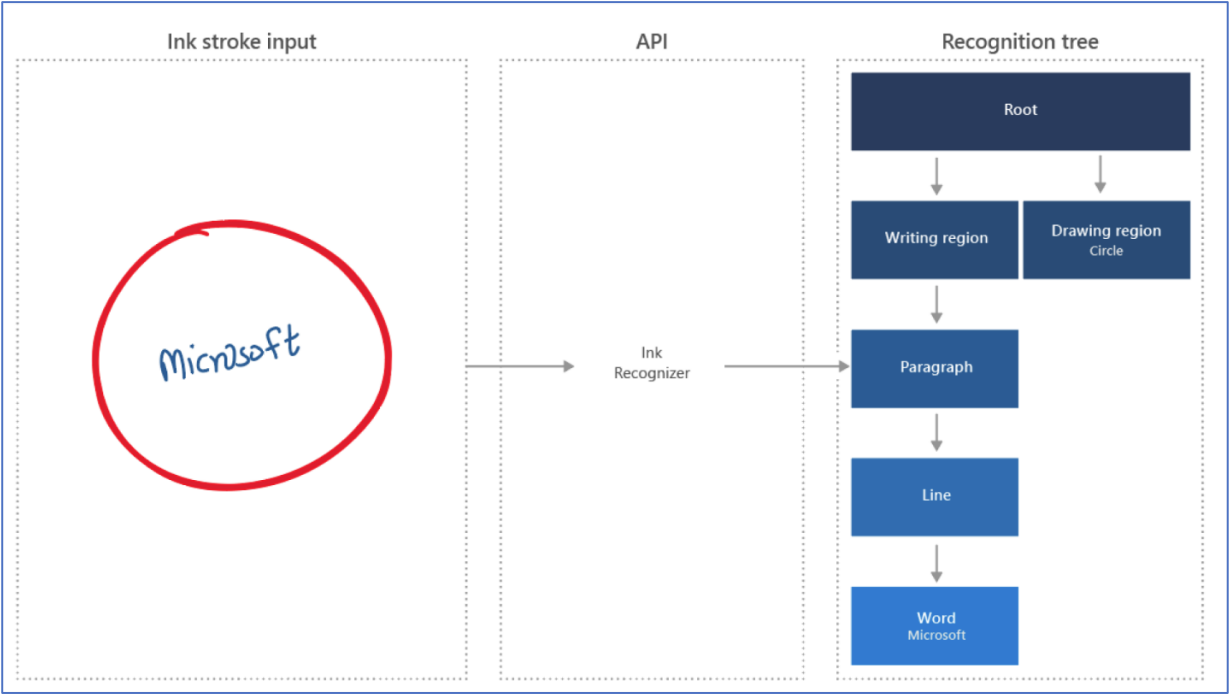

The handwriting recognition API, or Ink Recognizer as it is officially called, can automatically recognize handwriting, common shapes and documents. That’s something Microsoft has long focused on as it developed its Windows 10 inking capabilities, so maybe it’s no surprise that it is now packaging this up as a cognitive service. Indeed, Microsoft Office 365 and Windows already use exactly this service, so we’re talking about a pretty robust system. With this new API, developers can now bring these same capabilities to their own applications, too.

Conversation Transcription does exactly what the name implies: it transcribes conversations. It is part of Microsoft’s existing speech-to-text features in the Cognitive Services lineup. It can label different speakers, transcribe the conversation in real time and even handle crosstalk. It already integrates with Microsoft Teams and other meeting software.

Also new is the Form Recognizer, an API that makes it easier to extract text and data from business forms and documents. This may not sound like a very exciting feature, but it solves a very common problem. The service needs only five samples to understand how to extract data, and users don’t have to do any of the arduous manual labeling that’s often involved in building these systems.

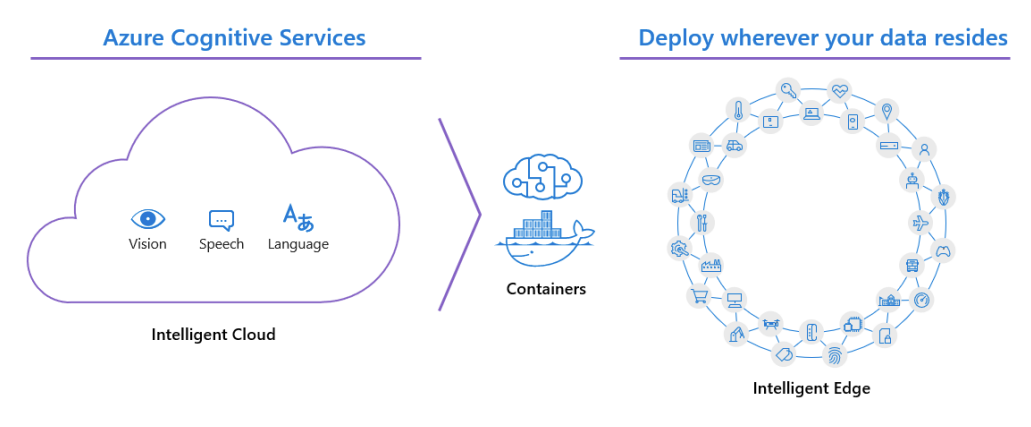

Form Recognizer is also coming to cognitive services containers, which allow developers to take these models outside of Azure and to their edge devices. The same is true for the existing speech-to-text and text-to-speech services, as well as the existing anomaly detector.

In addition, the company today announced that its Neural Text-to-Speech, Computer Vision Read and Text Analytics Named Entity Recognition APIs are now generally available.

Some of these existing services are also getting feature updates. The Neural Text-to-Speech service now supports five voices, while the Computer Vision API can now understand more than 10,000 concepts, scenes and objects, together with 1 million celebrities, compared to 200,000 in a previous version (are there that many celebrities?).