It was not long ago that the world watched World Chess Champion Garry Kasparov lose a decisive match against a supercomputer. IBM’s Deep Blue embodied the state of the art in the late 1990s, when a machine defeating a human world champion at a game as complex as chess was still unheard of.

Fast-forward to today, and not only have supercomputers greatly surpassed Deep Blue in chess, they have managed to achieve superhuman performance in a string of other games, often much more complex than chess, ranging from Go to Dota to classic Atari titles.

Many of these games have been mastered in just the last five years, pointing to a pace of innovation much quicker than that of the two decades prior. Recently, Google’s DeepMind released Agent57, the first agent to outperform the standard human benchmark on all 57 Atari 2600 games.

The class of AI algorithms underlying these feats — deep-reinforcement learning — has demonstrated the ability to learn at very high levels in constrained domains, such as the ones offered by games.

These exploits in gaming have given the research community valuable insights into what deep-reinforcement learning can and cannot do. Running these algorithms has required gargantuan compute power, as well as careful fine-tuning of the neural networks involved, to achieve the performance we’ve seen.

Researchers are pursuing new approaches, such as multi-environment training and the use of language modeling, to enable learning across multiple domains. But it remains an open question whether deep-reinforcement learning takes us closer to the mother lode, artificial general intelligence (AGI), in any extensible way.

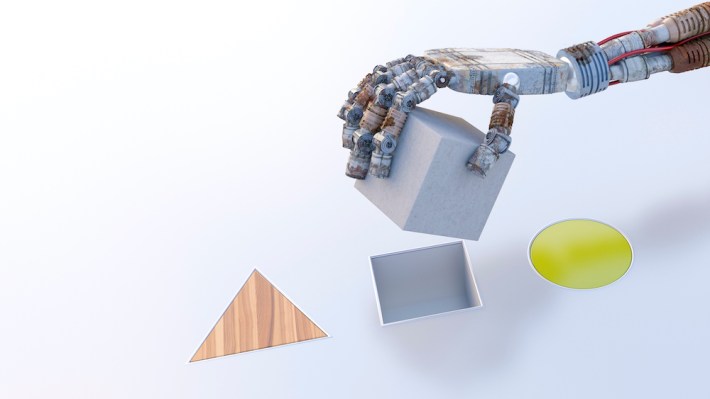

While talk of AGI can quickly get philosophical, deep-reinforcement learning has already shown great performance in constrained environments. That has spurred its use in areas like robotics and healthcare, where problems often come with well-defined spaces and rules to which the techniques can be effectively applied.

In robotics, it has shown promising results in using simulation environments to train robots for the real world. It has also performed well in training real-world robots to perform tasks such as picking objects and walking. In healthcare, it’s being applied to a number of use cases, such as personalized medicine, chronic care management, drug discovery, and resource scheduling and allocation. Other areas seeing applications include natural language processing, computer vision, algorithmic optimization and finance.

The research community is still in the early stages of understanding the full potential of deep-reinforcement learning, but if we are to go by how well it has done in playing games in recent years, it’s likely we’ll be seeing even more interesting breakthroughs in other areas shortly.

So what is deep-reinforcement learning?

If you’ve ever navigated a corn maze, your brain at an abstract level has been using reinforcement learning to help you figure out the lay of the land by trial and error, ultimately leading you to find a way out.
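
To make the trial-and-error idea concrete, here is a minimal sketch of plain (tabular) reinforcement learning, specifically Q-learning, finding its way through a tiny maze. It is an illustration only: the maze layout, the reward values (a small penalty for every step, a bonus at the exit) and the settings `alpha`, `gamma` and `epsilon` are all assumptions chosen for this example. The "deep" variant would swap the lookup table below for a neural network.

```python
import random

# Hypothetical 4x4 maze: 'S' start, 'E' exit, '#' wall, '.' open path.
MAZE = [
    "S.#.",
    ".##.",
    "....",
    "#.#E",
]
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right
START, EXIT = (0, 0), (3, 3)


def step(state, action):
    """Move one cell; bumping into a wall or the edge leaves you in place."""
    r, c = state[0] + action[0], state[1] + action[1]
    if not (0 <= r < len(MAZE) and 0 <= c < len(MAZE[0])) or MAZE[r][c] == "#":
        r, c = state
    reward = 10.0 if (r, c) == EXIT else -1.0  # every non-exit step costs a little
    return (r, c), reward


def best_action(q, state):
    """Index of the action with the highest estimated value in this cell."""
    return max(range(len(ACTIONS)), key=lambda i: q.get((state, i), 0.0))


def train(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn action values by trial and error (assumed hyperparameters)."""
    rng = random.Random(seed)
    q = {}  # (state, action_index) -> estimated long-term value
    for _ in range(episodes):
        state = START
        for _ in range(200):  # cap the length of each attempt
            # Mostly follow what has worked so far, occasionally try something new.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = best_action(q, state)
            nxt, reward = step(state, ACTIONS[a])
            # Nothing more to gain once the exit is reached.
            future = 0.0 if nxt == EXIT else q.get((nxt, best_action(q, nxt)), 0.0)
            old = q.get((state, a), 0.0)
            # Q-learning update: nudge the estimate toward reward + discounted future.
            q[(state, a)] = old + alpha * (reward + gamma * future - old)
            state = nxt
            if state == EXIT:
                break
    return q


def greedy_path(q, max_steps=50):
    """Follow the highest-valued action from the start, without exploring."""
    state, path = START, [START]
    while state != EXIT and len(path) <= max_steps:
        state, _ = step(state, ACTIONS[best_action(q, state)])
        path.append(state)
    return path
```

Calling `greedy_path(train())` follows the highest-valued move from each cell. After training, that route should lead from the start to the exit rather than into the dead ends the agent stumbled into early on.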