Nvidia is no newcomer to the car business. But this was the first year that the chipmaker–better known for developing GeForce GPUs for desktop gaming–chose to focus almost exclusively on automotive technology at CES.

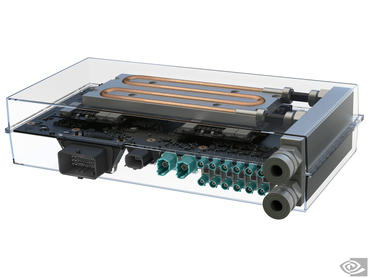

At the show, Nvidia did announce its first GPU using more advanced process technology, specifically TSMC’s 16nm FinFET+ process, but it wasn’t the high-end GeForce GPU we were expecting. Instead the company announced a new Drive PX 2 module, which CEO Jen-Hsun Huang described as “the world’s first in-car supercomputer for artificial intelligence.” The module includes two SoCs and two 16FF+ next-generation Pascal GPUs. During Nvidia’s CES press conference, Huang also went into a lot of detail on the company’s development of a deep-learning platform for handling the complex tasks required to enable autonomous driving.

Unlike the first Drive PX, which was announced at last year’s CES and based on the Tegra X1 SoC developed for mobile devices, the Drive PX 2 uses both SoCs and discrete GPUs to deliver much higher performance, albeit at higher power. Each of the two SoCs has four ARM Cortex-A57 CPUs and two of Nvidia’s custom 64-bit “Denver” ARM CPU cores, as well as a Pascal GPU. On the flip side, the Drive PX 2 board also includes two discrete Pascal GPUs, which appear to be attached using MXM connectors as in laptops. For now, Nvidia isn’t providing many details about the SoC or Pascal GPUs, but it is promising significant performance gains from the combined 12 CPU cores and four GPUs.

Specifically, Nvidia says that the Drive PX 2 will deliver 8 teraflops, or eight trillion single-precision 32-bit floating-point (FP32) operations per second. In comparison, Nvidia’s fastest single desktop GPU, the Maxwell-based Titan X, is capable of 7 teraflops FP32. However, most deep-learning models do not require this level of precision, and the Drive PX 2 includes a new set of instructions designed specifically for these operations. As a result, Nvidia says it will be capable of handling 24 trillion deep-learning operations per second (something Huang dubbed “teraops”) or six times the “deep-learning throughput” of the Titan X.

To achieve this kind of performance, the Drive PX 2 is also using desktop-like power–specifically around 250 watts despite the more efficient 16nm FinFET transistors. The module relies on liquid-cooling to operate in a demanding car environment, but Nvidia said it will offer an optional heat transfer unit for vehicles that are not designed with water cooling. Many competing solutions–including the current Drive PX–use a fraction of the power. For example, Imagination’s Alex Voica told me at CES that chips based on its designs from top automotive players such as Renesas and Texas Instruments can deliver one teraflop using as little as three to four watts of power. Others such as Ceva, Cadence’s Tensilica, and Freescale/NXP have been talking about using DSPs and other fixed-function hardware to handle computer vision tasks in milliwatts.
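To put the power gap in perspective, the figures quoted here can be turned into a rough performance-per-watt comparison. The Python sketch below is only back-of-the-envelope arithmetic on the vendors' own claims; the constant and function names are made up for illustration, and the two teraflop figures may not have been measured the same way.

```python
# Figures quoted in the article (vendor claims, not measurements).
DRIVE_PX2_TFLOPS = 8.0          # FP32 teraflops claimed by Nvidia
DRIVE_PX2_WATTS = 250.0         # approximate module power

IMAGINATION_TFLOPS = 1.0        # claimed for chips based on Imagination designs
IMAGINATION_WATTS = (3.0, 4.0)  # "three to four watts"


def gflops_per_watt(tflops: float, watts: float) -> float:
    """Convert a teraflops figure and a power draw into gigaflops per watt."""
    return tflops * 1000.0 / watts


px2 = gflops_per_watt(DRIVE_PX2_TFLOPS, DRIVE_PX2_WATTS)
img_best = gflops_per_watt(IMAGINATION_TFLOPS, IMAGINATION_WATTS[0])
img_worst = gflops_per_watt(IMAGINATION_TFLOPS, IMAGINATION_WATTS[1])

print(f"Drive PX 2: {px2:.0f} GFLOPS/W")
print(f"Imagination-based chips: {img_worst:.0f} to {img_best:.0f} GFLOPS/W")
```

On these numbers the Drive PX 2 comes out at about 32 gigaflops per watt, against roughly 250 to 333 for the low-power parts, which is the efficiency trade-off Nvidia is making in exchange for absolute performance.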

But Nvidia argues that the “very hard” problem of autonomous driving in real-world conditions will require much higher performance. The Drive PX 2 can also process the inputs of up to 12 video cameras, plus lidar, radar and ultrasonic sensors; put it all together to identify objects; and calculate the best path in real time. Altogether it can process 2,800 images per second versus 450 images per second for Titan X.
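As a quick sanity check, the image-throughput figures line up with the roughly six-times advantage Nvidia quotes for deep learning. The short Python sketch below uses only numbers from this article; the variable names are illustrative, and the second ratio mixes reduced-precision “teraops” with FP32 teraflops, so it is a rough indication rather than a like-for-like comparison.

```python
# Figures quoted in the article (Nvidia's claims, not independent measurements).
PX2_IMAGES_PER_SEC = 2800
TITAN_X_IMAGES_PER_SEC = 450
PX2_TERAOPS = 24.0      # reduced-precision "deep-learning operations"
TITAN_X_TFLOPS = 7.0    # single-precision (FP32) teraflops

# Ratio of claimed image throughput, Drive PX 2 versus Titan X.
image_ratio = PX2_IMAGES_PER_SEC / TITAN_X_IMAGES_PER_SEC

# Ratio of the headline compute figures; the units differ, so this is approximate.
raw_ratio = PX2_TERAOPS / TITAN_X_TFLOPS

print(f"Image throughput ratio: {image_ratio:.1f}x")
print(f"Teraops vs. FP32 teraflops: {raw_ratio:.1f}x")
```

The image ratio works out to about 6.2, in line with the “six times” claim, while the raw teraops-to-teraflops ratio is closer to 3.4, which suggests the headline multiple is based on measured image throughput rather than on peak compute figures alone.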

This sounds promising but it won’t amount to much if carmakers can’t develop the technology and build it into vehicles. Huang said that Nvidia’s strategy is to work with the ecosystem to optimize its GPUs for deep-learning frameworks such as Caffe, Theano and Torch; accelerate deep-learning everywhere from the cloud to cars; and develop an end-to-end platform to train and deploy deep learning applications and continuously enhance them.

The first component is DIGITS, which is Nvidia’s existing tool for developing and training deep neural networks. The use of GPUs such as Titan X has cut the training time on large data sets from months to days, making it possible to train complex neural networks on very large training sets. These models can run on Drive PX 2 or any other Nvidia GPU-based system. Huang compared this breakthrough in deep learning to the Internet and mobile computing, and said it will give superhuman perception capabilities.

At the press conference, Nvidia talked about two other tools designed to harness this power for self-driving cars:

DriveWorks is a set of software tools and libraries to develop and test the hardware and software for autonomous vehicles. Nvidia demonstrated how to use DriveWorks in a vehicle to take the output from four LIDARs and six cameras to figure out how the car is moving in space, detect objects around it, and plot a safe course.

Finally, there’s DriveNet, Nvidia’s reference neural network. Huang went into lots of detail on how Nvidia trained DriveNet using data from ImageNet and Daimler to teach it how to detect objects and classify all of the pixels associated with each object in order to recognize multiple objects. It then retrained the model “overnight” using a more challenging data set from Audi (for example, driving in the snow when the lanes and other cars are not clearly visible) and it was able to recognize objects that were difficult to see with the human eye.

This ability to continually refine the models over time will be critical. Cars will leave the lots with superhuman capabilities, Huang said, but as they encounter new situations they do not recognize, they will send the data up to the cloud, where it will be used to retrain models, which will be pushed back down to all vehicles, making them smarter and safer. “There’s so much more to do. Not only do we want to recognize objects, we want to recognize circumstances,” Huang said. “You are now using compute, which is relatively cheap, as opposed to engineering time.” Digital assistants such as Apple’s Siri, Google Now and Microsoft Cortana have become much more powerful over time by using this same technique.

Audi, BMW, Daimler, Fanuc, Ford, AI start-up Preferred Networks, Toyota and robotics company ZMP (which is trying to develop autonomous taxis in time for the Olympics in Japan) are all using Nvidia’s deep-learning technology. The Drive PX 2 will be available in the fourth quarter and Volvo plans to install it in 100 cars starting in 2017 as part of a trial of autonomous driving in Göteborg, Sweden.

Although the rumored Ford and Google partnership failed to materialize, there were many other autonomous driving announcements in Las Vegas this week. Mercedes announced that its new E-Class, set to debut at the Detroit Auto Show in a few days, will be the first “standard-production vehicle” authorized for autonomous driving on public roads in Nevada (some Tesla Model S sedans already have self-driving capabilities). Kia said it is also set to begin testing in Nevada using a Soul EV with a collection of ADAS (Advanced Driver Assistance Systems) technology that is part of its DRIVE WISE plan to make its cars partially autonomous by 2020 and fully autonomous by 2030. Chevrolet, Ford and Toyota also talked about ongoing development of self-driving cars.

BlackBerry’s QNX subsidiary announced a new platform designed to make it easier for carmakers to add ADAS features. Finally, start-up Quanergy demonstrated a prototype solid-state LIDAR system that it claims will slash the cost of these 360-degree sensors from $70,000 to $1,000 per car when it reaches volume production in 2017.