Facebook this morning announced it will increase the penalties against its rule-breaking Facebook Groups and their members, alongside other changes designed to reduce the visibility of groups’ potentially harmful content. The company says it will now remove civic and political groups from its recommendations in markets outside the U.S., and will further restrict the reach of groups and members who continue to violate its rules.

The changes follow what has been a steady but slow, and sometimes ineffective, crackdown on Facebook Groups that produce and share harmful, polarizing or even dangerous content.

Ahead of the U.S. elections, Facebook implemented a series of new rules designed to penalize those who violated its Community Standards or spread misinformation via Facebook Groups. These rules largely assigned more responsibility to Groups themselves, and penalized individuals who broke rules. Facebook also stopped recommending health groups, to push users to official sources for health information, including for information about Covid-19.

This January, Facebook made a more significant move against potentially dangerous groups. It announced it would remove civic and political groups, as well as newly created groups, from its recommendations in the U.S. following the insurrection at the U.S. Capitol on Jan. 6, 2021. (Previously, it had temporarily limited these groups ahead of the U.S. elections.)

As The WSJ reported when this policy became permanent, Facebook’s internal research had found that Facebook groups in the U.S. were polarizing users and inflaming the calls for violence that spread after the elections. The researchers said roughly 70% of the top 100 most active civic Facebook Groups in the U.S. had issues with hate, misinformation, bullying and harassment that should make them non-recommendable, leading to the January 2021 crackdown.

Today, that same policy is being rolled out to Facebook’s global user base, not just Facebook U.S. users.

That means in addition to health groups, users worldwide won’t be “recommended” civic or political groups when browsing Facebook. It’s important, however, to note that recommendations are only one of many ways users find Facebook Groups. Users can also find them in search, through links people post, and through invites and friends’ private messages.

In addition, Facebook says groups that have gotten in trouble for violating Facebook’s rules will now be shown lower in recommendations — a sort of downranking penalty Facebook often uses to reduce the visibility of News Feed content.

The company will also increase the penalties against rule-violating groups and their individual members through a variety of other enforcement actions.

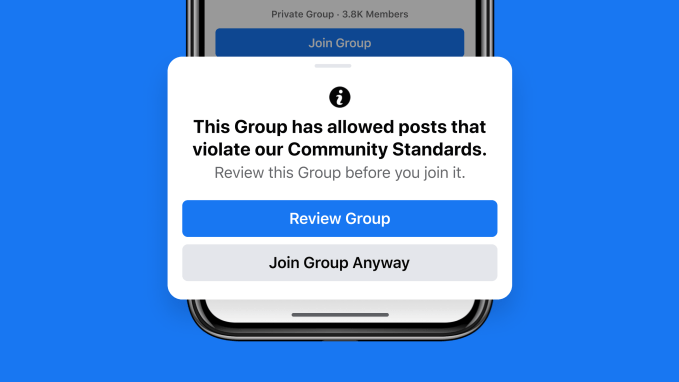

Image Credits: Facebook

For example, users who attempt to join groups that have a history of breaking Facebook’s Community Standards will be alerted to the group’s violations through a warning message (shown above), which may cause the user to reconsider joining.

The rule-violating groups will have their invite notifications limited, and current members will begin to see less of the groups’ content in their News Feed, as the content will be shown further down. These groups will also be demoted in Facebook’s recommendations.

When a group hosts a substantial number of members who have violated Facebook policies or participated in other groups that were shut down for Facebook Community Standards violations, the group itself will have to temporarily approve all members’ new posts. And if the admin or moderator repeatedly approves rule-breaking content, Facebook will then take the entire group down.

This rule aims to address problems around groups that re-form after being banned, only to restart their bad behavior unchecked.

The final change being announced today applies to group members.

When someone has repeated violations in Facebook Groups, they’ll be temporarily stopped from posting or commenting in any group, won’t be allowed to invite others to join groups, and won’t be able to create new groups. This measure aims to slow down the reach of bad actors, Facebook says.

The new policies give Facebook a way to more transparently document a group’s bad behavior that led to its final shutdown. This “paper trail,” of sorts, also helps Facebook duck accusations of bias when it comes to its enforcement actions — a charge often raised by Facebook critics on the right, who believe social networks are biased against conservatives.

But the problem with these policies is that they’re still ultimately hand slaps for those who break Facebook’s rules, not all that different from what users today jokingly refer to as “Facebook jail.” When individuals or Facebook Pages violate Facebook’s Community Standards, they’re temporarily prevented from interacting on the site or using specific features. Facebook is now trying to replicate that formula, with modifications, for Facebook Groups and their members.

There are other issues, as well. For one, these rules rely on Facebook to actually enforce them, and it’s unclear how well it will be able to do so. For another, they ignore one of the key means of group discovery: search. Facebook claims it downranks low-quality results here, but the results of its efforts are decidedly mixed.

For example, though Facebook made sweeping statements about banning QAnon content across its platform in a misinformation crackdown last fall, it’s still possible to search for and find QAnon-adjacent content, like groups that aren’t titled QAnon but cater to QAnon-styled “patriots” and conspiracies.

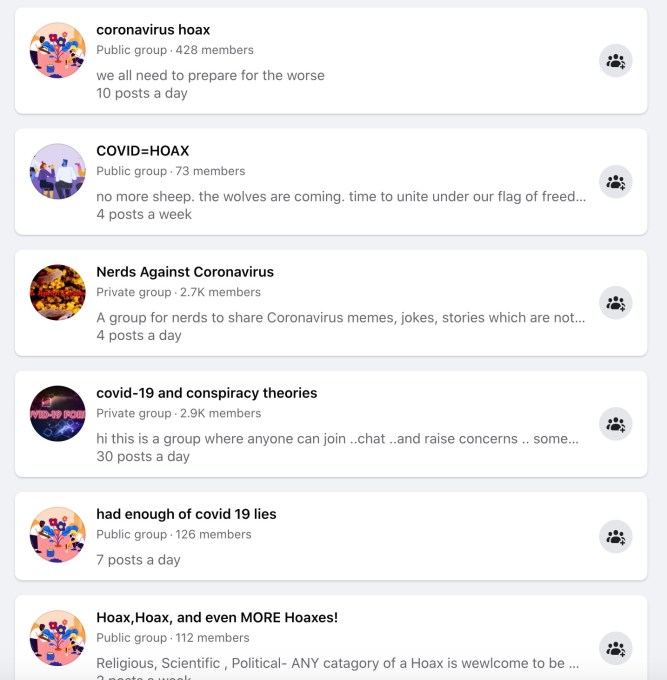

Similarly, searches for terms like “antivax” or “covid hoax” can also direct users to problematic groups, like the one for people who “aren’t anti-vax in general,” but are “just anti-RNA,” the group’s title explains; or the “parents against vaccines” group; or the “vaccine haters” group that proposes it’s spreading the “REAL vaccine information.” (We surfaced these on Tuesday, ahead of Facebook’s announcement.)

Clearly, these are not official health resources, and would not otherwise be recommended per Facebook policies, but they are easy to surface through Facebook search. The company, however, takes stronger measures against Covid-19 and Covid vaccine misinformation: it says it will remove Pages, groups, and accounts that repeatedly share debunked claims, and otherwise downranks them.

Facebook, to be clear, is fully capable of using stronger technical means of blocking access to content.

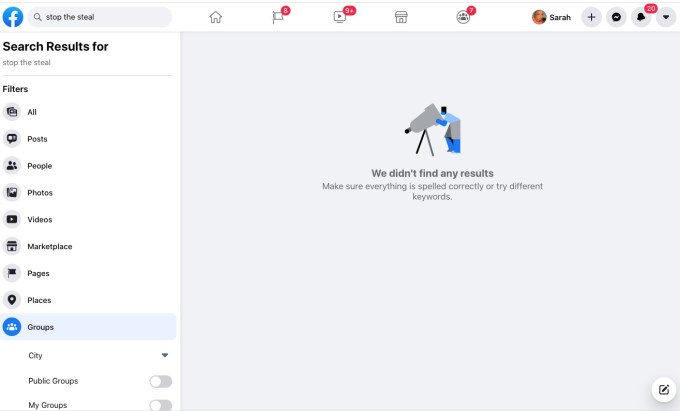

It banned “stop the steal” and other conspiracies following the U.S. elections, for example. And even today, a search for “stop the steal” groups simply returns a blank page saying no results were found.

Image Credits: Facebook fully blocks “stop the steal”

So why should a search for a banned topic like “QAnon” return anything at all?

Why should “covid hoax”? (see below)

Image Credits: Facebook group search results for “covid hoax”

If Facebook wanted to broaden its list of problematic search terms, and return blank pages for other types of harmful content, it could. In fact, if it wanted to maintain a block list of URLs that are known to spread false information, it could do that, too. It could prevent users from re-sharing any post that included those links. It could make those posts default to non-public. It could flag users who violate its rules repeatedly, or some subset of those rules, as users who no longer get to set their posts to public…ever.

In other words, Facebook could do many, many things if it truly wanted to have a significant impact on the spread of misinformation, toxicity, and polarizing or otherwise harmful content on its platform. Instead, it continues inching forward with temporary punishments, often aimed only at “repeated” violations, such as the ones announced today. These are, arguably, more penalties than it had before, but also maybe not enough.