An artificial retina would be an enormous boon to the many people with visual impairments, and the possibility is creeping closer to reality year by year. One of the latest advancements takes a different and very promising approach, using tiny dots that convert light to electricity, and virtual reality has helped show that it could be a viable path forward.

These photovoltaic retinal prostheses come from the École polytechnique fédérale de Lausanne, where Diego Ghezzi has been working on the idea for several years now.

Early retinal prosthetics were created decades ago, and the basic idea is as follows: A camera outside the body (on a pair of glasses, for instance) sends a signal over a wire to a tiny microelectrode array, which consists of many tiny electrodes that pierce the nonfunctioning retinal surface and stimulate the working cells directly.

The main problems are that powering and sending data to the array requires a wire running from outside the eye in, which is generally a “don’t” when it comes to prosthetics and the body. The array is also limited in the number of electrodes it can have by the size of each, meaning that for many years the effective resolution in the best-case scenario was on the order of a few dozen or a few hundred “pixels.” (The concept doesn’t translate directly because of the way the visual system works.)

Ghezzi’s approach obviates both these problems with the use of photovoltaic materials, which turn light into an electric current. It’s not so different from what happens in a digital camera, except instead of recording the charge as an image, it sends the current into the retina like the powered electrodes did. There’s no need for a wire to relay power or data to the implant, because both are provided by the light shining on it.

Image Credits: Alain Herzog / EPFL

In the case of the EPFL prosthesis, there are thousands of tiny photovoltaic dots, which would in theory be illuminated by a device outside the eye that sends light in according to what a camera detects. That other part of the setup would be a pair of glasses or goggles that both capture an image and project it through the eye onto the implant. Of course, it’s still an incredibly difficult thing to engineer.

We first heard of this approach back in 2018, and things have changed somewhat since then, as a new paper documents.

“We increased the number of pixels from about 2,300 to 10,500,” explained Ghezzi in an email to TechCrunch. “So now it is difficult to see them individually and they look like a continuous film.”

Of course when those dots are pressed right up against the retina it’s a different story. After all, that’s only 100×100 pixels or so if it were a square — not exactly high definition. But the idea isn’t to replicate human vision, which may be an impossible task to begin with, let alone realistic for anyone’s first shot.
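For a sense of scale, the arithmetic behind that "100×100 or so" figure can be sketched in a few lines of Python. The pixel counts come from Ghezzi's quote above. The helper name `square_side` and the idea of laying the pixels out in a square are illustrative assumptions only, not a description of the implant's actual layout.

```python
import math

def square_side(pixel_count):
    """Side length, in pixels, of a hypothetical square grid holding pixel_count pixels."""
    return math.sqrt(pixel_count)

# Pixel counts quoted by Ghezzi; the square layout is only a thought experiment.
for label, count in [("earlier version", 2300), ("current version", 10500)]:
    side = square_side(count)
    print(f"{label}: {count} pixels is roughly {side:.0f} x {side:.0f}")
```

By this rough measure the earlier version works out to about 48 pixels on a side and the current one to about 102.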

“Technically it is possible to make pixel smaller and denser,” Ghezzi explained. “The problem is that the current generated decreases with the pixel area.”

Current decreases with pixel size, and pixel size isn’t exactly large to begin with. Image Credits: Ghezzi et al

So the more you add, the tougher it is to make it work, and there’s also the risk (which they tested) that two adjacent dots will stimulate the same network in the retina. But too few and the image created may not be intelligible to the user. 10,500 sounds like a lot, and it may be enough — but the simple fact is that there’s no data to support that. To start on that the team turned to what may seem like an unlikely medium: VR.

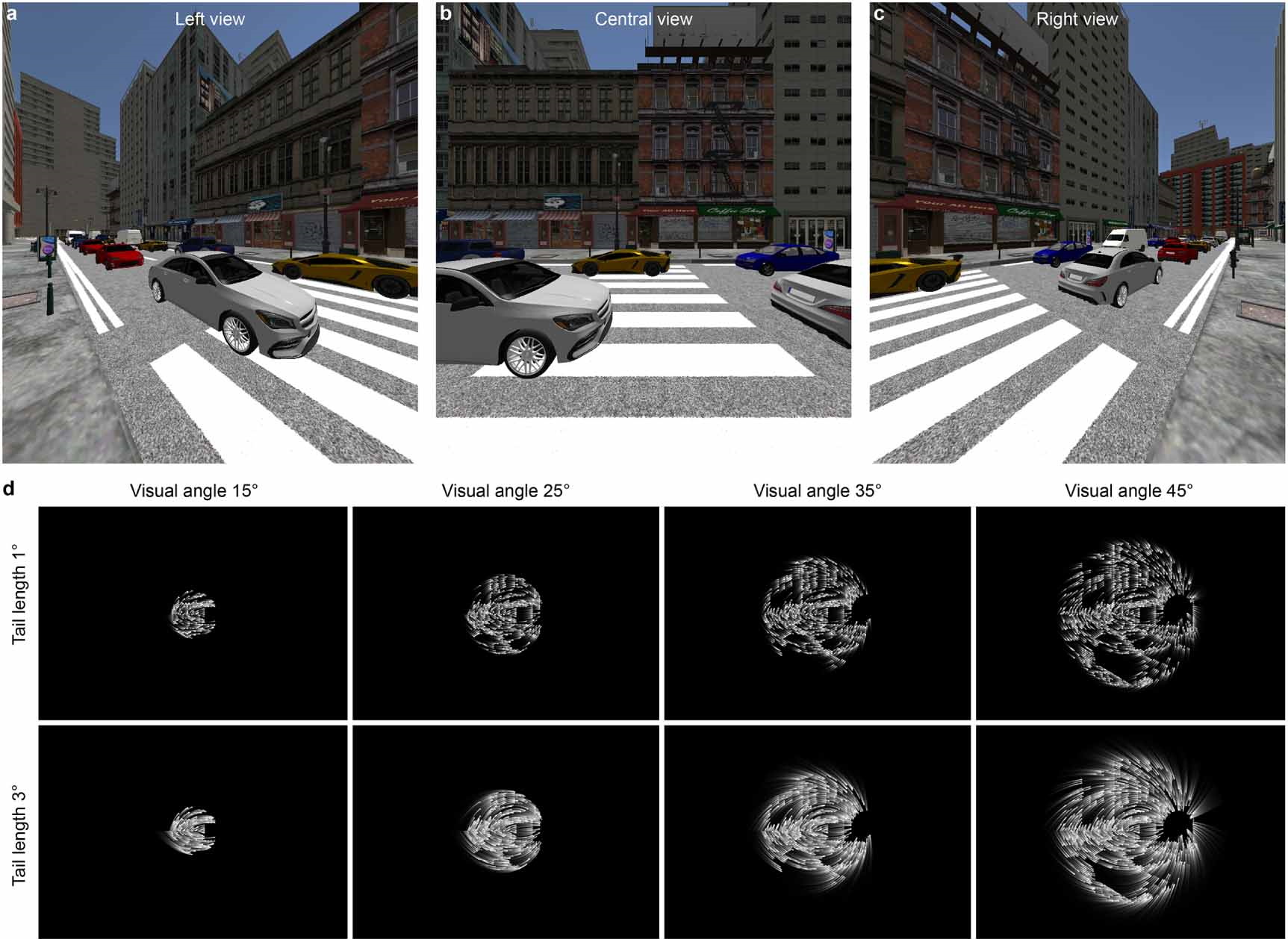

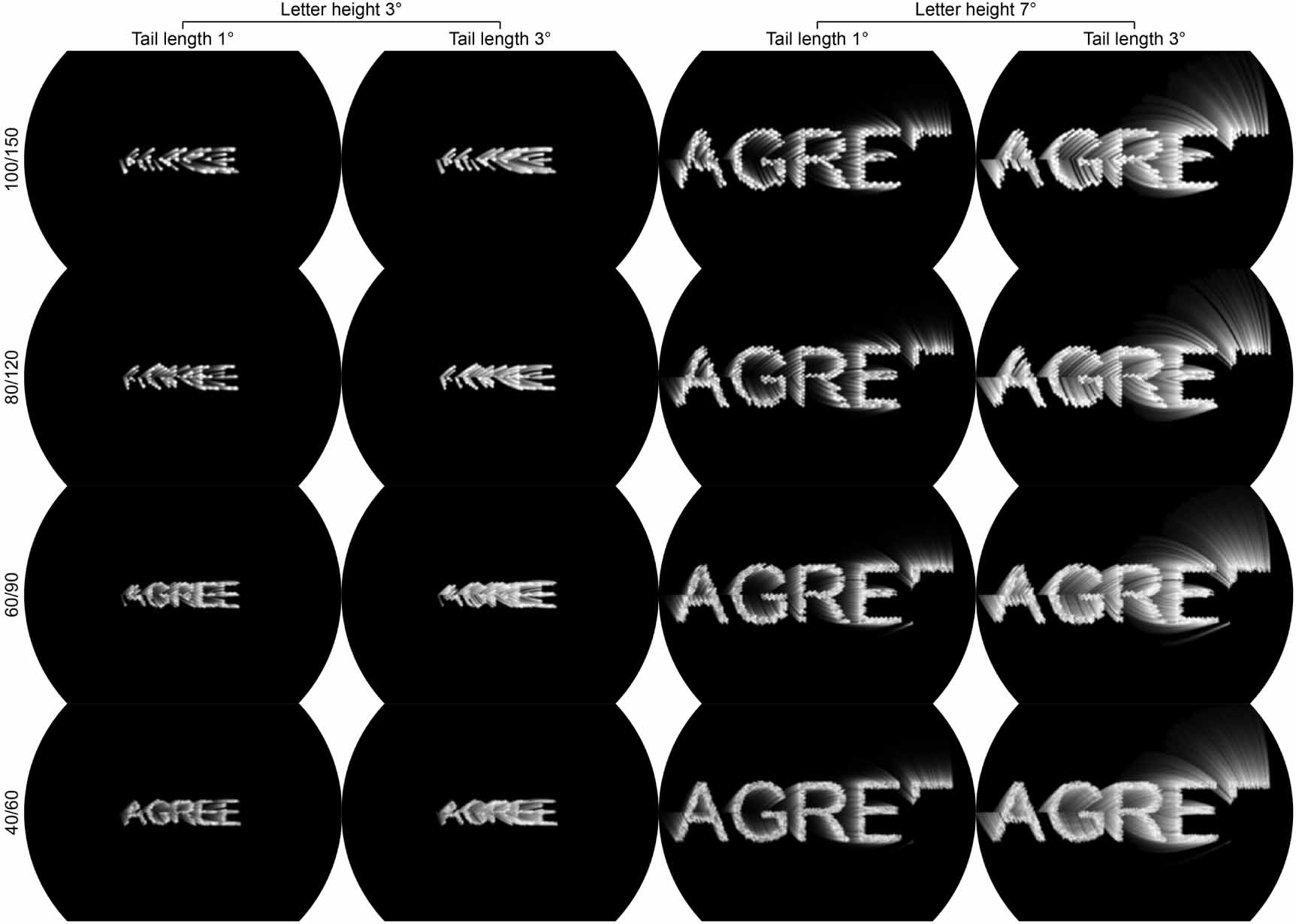

Because the team can’t exactly do a “test” installation of an experimental retinal implant on people to see if it works, they needed another way to tell whether the dimensions and resolution of the device would be sufficient for certain everyday tasks like recognizing objects and letters.

Image Credits: Jacob Thomas Thorn et al

To do this, they put people in VR environments that were dark except for little simulated “phosphenes,” the pinpricks of light they expect to create by stimulating the retina via the implant; Ghezzi likened what people would see to a constellation of bright, shifting stars. They varied the number of phosphenes, the area they appear over, and the length of their illumination or “tail” when the image shifted, asking participants how well they could perceive things like a word or scene.

Image Credits: Jacob Thomas Thorn et al
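To give a rough feel for what such a simulation involves, here is a minimal Python sketch that reduces an image to a coarse grid of simulated points of light (phosphenes) and estimates how densely they are packed across a field of view. It is not the researchers' code: the function names, the block-averaging approach, the square layout and the example angles are all assumptions made for illustration, and the "tail" effect is not modeled.

```python
import math

def phosphene_view(image, grid_size):
    """Reduce a grayscale image (rows of 0.0-1.0 values) to a grid_size x grid_size
    grid of brightness values by averaging the pixels each simulated dot covers.
    Hypothetical helper, not taken from the study."""
    height, width = len(image), len(image[0])
    view = []
    for gy in range(grid_size):
        # Pixel rows covered by this row of simulated dots
        y0 = gy * height // grid_size
        y1 = max(y0 + 1, (gy + 1) * height // grid_size)
        row = []
        for gx in range(grid_size):
            # Pixel columns covered by this simulated dot
            x0 = gx * width // grid_size
            x1 = max(x0 + 1, (gx + 1) * width // grid_size)
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        view.append(row)
    return view

def phosphenes_per_degree(count, visual_angle_deg):
    """Linear density of dots across a square field of view (an assumed layout)."""
    return math.sqrt(count) / visual_angle_deg

# A tiny 4x4 test image: bright left half, dark right half.
image = [[1.0, 1.0, 0.0, 0.0] for _ in range(4)]
print(phosphene_view(image, 2))

# Same dot count spread over a narrow vs. a wide field (angles are illustrative).
for angle in (20, 40):
    print(angle, "degrees:", round(phosphenes_per_degree(10500, angle), 2), "dots per degree")
```

Spreading the same number of dots over a wider angle lowers the density per degree, which is the trade-off between sharpness and field of view that the participants were effectively being tested on.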

Their primary finding was that the most important factor was visual angle, the overall size of the area where the image appears. Even a clear image is difficult to understand if it only takes up the very center of your vision, so even if overall clarity suffers it’s better to have a wide field of vision. The brain’s visual system is robust enough to intuit things like edges and motion even from sparse inputs.

This demonstration showed that the implant’s parameters are theoretically sound and the team can start working toward human trials. That’s not something that can happen in a hurry, and while this approach is very promising compared with earlier, wired ones, it will still be several years, even in the best-case scenario, before it could be made widely available. Still, the very prospect of a working retinal implant of this type is an exciting one and we’ll be following it closely.