Tech giants have gotten worse at removing illegal hate speech from their platforms under a voluntary arrangement in the European Union, according to the Commission’s latest assessment.

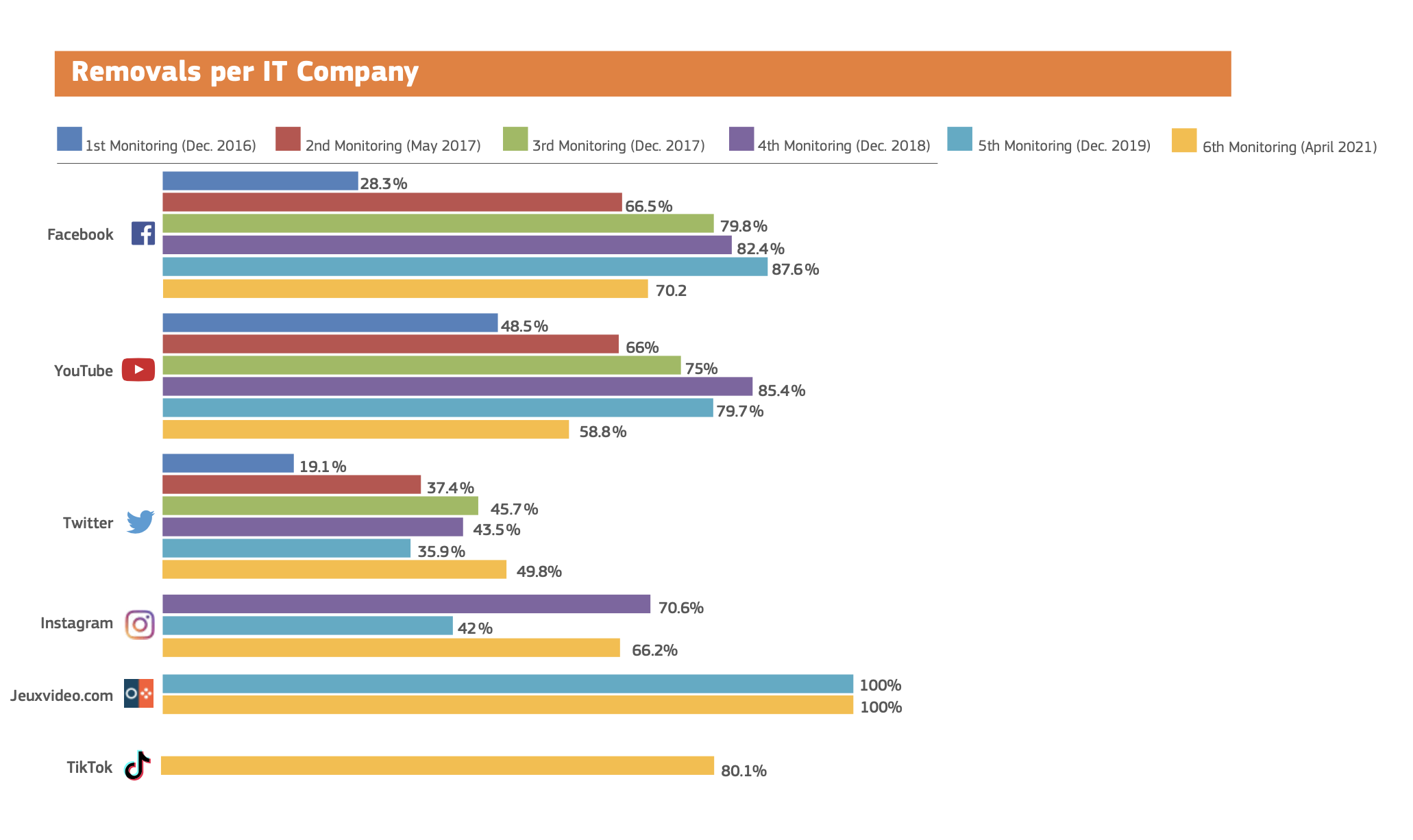

The sixth evaluation report of the EU’s Code of Conduct on removing illegal hate speech found what the bloc’s executive calls a “mixed picture”, with platforms reviewing 81% of the notifications within 24 hours and removing an average of 62.5% of flagged content.

These results are lower than the average recorded in both 2019 and 2020, the Commission notes.

The self-regulatory initiative kicked off back in 2016, when Facebook, Microsoft, Twitter and YouTube agreed to remove hate speech that falls foul of their community guidelines in less than 24 hours.

Since then Instagram, Google+, Snapchat, Dailymotion, Jeuxvideo.com, TikTok and LinkedIn have also signed up to the code.

While the headline promises were bold, the reality of how platforms have performed has often fallen short of what was pledged. And while there had been a trend of improving performance, that’s now stopped or stalled per the Commission — with Facebook and YouTube among the platforms performing worse than in earlier monitoring rounds.

Screengrab of a chart showing varying rates of removals per company, from a European Commission fact-sheet on the hate speech removals code (Image Credit: European Commission)

A key driver for the EU to establish the code five years ago was concern about the spread of terrorist content online, as lawmakers sought ways to quickly apply pressure to platforms to speed up the removal of hate-preaching content.

But the bloc now has a regulation for that: In April the EU adopted a law on terrorist content takedowns that set one hour as the default time for removals to be carried out.

EU lawmakers have also proposed a wide-ranging update to digital regulations that would expand requirements on platforms and digital services in a range of areas related to how they handle illegal content and goods.

This Digital Services Act (DSA) has not yet been passed, so the self-regulatory code is still operational — for now.

The Commission said today that it wants to discuss the code’s evolution with signatories, including in light of the “upcoming obligations and the collaborative framework in the proposal for a Digital Services Act”. So whether the code gets retired entirely, or beefed up as a supplement to the incoming legal framework, remains to be seen.

On disinformation, where the EU also operates a voluntary code to push the tech industry to combat the spread of harmful non-truthful content, the Commission has said it intends to keep obligations voluntary while strengthening the code’s measures and, at least for the largest platforms, linking compliance with the code to the legally binding DSA.

The stalled improvement in platforms’ hate speech removals under the voluntary code suggests the approach may have run its course. Or that platforms are taking their foot off the gas while they wait to see what specific legal requirements they will have.

The Commission notes that while some companies’ results “clearly worsened”, others “improved” over the monitored period. But such patchy results are perhaps a core limitation of a non-binding code.

EU lawmakers also flagged that “insufficient feedback” to users (via notifications) remains a “main weakness” of the code, as in previous monitoring rounds. So, again, legal force seems necessary — and the DSA proposes standardized rules for elements like reporting procedures.

Commenting on the latest report on the hate speech code in a statement, Věra Jourová, the Commission’s VP for values and transparency, looked ahead to the incoming regulation, saying: “Our unique Code has brought good results but the platforms cannot let the guard down and need to address the gaps. And gentlemen agreement alone will not suffice here. The Digital Services Act will provide strong regulatory tools to fight against illegal hate speech online.”

“The results show that IT companies cannot be complacent: just because the results were very good in the last years, they cannot take their task less seriously,” added Didier Reynders, commissioner for Justice, in another supporting statement. “They have to address any downward trend without delay. It is matter of protecting a democratic space and fundamental rights of all users. I trust that a swift adoption of the Digital Services Act will also help solving some of the persisting gaps, such as the insufficient transparency and feedback to users.”

Other findings from the illegal hate speech takedowns monitoring exercise include that:

- Removal rates varied depending on the severity of hateful content: 69% of content calling for murder or violence against specific groups was removed, compared with 55% of content using defamatory words or pictures aimed at certain groups. In 2020, the respective figures were 83.5% and 57.8%.

- IT companies gave feedback to 60.3% of the notifications received, which is lower than during the previous monitoring exercise (67.1%).

- In this monitoring exercise, sexual orientation is the most commonly reported ground of hate speech (18.2%) followed by xenophobia (18%) and anti-Gypsyism (12.5%).

The Commission also said that for the first time signatories reported “detailed information” about measures taken to counter hate speech outside the monitoring exercise, including actions to automatically detect and remove content.