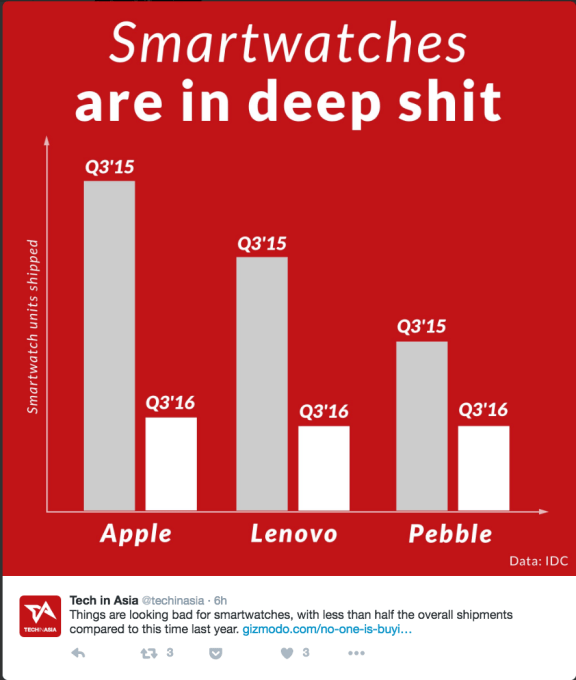

Even before smartwatches, I did not wear a watch. I did not buy an Apple Watch or Pebble, despite the cool factor, even though I always upgrade to the latest iPhone as soon as it comes out and the lines subside.

I don’t like the way watches feel on my wrist; more importantly, I don’t get real value from a watch. Unless you are in the Army like my son, where a G-Shock is the preferred timepiece, there are (and for a long time have been) clocks on the wall everywhere. And there are phones with the time and date in every pocket.

Needless to say, the graph below about the decline in smartwatch sales is no surprise to me.

I would also suggest that for an innovative new device to take off and become a platform, it needs to pioneer a new user interface that uses a different limb or sense.

The PC really took off with the GUI and the mouse. If you remember pre-mouse, pre-GUI computers like the Commodore 64, you know just how few of us actually owned and used one. The mouse put my hand to use, and the PC took off.

The smartphone, which was the next platform, pioneered the touchscreen at scale. There is a big difference in experience and convenience between my finger swipe and a full-handed mouse. This enabled a new experience, which, when layered onto the always-on and always-on-me nature of the smartphone, created the next platform.

Apple’s iPod and its click wheel were actually the first indication that the finger was another limb and a radically different UI from the mouse. The touchscreen built on that innovation. By contrast, the watch uses the same finger-on-touchscreen interface as the phone. It does not tickle a new human sense or use a different limb. Hence, it does not offer enough innovation to drive new applications, new uses and, ultimately, a platform.

There is another dimension, though, as Professor David Passig pointed out before me: each new level or era of technology also extends human geographic freedom. The smartphone has certainly done this, since we can now do everything on the go; the internet did it before that through remote information and services; and, before that, the PC expanded geographic freedom as well, untethering people from the need to finish their work at school or the office.

It is through the combination of these two frameworks that I think about the next generation of technology, and about the areas of computing and applications in which I hope to invest over the coming years. I would suggest that this combined framework is also useful when you look at the two platforms competing to become the next platform of the information revolution.

Facebook bought Oculus, essentially betting that VR is the next platform for computing. VR certainly makes use of another human sense: sight. It has a different user interface: my eyeballs and a headset (or perhaps even my brain interpreting what I am seeing). However, I think VR fails the second test right now (and for the foreseeable future). It does not expand human geographic freedom; it actually constrains it. It is a seated experience, and it expands my geographic freedom only virtually. Virtual freedom is an escape, not actual geographic freedom.

I think this, however, points us to where the real opportunity is: my ears and, by extension, my mouth. I think Amazon and Apple are onto something with Alexa and the wireless AirPods, respectively. Apple, the creator of the smartphone touch-interface revolution, has intuited that Bluetooth, sensors, a wireless chip and other basics of smartphone computing can be packed into my ears. Amazon, which whiffed on the Fire, has decided to leapfrog a generation and move to voice.

Interestingly, I think Apple got there by moving the device from my hands to my ears, while Amazon got there because shopping is now an always-on experience, and Amazon wants me to shout at Alexa whenever I discover that I am missing a product. So Alexa serves as a set of virtual ears while I use my mouth to restock the refrigerator, whereas Apple uses my actual ears to free my hands, with my sound-emitting mouth as a byproduct.

Both Apple and Amazon, from whichever direction they approach the innovation, are using another limb: my ears. And by using voice as a command interface, whether in proximity or over long distances, we gain yet another meaningful extension of human freedom, particularly since it is also a hands-free interface.

By freeing my hands, we can enable innovation that uses voice, sound and untethered hands in ways we cannot yet imagine. Imagine what human ingenuity could bring forward if we took our teenagers’ hands off their phones.

Interestingly, this same observation about voice is likely what is driving Google’s Pixel phone. Google is not really after the phone; rather, it is driving, enhancing and expanding the use of its voice-powered assistant. We have heard from sources that 25 percent of the responses on Inbox are now Smart Replies, which is quite incredible. This is in line with Google making sure it owns the software and network layer of the interface of the future, which, in its view, is clearly voice.

I am very excited about voice and voice applications, as well as in-ear wireless computing. I think they have another benefit, too. By unshackling us from the little screens in our hands, they raise the currently craned heads of Homo sapiens back to eye level. Then we may also start talking to each other, and not just to Alexa.

Featured Image: Vstock/Getty Images