The graphical fidelity of games these days is truly astounding, but one thing their creators struggle to portray is the variety and fluidity of human motion. An animation system powered by a neural network drawing from real motion-captured data may help make our avatars walk, run and jump a little more naturally.

Of course, if you’ve played any modern game, you may find that many already do. But this is painstaking work, accomplished by animators working from libraries of motions and linking together all kinds of contingencies: what if she raises her bow as she’s going up stairs and crouching? What if she gets hit while trying to balance on a narrow beam? The possibilities are practically endless.

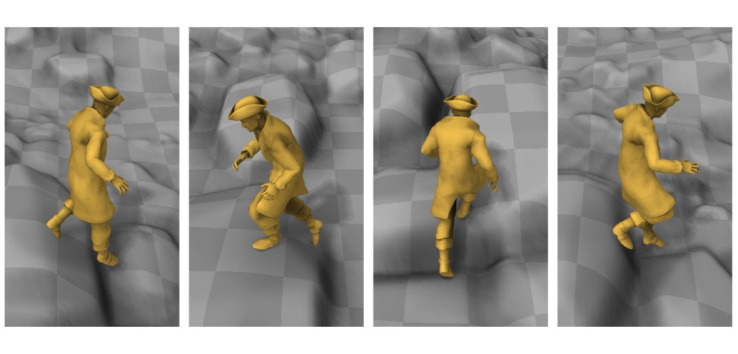

Researchers from the University of Edinburgh and Method Studios put together a machine learning system that feeds on motion capture clips showing various kinds of movement. Then, when given an input such as a user saying “go this way” and taking into account the terrain, it outputs the animation that best fits both — for example, going from a jog to hopping over a small obstacle.

No custom animation has to be made transitioning from a jog to a hop; the algorithm determines that, producing smooth movements and no jarring switches from one animation type to another. Although plenty of game engines do improvise a little for things like foot placement and blend animations, this is a new approach that could prove more robust.

Machine learning has been brought to this space before, but as the team shows in the video, the systems produced were pretty rudimentary, showing the wrong type of movement or skipping animations because they weren’t sure which to use, or committing too strongly to one animation state and producing jittery motion.

To avoid this, the researchers add a phase function to the neural network that essentially prevents it from wrongly mixing different animation types — for instance, taking a step in the middle of a jump.
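For the curious, here is a rough sketch of what a phase function could look like. It assumes the network's weights are not fixed but are blended from four sets of "control" weights by a cyclic Catmull-Rom spline, with the phase (where the character is in its stride, from 0 to 2π) choosing the blend. That is my reading of the approach rather than the researchers' code, and the names `catmull_rom`, `phase_weights` and `control_weights` are made up for illustration.

```python
import math


def catmull_rom(y0, y1, y2, y3, t):
    """Cubic Catmull-Rom interpolation between y1 and y2 for t in [0, 1]."""
    return (
        y1
        + t * (0.5 * y2 - 0.5 * y0)
        + t * t * (y0 - 2.5 * y1 + 2.0 * y2 - 0.5 * y3)
        + t ** 3 * (1.5 * y1 - 1.5 * y2 + 0.5 * y3 - 0.5 * y0)
    )


def phase_weights(control_weights, phase):
    """Blend control weight vectors cyclically according to the phase (radians).

    control_weights: list of equally long weight vectors (assumed: four of them).
    Returns one weight vector; the result repeats every 2*pi.
    """
    n = len(control_weights)
    # Map the phase onto [0, n): the integer part picks the spline segment,
    # the fractional part is the position within that segment.
    scaled = n * (phase % (2 * math.pi)) / (2 * math.pi)
    k = int(scaled) % n
    t = scaled - int(scaled)
    # Neighbouring control points wrap around, which makes the spline cyclic.
    w0, w1, w2, w3 = (control_weights[(k + i - 1) % n] for i in range(4))
    return [catmull_rom(a, b, c, d, t) for a, b, c, d in zip(w0, w1, w2, w3)]


def neuron(weights, inputs):
    """A single linear neuron: the dot product of weights and inputs."""
    return sum(w * x for w, x in zip(weights, inputs))


# Hypothetical toy values: four control weight vectors for a two-input neuron.
control_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
inputs = [0.5, 2.0]

for phase in (0.0, math.pi / 4, math.pi / 2):
    weights = phase_weights(control_weights, phase)
    print(round(phase, 3), neuron(weights, inputs))
```

Because the weights change smoothly and wrap around with the stride, the same input can produce different output at different points in the cycle, which is one plausible way to keep a step from leaking into the middle of a jump.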

“Since our method is data-driven, the character doesn’t simply play back a jump animation, it adjusts its movements continuously based on the height of the obstacle,” the researchers say in a video describing the new method.

You couldn’t put this into a game as-is, of course, but it could be the start of a more intelligent method of blending and creating animations. That could mean less grunt work for animators and more natural-looking movements for characters we play as. Now there’s just the question of how to do this for aliens, spiders and other creatures that you don’t often find in a mocap studio.

Daniel Holden, Taku Komura and Jun Saito (the latter of Method Studios) will be presenting their work at this year’s SIGGRAPH. You can read more about it at Holden’s webpage.