It’s suspiciously convenient that Facebook already fulfills most of the regulatory requirements it’s asking governments to impose on the rest of the tech industry. Facebook CEO Mark Zuckerberg is in Brussels lobbying the European Union’s regulators as they draft new laws to govern artificial intelligence, content moderation and more. But if they follow Facebook’s suggestions, they might reinforce the social network’s power rather than keep it in check, by hamstringing companies with fewer resources.

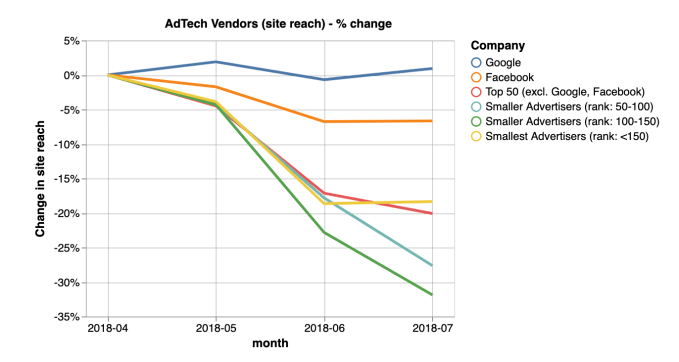

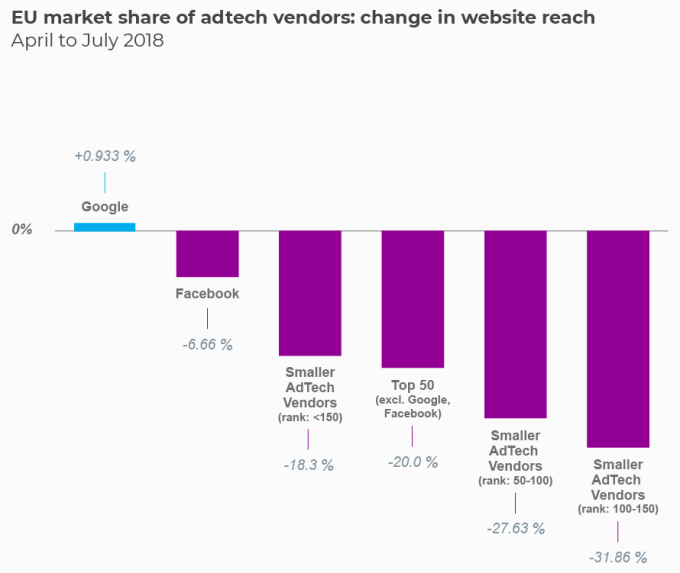

We already saw this happen with GDPR. The idea was to strengthen privacy and curb the exploitative data collection that tech giants like Facebook and Google depend on for their business models. The result, according to WhoTracksMe, was that Facebook and Google actually gained EU market share or lost only a little, while all other adtech vendors got wrecked by the regulation.

GDPR went into effect in May 2018, hurting other adtech vendors’ EU market share much more than Google’s or Facebook’s. Image credit: WhoTracksMe

Tech giants like Facebook have the profits, lawyers, lobbyists, engineers, designers, scale and steady cash flow to navigate regulatory changes. Unless new laws are squarely targeted at the abuses or dominance of these large companies, the collateral damage to everyone else can loom large. Rather than spend time and money they don’t have in order to comply, some smaller competitors will fold, scale back or sell out.

But at least in the case of GDPR, everyone had to add new transparency and opt-out features. If Facebook’s slate of requests goes through, it will sail forward largely unperturbed while rivals and upstarts scramble to get up to speed. I made this argument in March 2018 in my post “Regulation could protect Facebook, not punish it.” Then GDPR did exactly that.

Google gained market share and Facebook only lost a little in the EU following GDPR. Everyone else fared worse. Image via WhoTracksMe

That doesn’t mean these safeguards aren’t sensible for everyone to follow. But regulators need to consider what Facebook isn’t suggesting if they want to address the company’s scope and brazenness, and what timelines or penalties would be feasible for smaller players.

If we take a quick look at what Facebook is proposing, it becomes obvious that it’s self-servingly suggesting what it’s already accomplished:

- User-friendly channels for reporting content – Every post and entity on Facebook can already be flagged by users with an explanation of why

- External oversight of policies or enforcement – Facebook is finalizing its independent Oversight Board right now

- Periodic public reporting of enforcement data – Facebook publishes a twice-yearly report about enforcement of its Community Standards

- Publishing their content standards – Facebook publishes its standards and notes updates to them

- Consulting with stakeholders when making significant changes – Facebook consults a Safety Advisory Board and will have its new Oversight Board

- Creating a channel for users to appeal a company’s content removal decisions – Facebook’s Oversight Board will review content removal appeals

- Incentives to meet specific targets such as keeping the prevalence of violating content below some agreed threshold – Facebook already touts how 99% of child nudity content and 80% of hate speech removed was detected proactively, and that it deletes 99% of ISIS and Al Qaeda content

Facebook CEO Mark Zuckerberg at the European Union headquarters in Brussels, May 22, 2018. (Photo credit: JOHN THYS/AFP/Getty Images)

Finally, Facebook asks that the rules for what content should be prohibited on the internet “recognize user preferences and the variation among internet services, can be enforced at scale, and allow for flexibility across language, trends and context.” That’s a lot of leeway. Facebook already allows different content in different geographies to comply with local laws, lets Groups police themselves more than the News Feed does, and Zuckerberg has voiced support for customizable filters on objectionable content with defaults set by local majorities.

“…Can be enforced at scale” is a last push for laws that wouldn’t require armies of human moderators to enforce, since hiring them could further drag down Facebook’s share price. “100 billion pieces of content come in per day, so don’t make us look at it all.” Investments in safety for elections, content and cybersecurity already dragged Facebook’s profit growth down from 61% year-over-year in Q4 2018 to just 7% in Q4 2019.

To be clear, it’s great that Facebook is doing any of this already. Little is formally required. If the company were as evil as some make it out to be, it wouldn’t be doing any of this.

Then again, Facebook earned $18 billion in profit in 2019 off our data while repeatedly proving it hasn’t adequately protected it. Its $5 billion fine and settlement with the FTC, in which Facebook pledged to build more around privacy and transparency, shows it’s still playing catch-up given its role as a ubiquitous communications utility.

There’s plenty more for EU and hopefully U.S. regulators to investigate. Should Facebook pay a tax on the use of AI? How does it treat and pay its human content moderators? Would requiring users to be allowed to export their interoperable friends list promote much-needed competition in social networking that could let the market compel Facebook to act better?

As EU internal market commissioner Thierry Breton told reporters following Zuckerberg’s meetings with regulators, “It’s not for us to adapt to those companies, but for them to adapt to us.”