Facebook this morning announced a new feature for Messenger designed to cut down on scams targeting its users. The company scans accounts for suspicious activity, using machine learning to pick up anomalies such as accounts sending a large number of requests in a short time span or sending numerous message requests to users under 18. The feature arrives amid a notable uptick in false friend requests caused by a change to the service’s search algorithm.

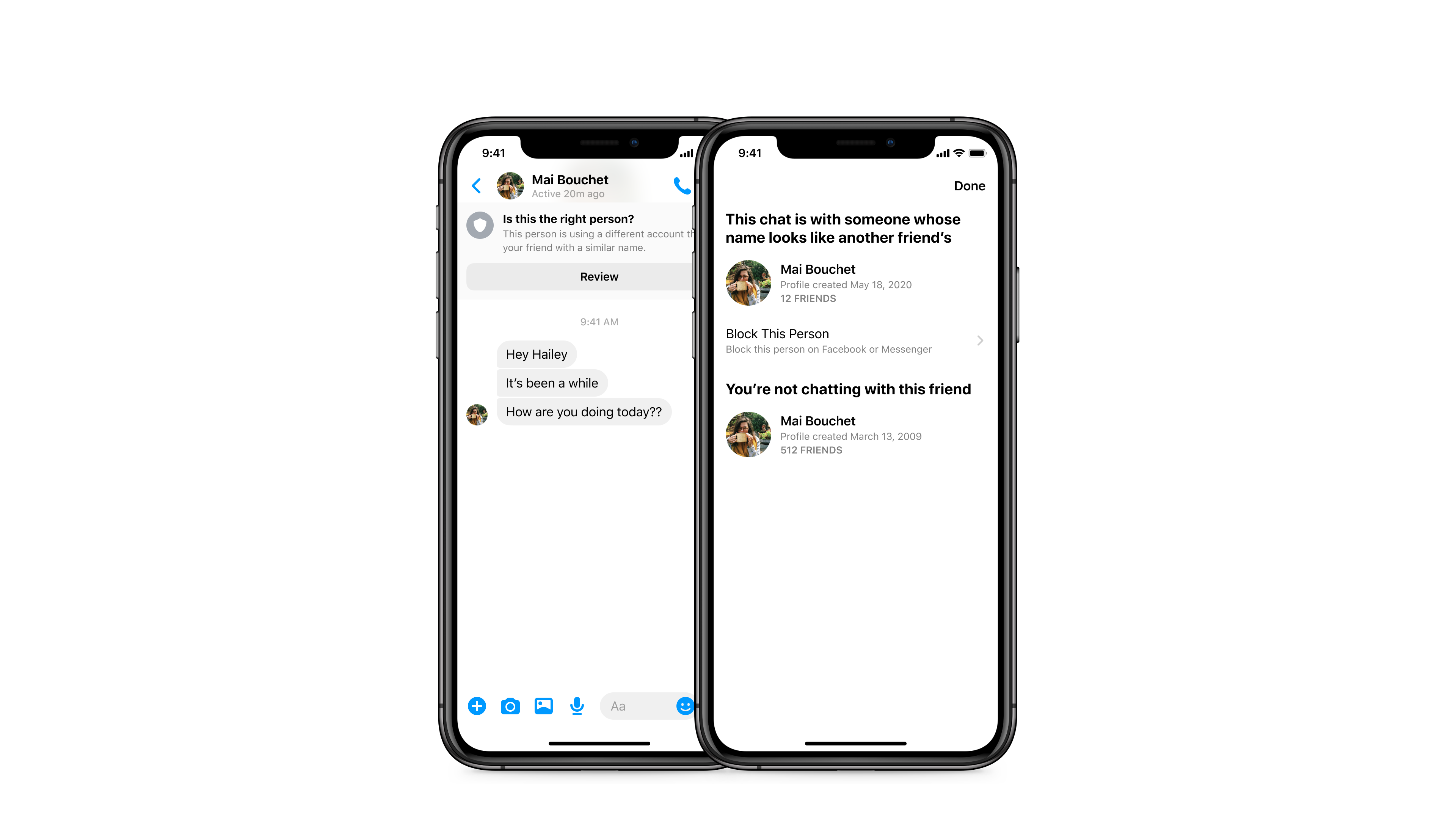

If suspicious activity is detected, the app will pop up a chat window noting the issue, along with options for blocking or ignoring the user. The system has already seen a limited rollout for some Android users, dating as far back as March; iOS functionality, meanwhile, is set to arrive sometime next week.

The feature aims to cut down on scammers and on users posing as other people, while also helping to protect minors from bad actors. The system is designed to limit interactions between adults and younger users who aren’t already connected on the platform. Per Facebook, “Our new feature educates people under the age of 18 to be cautious when interacting with an adult they may not know and empowers them to take action before responding to a message.”

Facebook says the feature will continue to work once end-to-end encryption is added to the platform. That is likely where the machine learning comes in, keeping human operators from having to view potentially sensitive information.