Facebook must exercise constant vigilance to keep its platform from being taken over by ne’er-do-wells, but exactly how it does that is known only to the company itself. Today, however, it has graced us with a bit of data on what tools it’s using and what results they’re getting: for instance, more than 14 million pieces of “terrorist content” removed so far this year.

More than half of that 14 million was old content posted before 2018, some of which had been sitting around for years. But as Facebook points out, that content may very well have gone unviewed that whole time. It’s hard to imagine a terrorist recruitment post going unreported for 970 days (the median age for content in Q1) if it were seeing any kind of traffic.

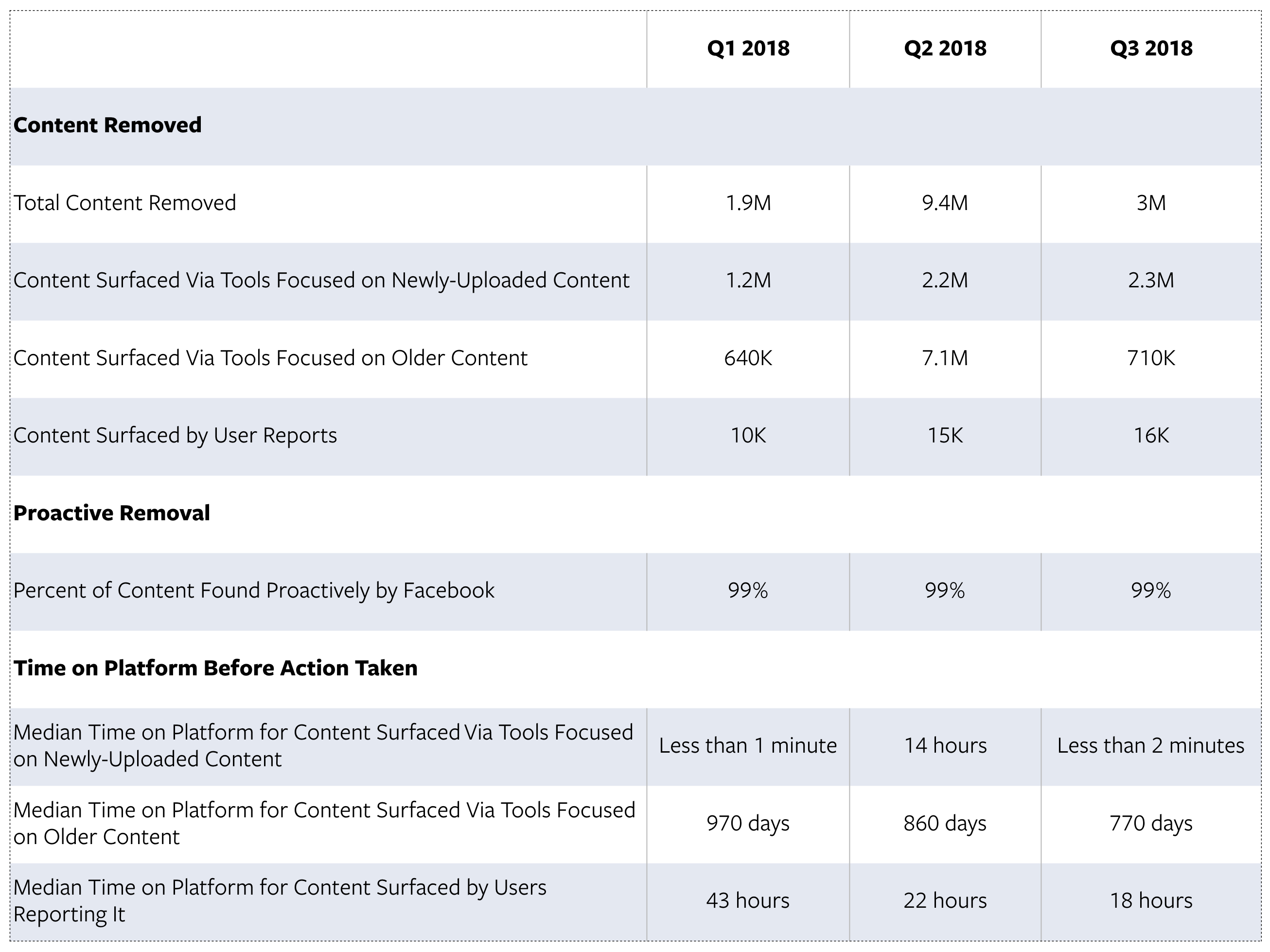

Perhaps more importantly, the amount of newer content removed (with, to Facebook’s credit, a quickly shrinking delay) appears to be growing steadily. In Q1, 1.2 million items were removed; in Q2, 2.2 million; in Q3, 2.3 million. Removals of user-reported content are growing as well, though they are much smaller in number: around 16,000 in Q3. Indeed, 99 percent of the terrorist content taken down, Facebook proudly reports, is removed “proactively.”

Something worth noting: Facebook is careful to avoid positive or additive verbs when talking about this content. For instance, it won’t say that “terrorists posted 2.3 million pieces of content,” but rather that this was the number of “takedowns” or pieces of content “surfaced.” This type of phrasing is more conservative and technically correct, as the company can really only be sure of its own actions, but it also serves to soften the fact that terrorists are posting hundreds of thousands of items monthly.
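As a rough check on that monthly figure, the quarterly totals quoted above can be divided by three. What follows is only a minimal sketch in Python. It assumes each quarter's removals are spread evenly across its three months, which is an assumption, since Facebook reports only quarterly totals. The names `quarterly_removals` and `monthly_average` are made up for illustration.

```python
# Quarterly removals of newer content, as quoted in this article.
quarterly_removals = {"Q1": 1_200_000, "Q2": 2_200_000, "Q3": 2_300_000}


def monthly_average(quarter_total: int) -> float:
    """Average per month, ASSUMING removals are spread evenly over 3 months."""
    return quarter_total / 3


for quarter, total in quarterly_removals.items():
    print(f"{quarter}: about {monthly_average(total):,.0f} removals per month")
```

Under that assumption the averages come out to roughly 400,000, 733,000 and 767,000 removals per month. Note that these are takedowns rather than posts, so they can only approximate how much is actually being uploaded.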

The numbers are hard to contextualize. Is this a lot or a little? Both, really. The amount of content posted to Facebook is so vast that almost any number looks small next to it, even a scary one like 14 million pieces of terrorist propaganda.

It is impressive, however, to hear that Facebook has greatly expanded the scope of its automated detection tools:

> Our experiments to algorithmically identify violating text posts (what we refer to as “language understanding”) now work across 19 languages.

And it fixed a bug that had been preventing some violating content from being removed at all, which temporarily drove up the median removal time:

> In Q2 2018, the median time on platform for newly uploaded content surfaced with our standard tools was about 14 hours, a significant increase from Q1 2018, when the median time was less than 1 minute. The increase was prompted by multiple factors, including fixing a bug that prevented us from removing some content that violated our policies, and rolling out new detection and enforcement systems.

The Q3 median is two minutes. It’s a work in progress.

No doubt we all wish the company had applied this level of rigor somewhat earlier, but it’s good to know that the work is being done. Notably, a great deal of this machinery is focused not simply on removing content, but on putting it in front of the constantly growing moderation team. So the most important bit is still, thankfully and heroically, done by people.