It takes an immense amount of processing power to create and operate the “AI” features we all use so often, from playlist generation to voice recognition. Lightmatter is a startup that is looking to change the way all that computation is done — and not in a small way. The company makes photonic chips that essentially perform calculations at the speed of light, leaving transistors in the dust. It just closed an $11 million Series A.

The claim may sound grandiose, but the team and the tech definitely check out. Nick Harris, Lightmatter’s CEO, wrote his thesis on this stuff at MIT, and has published several papers in major journals like Nature Photonics showing the feasibility of the photonic computing architecture.

So what exactly does Lightmatter’s hardware do?

At the base of all that AI and machine learning is, like most computing operations, a lot of math (hence the name computing). A general-purpose computer can do any of that math, but for complex problems it has to break it down into a series of smaller ones and perform them sequentially.

One such complex type of math problem common in AI applications is a matrix-vector product. Doing these quickly is important for comparing large sets of data with one another, for instance if a voice recognition system wants to see if a certain sound wave is sufficiently similar to “OK Google” to initiate a response.
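To make the "series of smaller ones" concrete, here is a minimal sketch of how a general-purpose processor might grind through a matrix-vector product one multiply-add at a time. The function name `matvec` and the toy `templates` and `signal` values are illustrative assumptions, not anything from Lightmatter or a real voice recognition system.

```python
def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector, one multiply-add at a time."""
    result = []
    for row in matrix:
        if len(row) != len(vector):
            raise ValueError("each row must be as long as the vector")
        total = 0.0
        # Every element costs one multiplication and one addition,
        # performed sequentially.
        for weight, x in zip(row, vector):
            total += weight * x
        result.append(total)
    return result


# Hypothetical toy data: two stored "templates" scored against one input signal.
templates = [
    [1.0, 0.0, -1.0],
    [0.5, 0.5, 0.5],
]
signal = [2.0, 4.0, 1.0]

scores = matvec(templates, signal)
print(scores)
```

A matrix with R rows and C columns needs R × C multiply-adds done this way, which is why the cost climbs so quickly as the data sets grow.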

The problem is that as demand increases for AI-based products, these calculations need to be done more often and faster, but we’re reaching the limits of just how quickly and efficiently they can be accomplished and relayed back to the user. So while the computing technology that has existed for decades isn’t going anywhere, for certain niches there are tantalizing options on the horizon.

“One of the symptoms of Moore’s Law dying is that companies like Intel are investing in quantum and other stuff — basically anything that’s not traditional computing,” Harris told me in an interview. “Now is a great time to look at alternative architectures.”

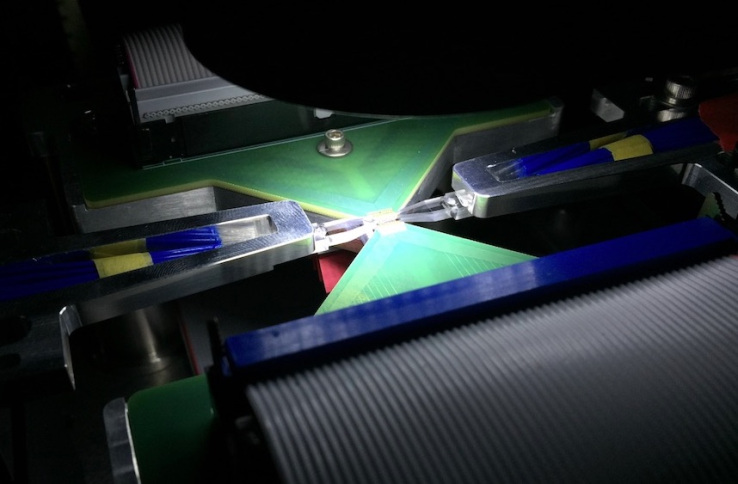

Instead of breaking that matrix calculation down to a series of basic operations with cascades of logic gates and transistors, Lightmatter’s photonic chips essentially solve the entire problem at once by running a beam of light through a gauntlet of tiny, configurable lenses (if that’s the right word at this scale) and sensors. By creating and tracking tiny changes in the phase or path of the light, the solution is found as fast as the light can get from one end of the chip to the other. Not only does this mean results come back nearly instantly, but it only uses a fraction of the power of traditional chips.
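For a rough feel of how a phase change can do arithmetic, here is a toy model of a single two-path interferometer, a textbook optical element. It is an assumption for illustration only: the article does not say which components Lightmatter's chips actually use, and the names `interferometer`, `detect` and `theta` are hypothetical. The point is that light passing through the element is effectively multiplied by a small matrix whose entries are set by the phase.

```python
import cmath
import math


def mat_vec(matrix, vector):
    """Plain matrix-vector product over complex amplitudes."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def interferometer(theta, field_in):
    """Send two optical amplitudes through splitter -> phase shift -> splitter."""
    s = 1 / math.sqrt(2)
    splitter = [[s, 1j * s], [1j * s, s]]          # idealized 50:50 beam splitter
    phase = [[cmath.exp(1j * theta), 0], [0, 1]]   # configurable phase on one path
    field = mat_vec(splitter, field_in)
    field = mat_vec(phase, field)
    return mat_vec(splitter, field)


def detect(field):
    """A sensor measures intensity, the squared magnitude of each amplitude."""
    return [abs(a) ** 2 for a in field]


# Light enters on the first path only; sweep the configurable phase.
for theta in (0.0, math.pi / 2, math.pi):
    print(theta, detect(interferometer(theta, [1, 0])))
```

In this idealized model the phase setting steers how the light divides between the two outputs, from all on one side through an even split to all on the other. The simulation above still computes that step by step; in the optical version the "multiplication" would simply happen as the light travels through.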

“A lot of deep learning relies on this specific operation that our chip can accelerate,” explained Harris. “It’s a special case where a special purpose optical computer can shine. This is the first photonic chip that can do that, accurately and in a scalable way.”

And not by 20 or 30 percent — we’re talking orders of magnitude here.

The company is built out of research Harris and colleagues began at MIT, which owns some of the patents relating to Lightmatter’s tech and licenses them to the company. They created a prototype chip with 32 “neurons,” the basic computational building block of this type of photonics. Now the company is well on its way to creating one with hundreds.

“In speed, power, and latency we’re pretty close to what you can theoretically do,” Harris said. That is to say, you can’t make light go any faster. But just like with traditional computers, you can make the chips denser, have them work in parallel, improve the sensors and so on.

You wouldn’t have one of these things in your home. Lightmatter chips would be found in specialty hardware used by hardcore AI developers. Maybe Google would buy a few dozen and use them to train stuff internally, or Amazon might make them available by the quarter second for quick-turnaround ML jobs.

The $11 million Series A round the company just announced, led by Matrix and Spark, is intended to help build the team that will take the technology from prototype to product.

“This isn’t a science project,” said Matrix’s Stan Reiss, lest you think this is just a couple of students on a wild technology goose chase. “This is the first application of optical computing in a very controlled manner.”

Competitors, he noted, are focused on squeezing every drop of performance out of semi-specialized hardware like GPUs, making AI-specific boards that outperform stock hardware but ultimately are still traditional computers with lots of tweaks.

“Anyone can build a chip that works like that, the problem is they’ll have a lot of competition,” he said. “This is the one company that’s totally orthogonal to that. It’s a different engine.”

And it has only recently become possible, they both pointed out. Investment in basic research and the infrastructure behind building photonic chips over the last decade has paid off, and it’s finally gotten to the point where the technology can break out of the lab. (Lightmatter’s tech works with existing CMOS-based fabrication methods, so no need to spend hundreds of millions on a new fab.)

“AI is really in its infancy,” as Harris put it in the press release announcing the investment, “and to move forward, new enabling technologies are required. At Lightmatter, we are augmenting electronic computers with photonics to power a fundamentally new kind of computer that is efficient enough to propel the next generation of AI.”