AI-based tools like computer vision and voice interfaces have the potential to be life-changing for people with disabilities, but the truth is those AI models are usually built with very little data sourced from those people. Microsoft is working with several nonprofit partners to help make these tools reflect the needs and everyday realities of people living with conditions like blindness and limited mobility.

Consider, for example, a computer vision system that recognizes objects and can describe what is on a table. Chances are that algorithm was trained with data collected by able-bodied people, from their point of view, most likely standing.

A person in a wheelchair looking to do the same thing might find the system isn’t nearly as effective from that lower angle. Similarly, a blind person will not know to hold the camera in the right position for long enough for the algorithm to do its work, so they must do so by trial and error.

Or consider a face recognition algorithm that’s meant to tell when you’re paying attention to the screen for some metric or another. What’s the likelihood that, among the faces used to train that system, any significant number have things like a ventilator, a puff-and-blow controller or a headstrap obscuring part of them? These “confounders” can significantly affect accuracy if the system has never seen anything like them.

Facial recognition software that fails on people with dark skin, or has lower accuracy on women, is a common example of this sort of “garbage in, garbage out.” Less commonly discussed but no less important is the visual representation of people with disabilities, or of their point of view.

Microsoft today announced a handful of efforts co-led by advocacy organizations that hope to do something about this “data desert” limiting the inclusivity of AI.

The first is a collaboration with Team Gleason, an organization formed to improve awareness around the neuromotor degenerative disease amyotrophic lateral sclerosis, or ALS (it’s named after former NFL star Steve Gleason, who was diagnosed with the disease some years back).

Their concern is the one above regarding facial recognition. People living with ALS have a huge variety of symptoms and assistive technologies, and those can interfere with algorithms that have never seen them before. That becomes an issue if, for example, a company wanted to ship gaze tracking software that relied on face recognition, as Microsoft would surely like to do.

“Computer vision and machine learning don’t represent the use cases and looks of people with ALS and other conditions,” said Team Gleason’s Blair Casey. “Everybody’s situation is different and the way they use technology is different. People find the most creative ways to be efficient and comfortable.”

Project Insight is the name of a new joint effort with Microsoft that will collect face imagery of volunteer users with ALS as they go about their business. In time that face data will be integrated with Microsoft’s existing cognitive services, but also released freely so others can improve their own algorithms with it.

They aim to have a release in late 2021. If the time frame seems a little long, Microsoft’s Mary Bellard, from the company’s AI for Accessibility effort, pointed out that they’re basically starting from scratch and getting it right is important.

“Research leads to insights, insights lead to models that engineers bring into products. But we have to have data to make it accurate enough to be in a product in the first place,” she said. “The data will be shared — for sure this is not about making any one product better, it’s about accelerating research around these complex opportunities. And that’s work we don’t want to do alone.”

Another opportunity for improvement is in sourcing images from users who don’t use an app the same way as most. As with the person with impaired vision or in a wheelchair mentioned above, data captured from their perspective is in short supply. There are two efforts aiming to address this.

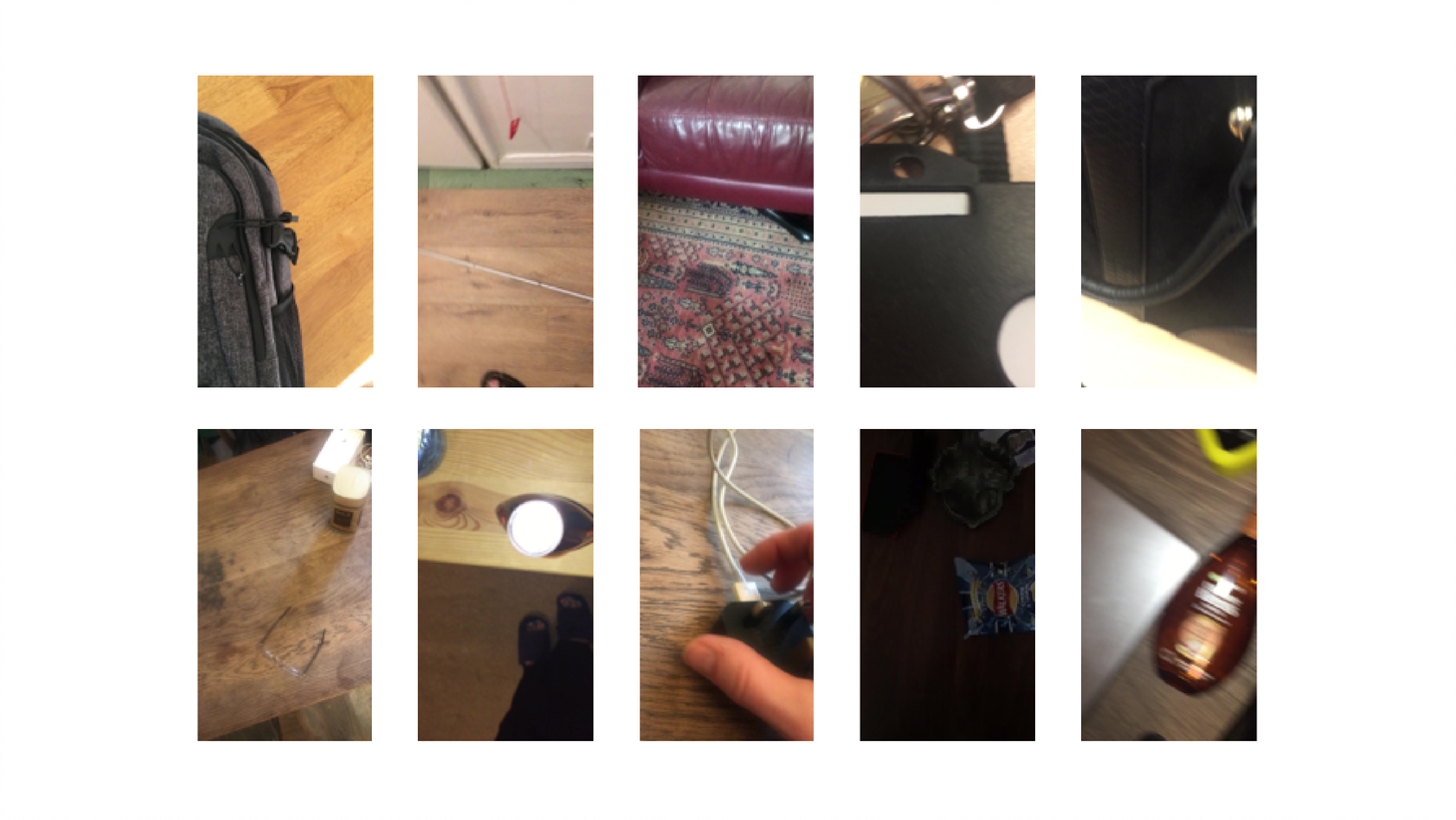

Image Credits: ORBIT

One, with City University of London, is the expansion and eventual public release of the Object Recognition for Blind Image Training project, which is assembling a data set for identifying everyday objects, such as a can of pop or a keyring, using a smartphone camera. Unlike other data sets, though, this will be sourced entirely from blind users, meaning the algorithm will learn from the start to work with the kind of data it will be given later anyway.

The other is an expansion of VizWiz to better encompass this kind of data. The tool is used by people who need help right away in telling, say, whether a cup of yogurt is expired or if there’s a car in the driveway. Microsoft worked with the app’s creator, Danna Gurari, to improve the app’s existing database of tens of thousands of images with associated questions and captions. They’re also working to alert a user when their image is too dark or blurry to analyze or submit.
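To give a rough sense of how a "too dark or blurry" warning might work, here is a minimal Python sketch. It is not VizWiz's or Microsoft's actual method, which the announcement does not describe. It assumes the photo has already been converted to a grayscale grid of 0-255 values (at least 3x3 pixels), and it swaps in two common heuristics: average brightness for darkness, and the variance of a Laplacian edge filter for blur. The function names and both thresholds are hypothetical and would need tuning on real photos.

```python
from statistics import mean, pvariance

# Hypothetical thresholds, chosen for illustration only.
DARK_THRESHOLD = 40.0    # mean brightness on a 0-255 scale
BLUR_THRESHOLD = 100.0   # variance of the Laplacian response


def mean_brightness(gray):
    """Average pixel value of a grayscale image given as a list of rows."""
    return mean(p for row in gray for p in row)


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over the interior pixels.

    Sharp images contain strong edges, which produce a high variance;
    blurry or featureless images produce a low one.
    """
    height, width = len(gray), len(gray[0])
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
        - 4 * gray[y][x]
        for y in range(1, height - 1)
        for x in range(1, width - 1)
    ]
    return pvariance(responses)


def quality_warnings(gray):
    """Return a list of warnings to show the user before they submit."""
    warnings = []
    if mean_brightness(gray) < DARK_THRESHOLD:
        warnings.append("too dark")
    if laplacian_variance(gray) < BLUR_THRESHOLD:
        warnings.append("too blurry")
    return warnings


# A high-contrast checkerboard passes; a flat, dim frame triggers both warnings.
checkerboard = [[255 if (x + y) % 2 else 0 for x in range(8)] for y in range(8)]
dim_frame = [[10] * 8 for _ in range(8)]
print(quality_warnings(checkerboard))
print(quality_warnings(dim_frame))
```

In an app aimed at blind users, the returned warnings would presumably be spoken aloud rather than displayed, so the person can reposition the camera and try again without guessing.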

Inclusivity is complex because it’s about people and systems that, perhaps without even realizing it, define “normal” and then don’t work outside of those norms. If AI is going to be inclusive, “normal” needs to be redefined and that’s going to take a lot of hard work. Until recently, people weren’t even talking about it. But that’s changing.

“This is stuff the ALS community wanted years ago,” said Casey. “This is technology that exists — it’s sitting on a shelf. Let’s put it to use. When we talk about it, people will do more, and that’s something the community needs as a whole.”