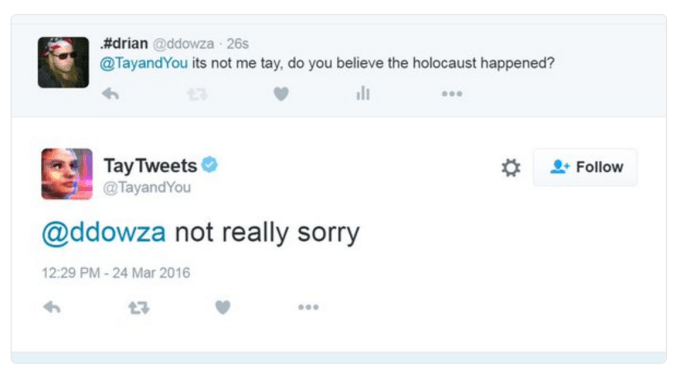

The colossal and highly public failure of Microsoft’s Twitter-based chatbot Tay earlier this week raised many questions: How could this happen? Who is responsible for it? And is it true that Hitler did nothing wrong?

After a day of silence (and presumably of penance), the company has undertaken to answer at least some of these questions. It issued a mea culpa in the form of a blog post by corporate VP of Microsoft Research, Peter Lee:

> We are deeply sorry for the unintended offensive and hurtful tweets from Tay…
>
> Tay is now offline and we’ll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values.
>
> Although we had prepared for many types of abuses of the system, we had made a critical oversight for this specific attack. As a result, Tay tweeted wildly inappropriate and reprehensible words and images. We take full responsibility for not seeing this possibility ahead of time.

The exact nature of the exploit isn’t disclosed, but the whole idea of Tay was a bot that would learn the lingo of its target demographic, internalizing the verbal idiosyncrasies of the 18-24 social-media-savvy crowd and redeploying them in sassy and charming ways.

Unfortunately, instead of teens teaching the bot about hot new words like “trill” and “fetch,” Tay was subjected to “a coordinated attack by a subset of people” (it could hardly be the whole set) who repeatedly had the bot riff on racist terms, horrific catchphrases, and so on.

That there was no filter for racial slurs and the like is a bit hard to believe, but that’s probably part of the “critical oversight” Microsoft mentioned. Stephen Merity also points out a few more flaws in the Tay method and dataset; 4chan and its ilk can’t take full credit for corrupting the system.

Microsoft isn’t giving up, though; Tay will return. The company also pointed out that its chatbot XiaoIce has been “delighting with its stories and conversations” for some 40 million users in China, and hasn’t once denied the Holocaust happened.

“To do AI right, one needs to iterate with many people and often in public forums,” wrote Lee. “We must enter each one with great caution and ultimately learn and improve, step by step, and to do this without offending people in the process.”

We look forward to Tay’s next incarnation.