Robots have a hard time improvising, and encountering an unusual surface or obstacle usually means an abrupt stop or hard fall. But researchers have created a new model for robotic locomotion that adapts in real time to any terrain it encounters, changing its gait on the fly to keep trucking when it hits sand, rocks, stairs and other sudden changes.

Robotic movement can be versatile and exact, and robots can “learn” to climb steps, cross broken terrain and so on, but these behaviors are more like individual trained skills that the robot switches between. And although robots like Spot famously can spring back from being pushed or kicked, the system is really just working to correct a physical anomaly while pursuing an unchanged policy of walking. There are some adaptive movement models, but some are very specific (for instance this one based on real insect movements) and others take so long to work that the robot will certainly have fallen by the time they take effect.

The team, from Facebook AI, UC Berkeley and Carnegie Mellon University, calls the new model Rapid Motor Adaptation (RMA). The idea came from the fact that humans and other animals are able to quickly, effectively and unconsciously change the way they walk to fit different circumstances.

“Say you learn to walk and for the first time you go to the beach. Your foot sinks in, and to pull it out you have to apply more force. It feels weird, but in a few steps you’ll be walking naturally just as you do on hard ground. What’s the secret there?” asked senior researcher Jitendra Malik, who is affiliated with Facebook AI and UC Berkeley.

Whether it’s your first time on a beach or you’ve walked on many, you aren’t entering some special “sand mode” that lets you walk on soft surfaces. The way you change your movement happens automatically and without any real understanding of the external environment.

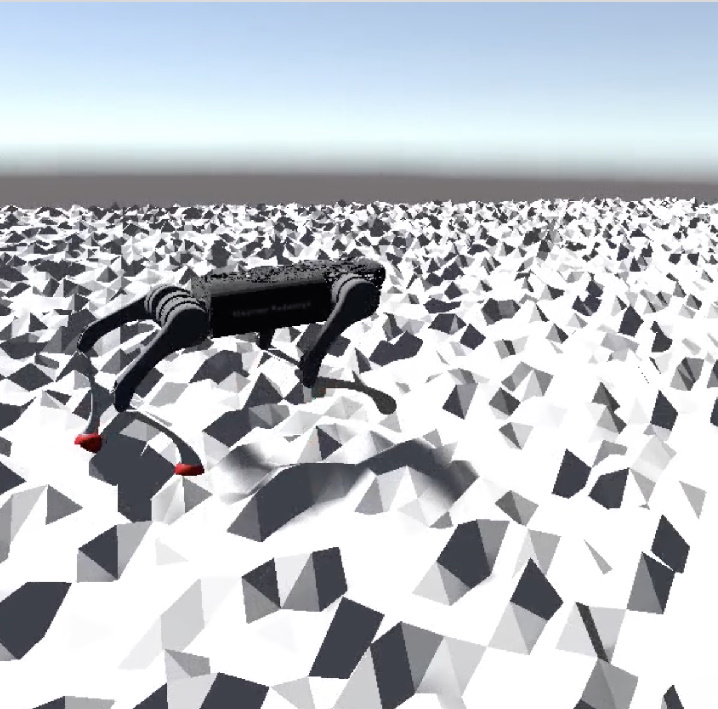

Visualization of the simulation environment. Of course the robot would not perceive any of this visually. Image Credits: Berkeley AI Research, Facebook AI Research and CMU

“What’s happening is your body responds to the differing physical conditions by sensing the differing consequences of those conditions on the body itself,” Malik explained — and the RMA system works in similar fashion. “When we walk in new conditions, in a very short time, half a second or less, we have made enough measurements that we are estimating what these conditions are, and we modify the walking policy.”

The system was trained entirely in simulation, in a virtual version of the real world where the robot’s small brain (everything runs locally on the on-board limited compute unit) learned to maximize forward motion with minimum energy and avoid falling by immediately observing and responding to data coming in from its (virtual) joints, accelerometers and other physical sensors.
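As a rough illustration of that training objective, the three goals (move forward, spend little energy, don't fall) can be folded into a single per-step score. This is only a sketch: the function name, the weights and the way energy is approximated are all assumptions made for the example, not the reward the researchers actually used.

```python
def step_reward(forward_velocity, joint_torques, joint_velocities, fell,
                energy_weight=0.01, fall_penalty=10.0):
    """Hypothetical per-step score: reward forward motion, penalize energy and falls.

    energy_weight and fall_penalty are made-up values for illustration only.
    """
    # Approximate mechanical effort as the sum of |torque * velocity| over joints.
    energy = sum(abs(t * v) for t, v in zip(joint_torques, joint_velocities))
    reward = forward_velocity - energy_weight * energy
    if fell:
        reward -= fall_penalty
    return reward


# Same forward speed, but the second gait works the joints harder.
efficient = step_reward(0.5, [1.0, 1.0], [2.0, 2.0], fell=False)
wasteful = step_reward(0.5, [5.0, 5.0], [2.0, 2.0], fell=False)
tumbled = step_reward(0.5, [1.0, 1.0], [2.0, 2.0], fell=True)
```

Under a score like this, a gait that covers the same ground with less effort is preferred, and any gait that ends in a fall scores far worse than either.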

To underscore the total internality of the RMA approach, Malik notes that the robot uses no visual input whatsoever. People and animals with no vision can walk just fine, so why shouldn’t a robot? And since it’s impossible to estimate “externalities” such as the exact friction coefficient of the sand or rocks it’s walking on, the robot simply keeps a close eye on itself.

“We do not learn about sand, we learn about feet sinking,” said co-author Ashish Kumar, also from Berkeley.

Ultimately the system ends up having two parts: a main, always-running algorithm actually controlling the robot’s gait, and an adaptive algorithm running in parallel that monitors changes to the robot’s internal readings. When significant changes are detected, it analyzes them — the legs should be doing this, but they’re doing this, which means the situation is like this — and tells the main model how to adjust itself. From then on the robot only thinks in terms of how to move forward under these new conditions, effectively improvising a specialized gait.
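The two-part arrangement can be pictured with a toy loop. In the real system both parts are trained in simulation; the classes below are hand-written, hypothetical stand-ins, and every name and number in them (the history length, the gain, the "shortfall" signal) is an assumption chosen only to show how the pieces hand information to each other.

```python
from collections import deque


class AdaptationModule:
    """Hypothetical stand-in: summarizes recent leg-tracking error as one number."""

    def __init__(self, history_len=5):
        self.errors = deque(maxlen=history_len)

    def update(self, commanded, observed):
        # "The legs should be doing this, but they're doing this."
        self.errors.append(commanded - observed)
        return sum(self.errors) / len(self.errors)


class BasePolicy:
    """Hypothetical stand-in: chooses leg effort given the current estimate."""

    def __init__(self, nominal_effort=1.0, gain=2.0):
        self.nominal_effort = nominal_effort
        self.gain = gain

    def act(self, conditions):
        # Push harder when the legs have recently been falling short.
        return self.nominal_effort + self.gain * conditions


def walk(shortfalls):
    """Run both parts over a sequence of per-step leg shortfalls."""
    adapter, policy = AdaptationModule(), BasePolicy()
    conditions, efforts = 0.0, []
    for shortfall in shortfalls:
        efforts.append(policy.act(conditions))
        commanded = 1.0
        observed = commanded - shortfall  # e.g. the foot sinking into sand
        conditions = adapter.update(commanded, observed)
    return efforts


# Five steps on hard ground, then five steps where each stride falls short.
efforts = walk([0.0] * 5 + [0.2] * 5)
```

Nothing in the loop knows what sand is. The effort rises only because the legs keep falling short of what was commanded, which is the sense of Kumar's line about learning "about feet sinking." The toy also ignores the fact that pushing harder would itself change how far the legs fall short.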

Image Credits: Berkeley AI Research, Facebook AI Research and CMU

After training in simulation, it succeeded handsomely in the real world, as the news release describes it:

“The robot was able to walk on sand, mud, hiking trails, tall grass and a dirt pile without a single failure in all our trials. The robot successfully walked down stairs along a hiking trail in 70% of the trials. It successfully navigated a cement pile and a pile of pebbles in 80% of the trials despite never seeing the unstable or sinking ground, obstructive vegetation or stairs during training. It also maintained its height with a high success rate when moving with a 12 kg payload that amounted to 100% of its body weight.”

You can see examples of many of these situations in videos here or (very briefly) in the gif above.

Malik gave a nod to the research of NYU professor Karen Adolph, whose work has shown how adaptable and free-form the human process of learning how to walk is. The team’s instinct was that if you want a robot that can handle any situation, it has to learn adaptation from scratch, not have a variety of modes to choose from.

Just as you can’t build a smarter computer-vision system by exhaustively labeling and documenting every object and interaction (there will always be more), you can’t prepare a robot for a diverse and complex physical world with 10, 100, even thousands of special parameters for walking on gravel, mud, rubble, wet wood, etc. For that matter you may not even want to specify anything at all beyond the general idea of forward motion.

“We don’t pre-program the idea that it has four legs, or anything about the morphology of the robot,” said Kumar.

This means the basis of the system — not the fully trained one, which ultimately did mold itself to quadrupedal gaits — can potentially be applied not just to other legged robots, but entirely different domains of AI and robotics.

“The legs of a robot are similar to the fingers of a hand; the way that legs interact with environments, fingers interact with objects,” noted co-author Deepak Pathak, of Carnegie Mellon University. “The basic idea can be applied to any robot.”

Even further, Malik suggested, the pairing of basic and adaptive algorithms could work for other intelligent systems. Smart homes and municipal systems tend to rely on preexisting policies, but what if they adapted on the fly instead?

For now the team is simply presenting its initial findings in a paper at the Robotics: Science and Systems conference, and acknowledges that there is a great deal of follow-up research to do. That could include building an internal library of the improvised gaits as a sort of “medium-term” memory, or using vision to predict the necessity of initiating a new style of locomotion. But RMA seems to be a promising new approach to an enduring challenge in robotics.