Google’s new Pixel 2 phones boast the best smartphone camera ever tested by DxOMark. In this 9-minute video by Nat and Friends, learn how Google created this bar-raising camera. The video includes a number of interesting interviews with the Pixel 2 team.

Nat wanted to investigate how the smartphone’s camera can be so good when the whole camera has to fit into a space “about the size of a blueberry.”

The Pixel 2’s camera is a stack of 6 lenses, each of which helps combat aberrations and distortion. When the camera focuses, the lenses move in and out to adjust the image accordingly.

Google has also added optical image stabilization, which works by shifting those lenses left, right, up, and down to counter any movement you introduce while shooting.

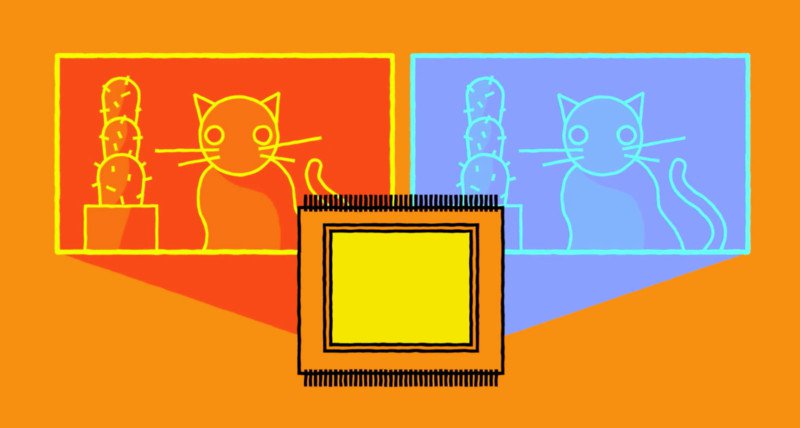

Each pixel on the sensor has a “left and right split,” which improves the sensor’s autofocus and gives it the ability to sense depth. In effect, the sensor captures two images of the scene in front of it from slightly different perspectives. By comparing them, the Pixel 2 can create a depth map and produce shallow depth of field effects in its “Portrait Mode.”
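To make the idea of comparing the two views concrete, here is a minimal sketch in Python. It is not Google’s algorithm: it assumes a single row of brightness values from each half of the split pixels and uses a hypothetical helper, `best_shift`, to find how far the right view is displaced relative to the left. Repeating this for small patches across the frame would give a rough map of shifts, and objects farther from the plane of focus generally show larger shifts.

```python
def best_shift(left, right, max_shift=3):
    """Return the integer shift s that best aligns right[i + s] with left[i].

    Illustrative only: rows are plain lists of brightness values, and
    max_shift must be smaller than the row length.
    """
    best, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        # Compare only the samples where both rows overlap at this shift.
        pairs = [(left[i], right[i + s])
                 for i in range(len(left))
                 if 0 <= i + s < len(right)]
        # Mean absolute difference: lower means a better match.
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best, best_cost = s, cost
    return best


# The same bright edge, seen two samples further right in the second view.
left_view = [0, 0, 10, 50, 10, 0, 0, 0]
right_view = [0, 0, 0, 0, 10, 50, 10, 0]
shift = best_shift(left_view, right_view)
print(shift)
```

A shift of zero would mean the two views agree, which is what an in-focus subject looks like; a portrait effect could then blur regions in proportion to how far their shift is from zero.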


Then there’s HDR+, which uses an algorithm that allows the tiny sensor to “act like a really big one,” giving it greater dynamic range. It combines a burst of several photos into one, much as a standard HDR image does, but HDR+ also realigns each frame before merging to avoid ghosting.
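The align-then-merge idea can be illustrated with a toy Python sketch. This is an assumption-laden simplification, not the HDR+ pipeline: each frame is a single row of brightness values, alignment is one whole-frame integer shift, and the function names `align_offset` and `merge_burst` are made up for this example.

```python
def align_offset(ref, frame, max_shift=2):
    """Find the integer shift s so that frame[i + s] best matches ref[i]."""
    def cost(s):
        # Mean absolute difference over the overlapping samples.
        diffs = [abs(ref[i] - frame[i + s])
                 for i in range(len(ref))
                 if 0 <= i + s < len(frame)]
        return sum(diffs) / len(diffs)
    return min(range(-max_shift, max_shift + 1), key=cost)


def merge_burst(frames, max_shift=2):
    """Align every frame to the first one, then average them sample by sample."""
    ref = frames[0]
    total = list(ref)
    count = [1] * len(ref)
    for frame in frames[1:]:
        s = align_offset(ref, frame, max_shift)
        for i in range(len(ref)):
            j = i + s
            if 0 <= j < len(frame):  # skip samples shifted out of view
                total[i] += frame[j]
                count[i] += 1
    return [t / c for t, c in zip(total, count)]


# Three noisy shots of one bright point; the hand moved between frames.
burst = [
    [0, 0, 8, 0, 0, 0],
    [0, 0, 0, 10, 0, 0],
    [0, 6, 0, 0, 0, 0],
]
merged = merge_burst(burst)
print(merged)
```

Averaging these frames without alignment would smear the bright point across three positions, which is the ghosting the realignment step is meant to prevent. With alignment, the point stays in one place and the frame-to-frame noise averages out.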

The Pixel 2 goes one step further by deciding exactly how much of an “HDR look” each image should get. For example, in a darker scene, HDR+ won’t simply brighten the image until it looks like it was shot in daylight. This means you won’t get the fake, overly processed look that comes with some HDR shots.


Watch the full video above to learn more about how the Pixel 2’s camera works and how Google built it.