Facebook’s internal “Supreme Court” can’t set precedents, can’t make decisions about Facebook Dating or Marketplace, and can’t oversee WhatsApp, Oculus, or any messaging feature, according to the bylaws Facebook proposed today for its Oversight Board. It’s designed to provide an independent appeals process for content moderation rulings. But until at least later this year, it will only be able to challenge decisions to take content down, not decisions to leave it up, so it likely won’t be able to remove misinformation in political ads allowed by Facebook’s controversial policy before the 2020 election.

Oh, and this Board can’t change many of its own bylaws without Facebook’s approval.

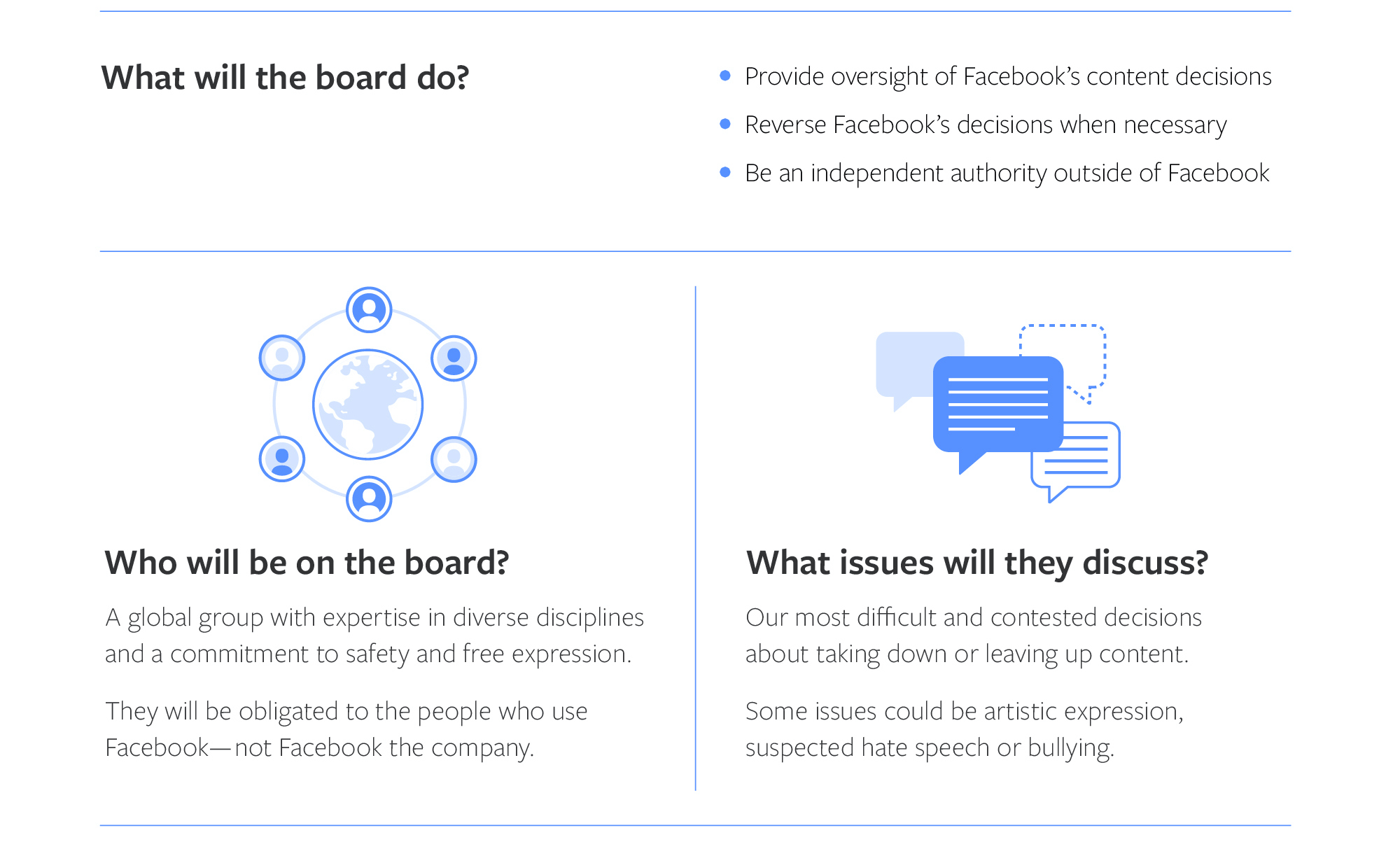

The result is an Oversight Board that does not have deep or broad power to impact Facebook’s ongoing policies, only to clean up a specific instance of a botched decision. It will allow Facebook to point to an external decision maker when it gets in hot water for potential censorship, deferring responsibility.

That said, it’s better than nothing. Currently Facebook simply makes these decisions internally with little recourse for victims. It will also force Facebook to be a little more transparent about its content moderation rule-making, since it will have to publish explanations for why it does or doesn’t adopt the policy change recommendations.

But for Facebook to go to so much work consulting 2,200 people in 88 countries for feedback on its plans to create the Oversight Board, then propose bylaws that keep its powers laughably narrow, feels emblematic of the biggest criticisms of Facebook: that it talks a big game about privacy and safety, but its actions predominantly serve to protect its power and control over social networking.

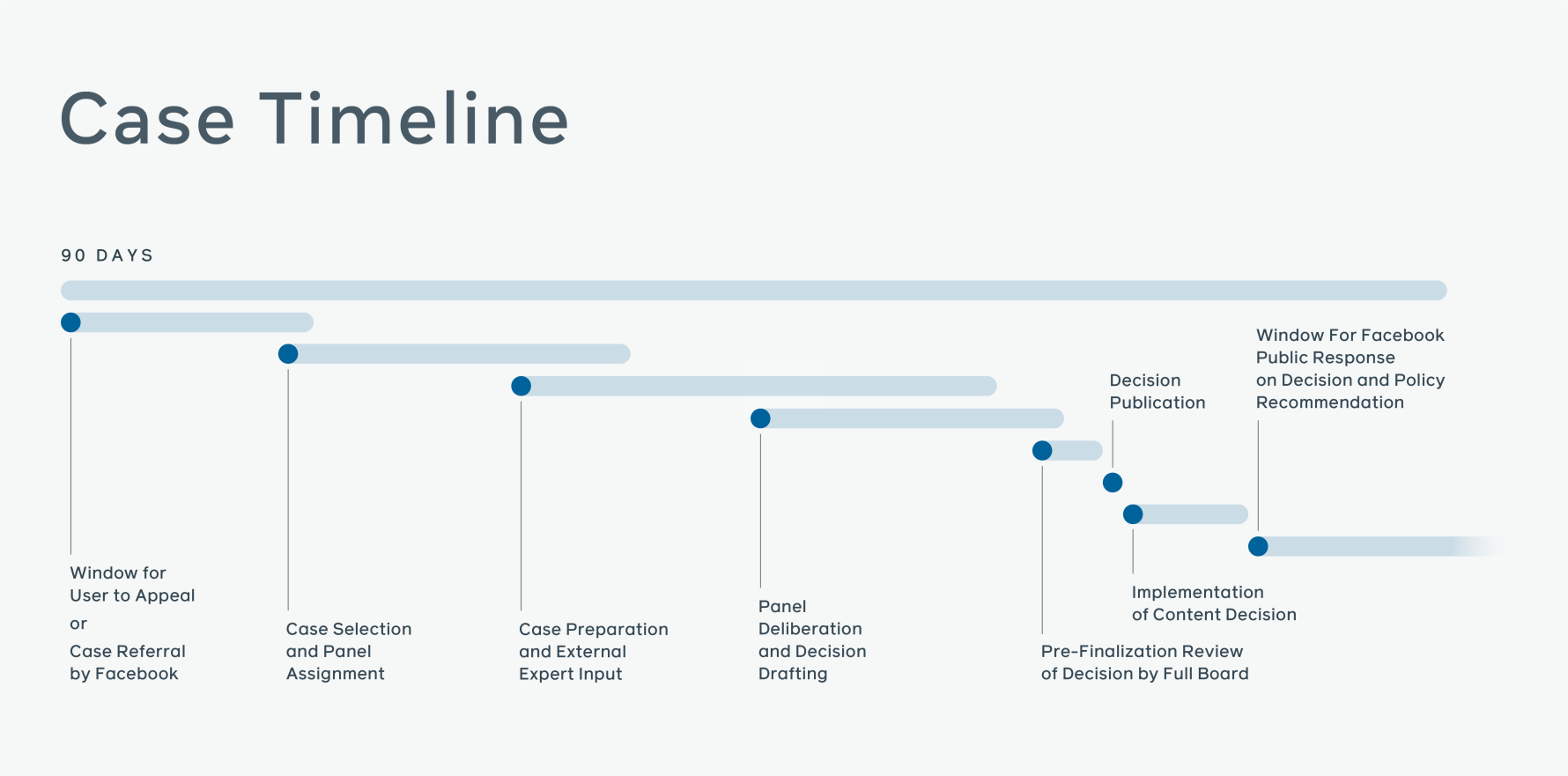

For starters, the Board is funded for six years with an irrevocable $130 million from Facebook, but Facebook could let that funding lapse after that. Decisions can take up to 90 days to make and another 7 days to be implemented, so the Board isn’t designed for rapid response to viral issues.

Without Facebook’s approval, the Board can amend only Article 1 (Oversight Board) sections 1 (Membership), 2 (Administration), and 4 (Transparency and Communications); and Article 4 (The Trust). That leaves out the important Article 1, section 3 (Case Review and Decisions) and more, meaning it can’t alter what kinds of cases are submitted, which are picked up for decision, or what those decisions mean.

One major issue is that Facebook is choosing the co-chairs of the Board who will then pick the 40 initial Board members who’ll choose the future members. Facebook has already picked these co-chairs but won’t reveal them until next month. A controversial or biased co-chair could influence all future decisions of the Board by choosing its membership. We also don’t know if Facebook asked candidates for the co-chair positions about their views on issues like misinformation in political ads and if that influenced who was offered the position.

You can expect a lot of backlash if Facebook chooses an overtly liberal or conservative co-chair, or one firmly aligned with or opposed to the current presidential administration. That backlash would be justified, considering a single co-chair more motivated by politics than what’s right for Facebook’s 2 billion users could have disastrous implications for its content policies.

In one of the most worrying quotes I’ve ever seen from a Facebook executive in 10 years of reporting on the company, VP of global policy Nick Clegg told Wired’s Steven Levy that “We know that the initial reaction to the Oversight Board and its members will basically be one of cynicism—because basically, the reaction to pretty well anything new that Facebook does is cynical.”

So Clegg has essentially disarmed all criticism of Facebook and crystallized the company’s defensive stance…which is one of pure cynicism. He’s essentially saying “Why should we listen to anyone? They hate anything we do.” That’s a dangerous path, and again one that embodies exactly what society is so concerned about: that one of the most powerful companies in the world, in charge of fundamental communications utilities, actually doesn’t care what the public has to say.

Clegg also emphatically told Wired that the Board won’t approach the urgent issue of misinformation in political ads before the 2020 election because the Board needs time to “find its feet”. Not only does that mean the Board can’t rule on perhaps the most important and controversial of Facebook’s policy decisions until the damage from campaign lies is done, it also implies Clegg and Facebook have the ability to influence what cases the Board doesn’t look at, which is exactly what the purported autonomy of the Board is meant to prevent.

In the end, while the Oversight Board’s decisions on a specific piece of content are binding, Facebook has leeway when deciding whether to apply them to similar existing pieces of content. And in what truly makes the Board toothless, Facebook only has to take the Board’s guidance on changes to policy going forward under consideration. The Board can’t set precedents. Recommendations will go through Facebook’s “policy development process” and receive “thorough analysis”, but Facebook can then just say ‘nope’.

I hope the chosen co-chairs and eventual members refuse to ratify this set of bylaws without changes. Facebook’s initial intention for the Oversight Board seemed sound. Some decisions about how information flows in our society should be bigger than Mark Zuckerberg. But the devil in the details says he still gets the final say.

[Correction 4:05pm pacific: We originally published that the Board can’t amend its bylaws without Facebook’s approval. In fact, the Board can’t amend some critical bylaws such as those about Case Review and Decisions without approval, but it does have power over membership and transparency.]