New applications are driving demand for faster and more efficient vision processing. (Source: Ceva)

The Hot Chips conference, now in its 28th year, is known for announcements of “big iron” such as the Power and SPARC chips behind some of the world’s fastest systems. But these days the demand for processing power is coming from new places. One of the biggest is vision processing, driven by the proliferation of cameras; new applications in cars, phones, and all sorts of “things”; and rapid progress in neural networks for object recognition. All of this takes a lot of horsepower, and at this week’s conference, several companies talked about different ways to tackle it.

Perhaps the most newsworthy was Nvidia’s announcement of Parker, its next-generation Tegra processor for premium cars with self-driving features. Manufactured by foundry TSMC on a 16nm process with 3D FinFET transistors, Parker has two CPU clusters (two of Nvidia’s custom 64-bit Denver 2 cores and four off-the-shelf ARM Cortex-A57 cores) and is the first Tegra chip with the company’s latest Pascal graphics architecture. According to a company blog post, Parker is capable of 1.5 teraflops at half precision (FP16). At the conference, Nvidia also showed how Parker’s CPU performance on the SPECint2000 benchmark compared with that of the Apple A9X, Qualcomm Snapdragon 820, Samsung Exynos M1, and HiSilicon Kirin 935.

Nvidia’s results using a standard test suggest that Parker can outperform some of the most powerful mobile processors. (Source: Nvidia)

But Parker isn’t meant for phones. Rather, it’s designed to power the next generation of vehicles with self-driving features, and it includes a number of specialized features. It supports up to a dozen cameras and can decode and encode 4K video at up to 60 frames per second, with Gigabit Ethernet AVB (Audio Video Bridging) to move audio and video streams around the car. It also has full hardware virtualization for up to eight VMs, so that multiple car functions can run safely side by side, and a dual-CAN (controller area network) interface to connect to the car’s numerous electronic control units. Finally, it is Nvidia’s first automotive-rated (ISO 26262) SoC, with resiliency features and an on-die safety manager.

Earlier this year, Nvidia announced the Drive PX 2 module, which has two Parker SoCs (12 CPU cores in total) and two discrete GPUs, delivering a combined 8 teraflops of single-precision (FP32) performance. To put that in perspective, the GeForce GTX 1080, Nvidia’s fastest desktop graphics card aside from the $1,200 Titan X, is capable of around 9 teraflops FP32. Nvidia says more than 80 customers are using the Drive PX 2 module to develop autonomous driving features, and Volvo has said it will use the module to test two self-driving XC90 SUVs starting next year.
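Teraflops figures like these are theoretical peaks, not measured results. A common rule of thumb for estimating them is cores × clock × FLOPs per core per cycle, where one fused multiply-add counts as two operations. The short Python sketch below applies that rule to the GTX 1080’s published specifications (2,560 CUDA cores, 1.733GHz boost clock); the function name and the two-FLOPs-per-cycle assumption are ours, not Nvidia’s.

```python
def peak_tflops(cores: int, clock_ghz: float, flops_per_core_per_cycle: int = 2) -> float:
    """Estimate theoretical peak throughput in teraflops.

    Assumption: each core retires one fused multiply-add (counted as
    2 FLOPs) per clock cycle, the convention behind most GPU peak figures.
    """
    gflops = cores * clock_ghz * flops_per_core_per_cycle  # GHz x ops = GFLOPS
    return gflops / 1000.0  # convert GFLOPS to TFLOPS


# Published GTX 1080 specs: 2,560 CUDA cores, 1.733GHz boost clock.
gtx_1080_fp32 = peak_tflops(2560, 1.733)
drive_px2_fp32 = 8.0  # Nvidia's stated FP32 figure for the Drive PX 2 module

print(f"GTX 1080 peak FP32: {gtx_1080_fp32:.2f} TFLOPS")
print(f"Drive PX 2 / GTX 1080: {drive_px2_fp32 / gtx_1080_fp32:.0%}")
```

The estimate comes out just under 9 teraflops, consistent with the figure quoted for the card. Real workloads rarely sustain these peaks, so they are best read as upper bounds.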

There’s no doubt that the Drive PX 2 is one of the most powerful automotive systems. But other companies claim that DSPs (digital signal processors) can do with milliwatts what takes several watts or more on a GPU.

Movidius talked about the need for more energy-efficient processing at the edge for inferencing, that is, running trained models for object recognition and other tasks on the device itself. “If you have a self-driving car, you simply can’t tolerate the latency required to go out to the cloud for processing,” CTO David Moloney said. At Hot Chips, Movidius demonstrated its tiny Myriad 2 Vision Processing Unit (VPU) in the DJI Phantom 4 drone, along with demos of object recognition and simultaneous localization and mapping (SLAM). Lenovo recently announced it will use Myriad 2 in future VR products, and FLIR has added the VPU to its thermal imaging camera. Movidius also showed performance and efficiency results for Myriad 2 versus unidentified GPUs running GoogLeNet, a 22-layer deep neural network used in the 2014 ImageNet contest.

In comparison to GPUs, Myriad 2 can deliver higher performance while using less power when running neural nets for object recognition, according to Movidius. (Source: Movidius)

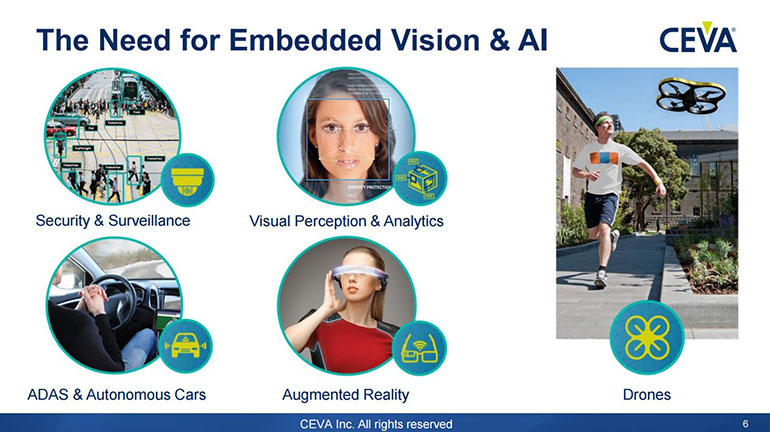

Ceva and Cadence license their DSP cores for use in customers’ chip designs. The Ceva-XM4 is a high-performance vision DSP that can reach speeds of up to 1.5GHz on TSMC’s 28nm HPM process. It is designed for embedded vision and AI in areas such as surveillance cameras, ADAS (advanced driver assistance systems) and autonomous driving, augmented reality, and drones. The company claims that its Ceva Deep Neural Network 2 (CDNN2) software can optimize any neural network built in the popular Caffe or TensorFlow frameworks to run on the DSP. Ceva demonstrated the Ceva-XM4 running the AlexNet neural network to perform real-time object recognition using milliwatts of power. Like Nvidia’s Parker, the XM4 is certified for automotive use, and Ceva said more than 15 chip designs are in the works from Rockchip, Novatek, Brite, Inuitive, and others.
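Ceva did not detail how CDNN2 adapts a network for its DSP. One widely used technique when moving floating-point models onto fixed-point hardware is quantization: storing weights as 8-bit integers plus a scale factor. The minimal Python sketch below shows symmetric per-tensor int8 quantization as a generic illustration only; it is not Ceva’s method, and the function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to int8.

    Returns (q, scale) such that each weight w is approximately q * scale.
    The largest-magnitude weight maps to +/-127.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.01, 1.0]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, worst_error)
```

Each weight is recovered to within half a quantization step (scale / 2), while storage drops to a quarter of what 32-bit floats need, which is one reason fixed-point DSPs can run inference on a small power budget.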

The latest addition to Cadence’s Tensilica line of vision processors, the Vision P6, delivers two to four times the performance of its predecessor on convolutional neural networks, thanks to additional MAC (multiply-accumulate) units, support for half- and single-precision floating point (as well as 8-, 16-, and 32-bit fixed-point data), and enhanced memory parallelism and data movement. Cadence demonstrated the Vision P6 recognizing traffic signs, people, and faces. Announced earlier this year at the Embedded Vision Summit, the Vision P6 will be available starting in October.
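A convolutional layer boils down to long chains of multiply-accumulate operations, which is why adding MAC units raises CNN throughput. The minimal Python sketch below computes a small 2D convolution on 8-bit fixed-point values and counts the MACs it performs. It is a generic illustration, not Cadence’s implementation, and the function name is hypothetical. As in most CNN frameworks, the kernel is not flipped, so strictly speaking this is cross-correlation.

```python
INT8_MIN, INT8_MAX = -128, 127


def conv2d_int8(image, kernel):
    """'Valid' 2D convolution (no padding, stride 1) built from MAC steps.

    image and kernel are lists of lists of ints in the int8 range.
    Returns (output, mac_count).
    """
    for grid in (image, kernel):
        for row in grid:
            for v in row:
                if not INT8_MIN <= v <= INT8_MAX:
                    raise ValueError(f"value {v} is outside the int8 range")

    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    output, mac_count = [], 0
    for y in range(out_h):
        row = []
        for x in range(out_w):
            # Hardware would keep this in a wide (e.g. 32-bit) accumulator so
            # sums of 8-bit products don't overflow; Python ints are unbounded.
            acc = 0
            for ky in range(kh):
                for kx in range(kw):
                    acc += image[y + ky][x + kx] * kernel[ky][kx]  # one MAC
                    mac_count += 1
            row.append(acc)
        output.append(row)
    return output, mac_count


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
edge_kernel = [[1, 0, -1],
               [1, 0, -1],
               [1, 0, -1]]  # simple vertical-edge detector
result, macs = conv2d_int8(image, edge_kernel)
print(result, macs)
```

A 3x3 kernel over a 4x4 input yields a 2x2 output at nine MACs per output value, or 36 in total. Real CNN layers repeat this across many channels and filters, and a vision DSP’s wide SIMD datapath is built to perform many such MACs in parallel.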

One customer of Tensilica DSPs is Microsoft. In a keynote, Microsoft’s Nick Baker revealed for the first time details of the chips that power its HoloLens headset for “Mixed Reality” applications such as 3D design, communication and collaboration, gaming, online learning, product repair and maintenance, and online shopping. In addition to a 14nm Intel Atom Cherry Trail processor running Windows 10, HoloLens includes a custom Holographic Processing Unit (HPU 1.0) that serves as a sensor hub, along with 2GB of RAM and 64GB of flash storage. Manufactured by TSMC on a 28nm process, the HPU has 24 Tensilica DSP cores and 8MB of cache, and is capable of one trillion floating-point operations per second. Baker said Microsoft had tried offloading this sensor processing to an on-die GPU and to arrays of CPUs or image signal processors, but none of those approaches matched the performance per watt of a hybrid design combining programmable x86 CPU cores, DSPs, and fixed-function hardware.

Digital signal processors may deliver better performance per watt for running neural networks at the edge. (Source: Cadence)

While Nvidia’s Tesla GPUs for servers have significantly reduced the time required to train models, leading to breakthroughs in accuracy, much work remains on the inferencing side. Autonomous vehicles, drones, augmented and virtual reality, video surveillance, and other smart devices will all require embedded processors that can handle lots of number crunching without using much power. The vision processing technologies presented at Hot Chips this year suggest we’re getting close, and they should provide the building blocks for some exciting applications in the next few years.