Intel has made several acquisitions and revamped its roadmap for deep learning. The new portfolio extends from Knights Mill and Lake Crest (Nervana) for training neural networks to Xeons, Altera FPGAs and Movidius vision processors for running these models. (I wrote about several of these in a post last week.)

Now Intel has added another chip to the mix with the announcement of Loihi. This one, however, is a bit different. For starters, it isn’t part of the company’s AI Products Group, but rather Intel Labs, which spent about six years developing the test chip. In addition, Loihi has a completely different, “self-learning,” neuromorphic architecture with the potential to tackle a broader class of AI problems.

The concept of a computer that mimics the brain isn’t new–Caltech scientist Carver Mead began working on it in the 1980s and coined the term “neuromorphic”–but these have largely remained science projects with little commercial application. In an interview, Narayan Srinivasa, Senior Principal Engineer and Chief Scientist at Intel Labs, explained why the company chose to go down this path.

Moore’s Law scaling has allowed Intel to pack a lot more cores in a given area. (This week Intel announced its first mainstream desktop chips with six cores and began shipping the Core i9 chips with up to 18 cores.) But the truth is that many workloads can’t exploit all those cores, Srinivasa said, which has led to a phenomenon known as dark silicon. In other words, it simply isn’t efficient to light up all those transistors all the time. To address this, the industry needs both a more efficient architecture and complementary workloads that can take advantage of all these cores.

Intel and others have been inspired by the design of the brain because it is extremely efficient at what it does. The human brain has an estimated 100 billion neurons, each with up to 10,000 synaptic connections–or a total of some one quadrillion synapses–yet operates on less power than a lightbulb. Of course, neuromorphic chips can’t come close to this scale yet. The 14nm Loihi test chip is organized into 128 clusters, each containing 1,024 neurons, for a total of around 130,000 neurons with 130 million synapses distributed across them.
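The scale figures above are easy to sanity-check. The short Python sketch below uses only the numbers quoted in this article; the variable names are illustrative, and the brain figures are rough estimates, so the ratios should be read as orders of magnitude rather than precise values.

```python
# Back-of-the-envelope check of the scale figures quoted in the article.
# All inputs come from the text; the brain numbers are rough estimates.
BRAIN_NEURONS = 100e9          # ~100 billion neurons
SYNAPSES_PER_NEURON = 10_000   # upper bound on connections per neuron

LOIHI_CLUSTERS = 128
NEURONS_PER_CLUSTER = 1_024
LOIHI_SYNAPSES = 130e6         # ~130 million synapses

# Upper-bound synapse count for the brain: neurons x connections per neuron.
brain_synapses = BRAIN_NEURONS * SYNAPSES_PER_NEURON

# Exact neuron count implied by the cluster layout.
loihi_neurons = LOIHI_CLUSTERS * NEURONS_PER_CLUSTER

print(f"Brain synapses (upper bound): {brain_synapses:.0e}")
print(f"Loihi neurons: {loihi_neurons:,}")
print(f"Average synapses per Loihi neuron: {LOIHI_SYNAPSES / loihi_neurons:.0f}")
print(f"Brain-to-Loihi neuron ratio: {BRAIN_NEURONS / loihi_neurons:,.0f}")
```

The cluster layout works out to exactly 131,072 neurons, which is where the "around 130,000" figure comes from, and the brain's upper-bound synapse count comes to one quadrillion.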

But the chip operates on similar principles–at least to the extent that we understand how the brain works. When the pulses or ‘spikes’ sent to a neuron reach a certain activation level, it sends a signal over the synapses to other neurons. Much of the action, however, happens in the synapses, which are ‘plastic,’ meaning that they can learn from these changes and store this new information. Unlike a conventional system with separate compute and memory, neuromorphic chips have lots of memory (in this case SRAM caches) located very close to the compute engines.
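To make the spike-and-threshold idea concrete, here is a minimal Python sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is not Intel's design: the article does not describe Loihi's neuron model in detail, and the function name `simulate_lif` and the `weight`, `leak` and `threshold` values are assumptions chosen purely for illustration. Synaptic learning (plasticity) is left out to keep the example short.

```python
def simulate_lif(input_spikes, weight=0.4, leak=0.9, threshold=1.0):
    """Return the time steps at which a leaky integrate-and-fire neuron fires.

    input_spikes: sequence of 0/1 values, one per time step.
    weight, leak and threshold are illustrative values, not Loihi parameters.
    """
    potential = 0.0
    output_times = []
    for t, spike in enumerate(input_spikes):
        # The stored potential decays a little each step, then any
        # incoming spike adds the synaptic weight to it.
        potential = potential * leak + weight * spike
        if potential >= threshold:
            # Activation level reached: emit a spike and reset.
            output_times.append(t)
            potential = 0.0
    return output_times


# Closely spaced input spikes push the neuron over its threshold...
print(simulate_lif([1, 1, 1, 0, 0, 1, 1, 1]))
# ...while the same weight with widely spaced spikes never does.
print(simulate_lif([1, 0, 0, 0, 1, 0, 0, 0]))
```

With these made-up parameters, three spikes in a row are enough to make the neuron fire, while isolated spikes leak away before they can accumulate.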

There is no global clock in these spiking neural networks–the neurons only fire when they have reached an activation level. The rest of the time they remain dark. This asynchronous operation is what makes neuromorphic chips so much more energy efficient than CPUs or GPUs, which are “always on.” The asynchronous technology has its roots in Fulcrum Microsystems, a company Intel acquired back in 2011 that had developed it for Ethernet switch chips, but Srinivasa said it was just “screaming to be used in other technology.”
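A rough way to see why this matters is to count how much work each approach does. The Python sketch below compares a clocked design that updates every neuron on every tick with an event-driven one that only touches the neurons a spike is delivered to. It counts updates only, which is a crude stand-in for energy, and the network size, spike times and connections are invented for illustration rather than taken from Loihi.

```python
def clocked_updates(num_neurons, num_steps):
    """Work done when every neuron is updated on every clock tick."""
    return num_neurons * num_steps


def event_driven_updates(spike_events, fan_out):
    """Work done when only the targets of each spike are updated.

    spike_events: list of (time_step, neuron_id) pairs.
    fan_out: dict mapping a neuron id to the list of neurons it connects to.
    """
    return sum(len(fan_out.get(neuron_id, ())) for _, neuron_id in spike_events)


# Hypothetical toy network: 4 neurons observed for 100 time steps,
# with only three spikes occurring in that window.
fan_out = {0: [1, 2], 1: [3], 2: [0, 3]}
spike_events = [(3, 0), (10, 2), (42, 1)]

print("Clocked updates:     ", clocked_updates(4, 100))
print("Event-driven updates:", event_driven_updates(spike_events, fan_out))
```

The sparser the activity, the wider the gap, which is the intuition behind leaving idle neurons dark.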

This is also what makes spiking neural networks a promising solution for other modes of learning. A GPU is well-suited for supervised learning because these deep neural networks can be trained offline using large sets of labeled data that can keep massive arrays busy. These models are then transferred to and run–a process known as ‘inferencing’–on CPUs, FPGAs or specialized ASICs. Neuromorphic chips can be used for supervised learning too, but because they are intrinsically more efficient, spiking neural networks should also be ideal for unsupervised or reinforcement learning with sparse data. Good examples of this include intelligent video surveillance and robotics.

Loihi is not the first neuromorphic chip. IBM’s TrueNorth, part of a longtime DARPA research project, is perhaps best known, but other efforts have included Stanford’s Neurogrid, the BrainScaleS system at the University of Heidelberg and SpiNNaker at the University of Manchester. These rely on boards with multiple chips–in some cases with analog circuits–that are trained offline. Intel says the same Loihi chip can be used for both real-time training and inferencing, and it learns over time, getting progressively better at what it does. “We are the only ones that can handle all of these modes of learning on a single chip,” Srinivasa said.

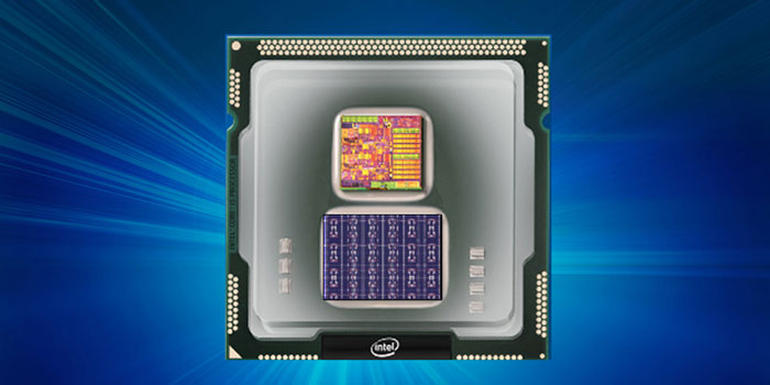

The all-digital design actually consists of two 14nm chips in the same package: a simple x86 processor that does a lot of the pre-processing (it takes the data, encodes it in a format for spiking neural networks, and transmits it to the neuromorphic chip) and the neuromorphic mesh. Loihi is not a co-processor; the x86 chip will have a boot environment and lightweight OS and act as host, though the system-level details are still being worked out.
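Intel has not said how the x86 chip encodes data for the neuromorphic mesh, but one common scheme in the spiking-network literature is rate coding, in which a larger input value produces more frequent spikes. The Python sketch below shows a simple deterministic version; the function name `rate_encode` and the assumption that inputs are normalized to the range 0 to 1 are illustrative choices, not details of Loihi.

```python
def rate_encode(value, num_steps):
    """Encode a value in [0, 1] as a spike train of 0s and 1s.

    An accumulator grows by `value` each step and emits a spike whenever
    it reaches 1.0, so the spike rate is proportional to the value.
    This is an illustrative scheme, not Loihi's actual encoding.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be between 0 and 1")
    spikes = []
    accumulator = 0.0
    for _ in range(num_steps):
        accumulator += value
        if accumulator >= 1.0:
            spikes.append(1)
            accumulator -= 1.0
        else:
            spikes.append(0)
    return spikes


# A brighter pixel (0.5) spikes twice as often as a dimmer one (0.25).
print(rate_encode(0.5, 8))
print(rate_encode(0.25, 8))
```

A real pre-processing stage would likely be more elaborate, but the idea is the same: turn conventional numeric data into a stream of spike events before it reaches the neuromorphic hardware.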

The first chips will be fabricated in November with plans to test them with “leading university and research institutions with a focus on advancing AI” in the first half of 2018. By that time, Intel also plans to complete a software kit that will make it easier to convert dataflow graphs to run as spiking neural networks for supervised, unsupervised and reinforcement learning.

With roughly the same number of neurons as in the brain of a mole, Loihi is a relatively small neuromorphic chip, but Intel says the architecture will scale easily, taking advantage of the company’s advanced process technology. “There is nothing preventing us from doing a lot more neurons and synapses because they are all the same,” Srinivasa said.

But for now it remains a research project. Indeed, the name Loihi may be a subtle message about how much work is still left to do. Located off the coast of the island of Hawaii, Lo’ihi is the only volcano in the Hawaiian seamount chain that is in the earliest stages of development. These submarine volcanoes go through cycles of eruption, lava buildup, and erosion for millions of years to form islands. Hopefully it won’t take that long to see the next big breakthroughs in AI.