A couple of years ago, when I was working at Samsung around the time the Gear VR was launched, there were lots of ideas flying around about services to offer alongside the device.

Many (most) of these ideas were related to hosting 360 video content, and our boss David Eun (ex-YouTube) often reminded everyone that YouTube would be the YouTube of VR. He meant that content on this new VR platform offered an experience similar enough to existing video that a new service couldn’t compete with the incumbent. It turned out he was pretty much right.

I don’t think this is going to be the case for AR. I think there’s a genuine difference, in that AR represents a new mass medium, not a new form of a current medium.

This means that the iTunes of AR quite possibly won’t be iTunes. It will be an AR-Native application that changes the way we experience art & entertainment. iTunes may adapt, but that’s not guaranteed at all…

So why is AR different to VR Video?

The difference is that VR video is essentially the same old 2D video we know & love, but super-duper widescreen (so wide we have to look sideways to see it all). It isn’t a new thing. AR is a new thing. For the first time, the media is experienced as part of the real world. Context is the new attribute.

It’s multi-sensory, dynamic and interactive, and it can now be in my living room or on the street, where a change in context changes the experience entirely. To illustrate the difference: if you experience Star Wars in the cinema, or even in VR, you are “escaping” to another galaxy and are immersed in that universe. But it’s been a real challenge to bring Star Wars to AR in a way that isn’t a novelty, because you need to somehow deal with the cognitive dissonance of “Why is R2-D2 in my kitchen?”

The context changes the experience.

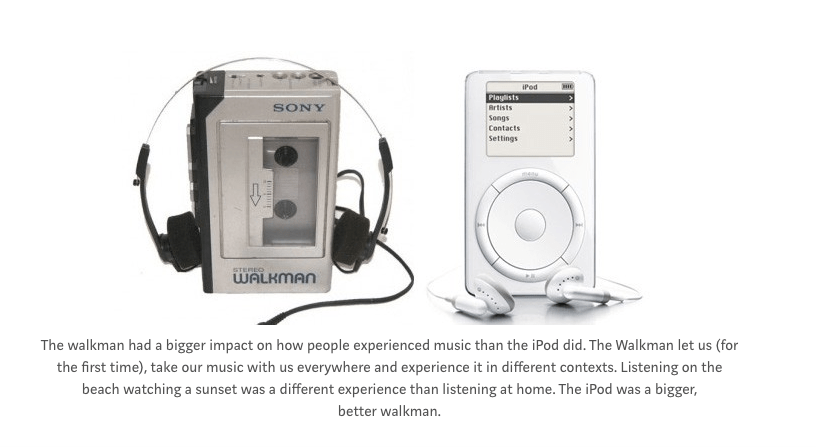

If we look at music, AR could change the way we experience it much as recordings did when they were invented, or as the Walkman did later. Each time, in one stroke, the context in which we experienced music changed entirely. Inventions like the iPod, radio or streaming didn’t really change the medium beyond adding quantity, but going from live to take-home (the gramophone), or from at-home to out-and-about (the Walkman), changed the experience entirely because the context in which we experienced the music changed.

With AR, we’re going to experience music and art (static or interactive) in context with our lives. AR can adapt the “real world” to let us inhabit the emotional landscapes we imagine when we hear our favorite track. Katy Perry fans may see their world literally become a little more neon when her music comes on. The bass line from a song tied to a significant time in our lives may subtly play in the background when we are near a place connected to that time, or when similar contextual conditions are triggered (a date/time, or a person nearby?).

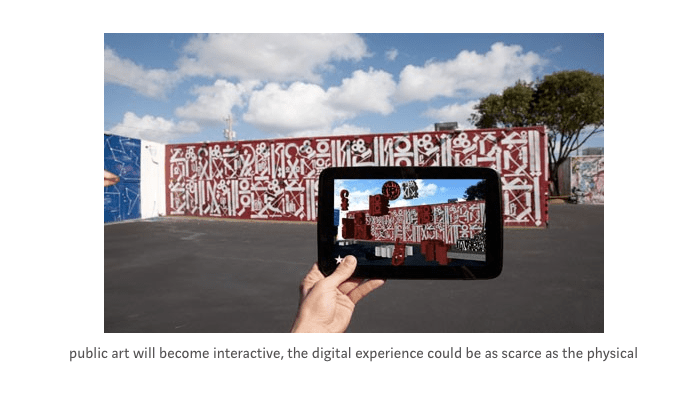

In a previous post I talked about how AR + blockchain has the potential to re-enable scarcity in the digital world. This is one of the most powerful economic disrupters that AR will enable, yet very few people are thinking deeply about it. It could play out through certain types of digital street art that start out pixelized and only become “hi-res” after a certain number of people have seen them (or the reverse: the art decays as more people see it, so only the first 50 viewers get the full experience). Digital paintings (or sculptures) could be cryptographically certified as a limited-edition “original”, with all copies slightly degraded.

This is going to radically change the way we experience (and create) art.

What will it mean to have our music and visuals in-context?

When we think about music or art and context, there’s an example we’ve all experienced. Compare listening to music at home with sitting on a beach overlooking the sunset and choosing a track that’s perfect for that moment. The context becomes part of the experience and emotionally improves it, and in a small way the result is a collaboration between you & the artist.

AR takes this to a whole new level. An AR device will have a greater awareness of the real world than any smartphone can have. This means its ability to match a song, image or visual effect to the moment (either automatically or manually) goes far beyond just selecting a track from a playlist.

Artists are now able to give more control over to the audience, so the experience can be far more personal. This could involve sharing the stems, connecting sounds or visuals from an album to individual objects, or subtly changing the ambience of an entire room (imagine an iPhone X style face mask, but applied to your walls & ceiling). We have added context in the past by making a mix-tape (async creation) or a Spotify playlist/feed (near real-time), but when context is applied in real time and shared, which is native to AR, new forms of collaborative expression emerge.

I believe this opens the door for a new type of Open Source movement to emerge. For many years a coder (or company) would write the code and release it under their name. You could buy it and use it, but that was it. Open source meant that creators could publish both entire products and the components that made up those products. The GPL licence meant credit was recognized (though payment was problematic pre-Bitcoin). Further, when great creators (both famous & anonymous coders) were able to create together, building on each other’s work, far better products were invented. Today open source software underpins almost the entire Internet.

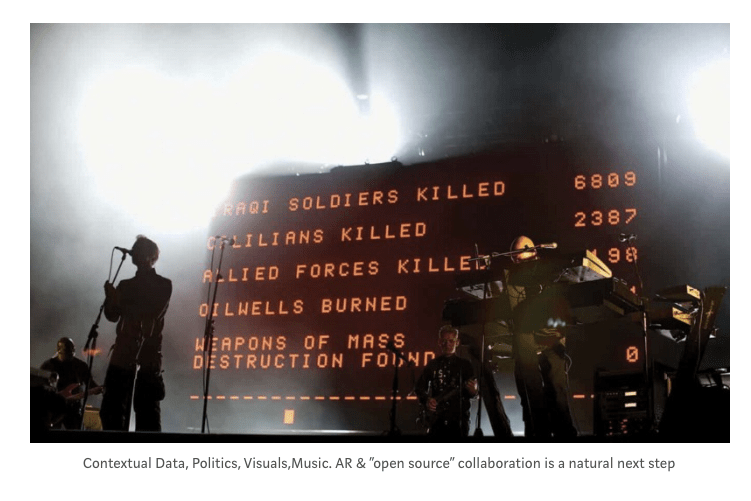

Today art and content are created in a very similar way to the closed source model. An artist or label/studio produces the product & we consume it the way we are told. There’s very little scope for the audience to apply their own context, or to reuse components in a reimagined expression (sampling being an exception, and again credit & payments are a challenge, to say the least). User-generated content web platforms went part way towards making creation a two-way collaboration, and AR can complete that journey.

Art goes from being a static “created & done” process involving only the artist(s) to a living process involving both artist and audience. The process itself (the system, the code and the assets) becomes the art.

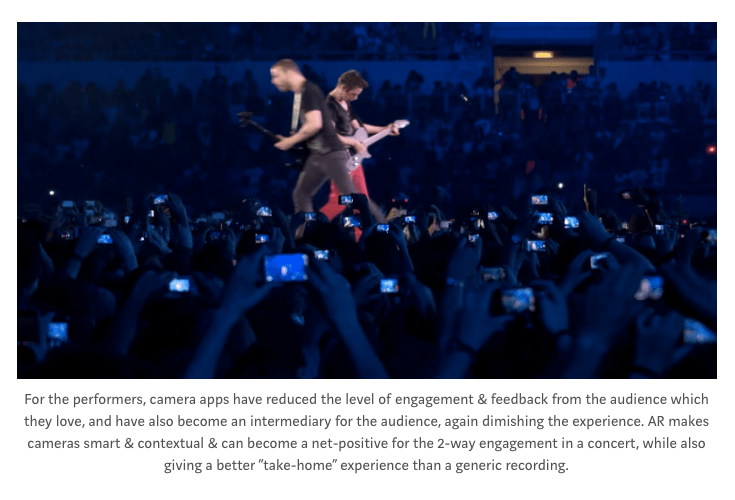

The one area today where artist and audience almost create together is the live concert. This is where the context of the audience, the current social environment, the weather, etc. all come together to make something unique and greater than the sum of its parts. The massive opportunity for AR is that today there is no way to connect with the audience digitally in that moment of shared, heightened emotional experience.

The crowd all use their generic camera apps, but that information is “single user”. An AR-enabled smart-camera app could provide additional layers of context (live data feeds, responsiveness to what others in the crowd or watching remotely are doing, augmented stage shows, etc.), then capture all the data around that and package it into a take-home experience. That potential is extremely compelling.

Because real-world context is the defining characteristic of AR, it’s natural that, as AR is applied to art & content, the line between creator and audience will become far less clear. As with open source software, enabling creators to easily build on each other’s work will mean even greater things are created.

Who is going to figure this out?

I can tell you who won’t figure it out, and that’s the marketing arms of labels and studios! Nearly everything I’ve seen so far regarding music/art in AR has used the medium as a novelty-based marketing channel to promote “the real product”, which is a YouTube view or a Spotify listen. A Taylor Swift Snap filter, or volumetric video of a piano performance replayed in my bedroom, is just trying to squeeze the old medium into the new, like encyclopedias publishing on the web before Wikipedia came along. It’s dinosaurs facing the ice age. What are the AR mammals?

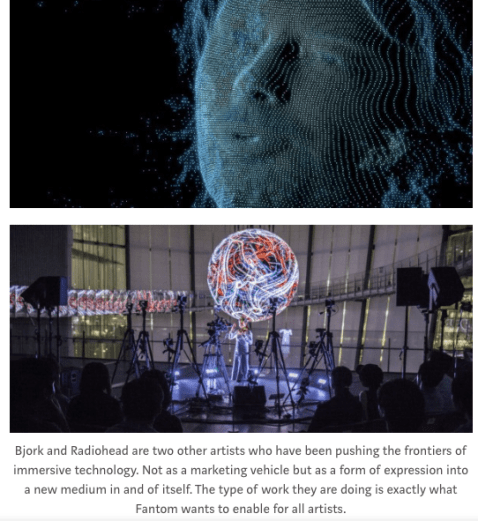

I think we’re going to see some rapid evolution as artists experiment directly in AR. The symbiosis between the artist and the toolmaker is going to be an incredibly important relationship over the next few years. I’m starting to see immersive toolmakers replacing steps of existing creative workflows, artists struggling to express their AR ideas with crude tools, and (sadly) some high-profile artists letting their labels experiment for them. Occasionally I’ll meet someone like Molmol Kuo & Zach Lieberman from yesyesno, who can create both the tools and the art, and they are doing groundbreaking work.

Startups like Cameraiq.co are helping artists connect with new camera-centric tools, both at live events and in everyday life. The GlitchMob in LA are also pushing new ideas around music and AR and fusing mediums. TiltBrush is finding cracks to escape from VR into AR. My wife Silka Miesnieks is also exploring this world of enabling creative and artistic expression through her work leading the Adobe Design Lab. It’s a Cambrian explosion of ideas right now.

Fantom

There’s one startup that excites me more than all the others I’ve seen though, and that’s Fantom and Sons Ltd from the UK. In my opinion, they have all the pieces to natively adapt to this new medium. To me they look like the first mammal of the AR content/art eco-system.

Fantom launched a simple smartphone app last year as an experiment to validate some ideas around merging visual and audio components into an emotional sensory experience, and to support a preview of some new music. The company is still in semi-stealth mode and will announce its plans in more detail soon, but I have permission to share some of why Fantom excites me personally (without giving away any details of what’s coming in 2018!).

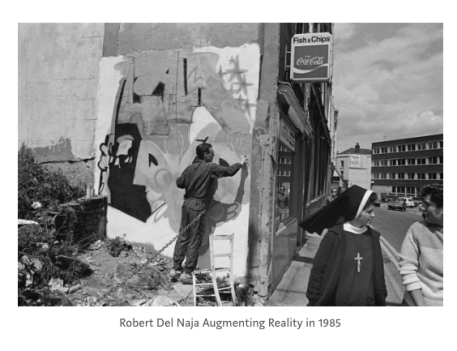

Fantom is co-founded by Robert Del Naja who is better known as a co-founder of Massive Attack.

While celebrities getting into tech startups isn’t a new thing, what most interested me about what Fantom was setting out to achieve was that, listening to Rob share his vision, it was clear he was truly interested in the new medium and in how it could enable new forms of artistic expression, tipping the power balance of the industry to connect artist and audience more directly (and, in fact, blur the line between them). This isn’t a marketing exercise, and the whole team had a very mature & realistic strategy that was incredibly ambitious. AR isn’t a gimmick but one key enabler, along with music stems, the blockchain and several others, for building a product that could become a platform for this new medium and enable all artists: musicians, visual artists and coders.

The other dimension that gives the project credibility is that Rob’s career has been defined by amazing collaborations, minimal ego, strong political values and the fusing of different musical styles and mediums to create art that has resonated globally. The fact that visual art has also been a big part of Rob’s life since the beginning only adds to the natural fit between AR and Fantom. There’s a lot of interest in AR from high-profile musicians, but to me many of them seem to be chasing the next shiny thing, while Fantom feels like a natural next step in a long and successful creative career.

While Rob is the creative director of Fantom (and not a creative director in the Will.I.Am-at-Intel sense, but one who is actually hands-on, creating himself and directing other amazing artists!), this isn’t a celebrity project. Marc Picken, Andrew Melchior, Robert Thomas, Yair Szarf, The Nation and the whole team are building a startup to succeed with or without any one individual and to serve the entire industry. Fantom’s mission is to build the platform that enables artists to create great art in this new medium, ensuring all contributors are recognized without limiting creative expression. Massive Attack will simply happen to be the first band to build on the platform.

The list of collaborators and friends interested in working on this project, reaching far beyond Massive Attack, promises an amazing first few years. That matters not just for guaranteed exposure, but because the world’s greatest visual and music artists will be hands-on in defining this new greenfield medium, while new and emerging artists will be able to work with the same tools and assets without restrictions. This isn’t going to be Tidal for AR, trying to succeed through celebrity licensing and commercial leverage, but instead a place where artists who want to define a genre can find like-minded collaborators and the best toolmakers to enable them.

Support from major industry partners is coming together, including 6D.ai, to help push the limits of the AR experience. Fantom is actively looking for great technologists to work with (either coder-artists like Zach & Molmol who could integrate their tools, or UK-based engineers who may want to join the team full-time) and for creative artists who want to create on the platform and explore what AR-Native means for their art.

AR as a new medium is both visual and auditory. There are very few world-class artists who can do both.

When a new medium emerges, as the Web did, it’s the artists who define the early interactions and successes, not the MBAs. Fantom is special in that the coders are just as much a part of the artistic process as the musicians or painters.

2018 is going to be an exciting year. As an entrepreneur, I’m excited at the prospect of large, dominant companies like YouTube or Spotify being exposed to new forms of competition for people’s hearts and minds.

But I’m even more excited that the art and artists we love will become even more absorbed into our daily lives. Where once our “collaboration” with the artist was limited to choosing where to listen to a song, now it will be far more personal & powerful. Creating, and experiencing the creations of others, is one of the core aspects of being human that AI and robots can’t replace. This is an area where AR truly can improve humanity, and our lives will become richer through the work we can all do on platforms like Fantom.

Thanks to Silka Miesnieks for help preparing this article